How Scientists Are Finally Revealing AI’s Hidden Thoughts – SciTechDaily

Scientists have developed a groundbreaking method to uncover how deep neural networks “think,” finally shedding light on their decision-making process.

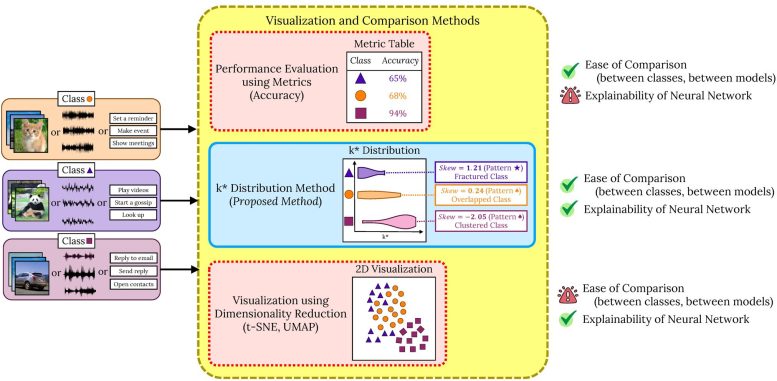

By visualizing how AI organizes data into categories, this method supports safer, more reliable AI for real-world applications like healthcare and self-driving cars, taking us one step closer to truly understanding AI.

Limitations of Current Visualization Methods

Currently, methods for visualizing how AI organizes information rely on simplifying high-dimensional data into 2D or 3D representations. These methods let researchers observe how AI categorizes data points, for example by grouping images of cats close to other cats while separating them from dogs. However, this simplification comes with critical limitations.

“When we simplify high-dimensional information into fewer dimensions, it’s like flattening a 3D object into 2D: we lose important details and fail to see the whole picture. Additionally, this method of visualizing how the data is grouped makes it difficult to compare between different neural networks or data classes,” explains Vargas.

Introducing the k* Distribution Method

In this study, the researchers developed a new method, called the k* distribution method, that more clearly visualizes and assesses how well deep neural networks categorize related items together.

The method works by assigning each input data point a “k* value,” which indicates the distance to the nearest unrelated data point. A high k* value means the data point is well separated (e.g., a cat far from any dogs), while a low k* value suggests potential overlap (e.g., a dog that lies closer to a cat than other cats do). When looking at all the data points within a class, such as cats, this approach produces a distribution of k* values that provides a detailed picture of how the data is organized.

“Our method retains the higher dimensional space, so no information is lost. It’s the first and only model that can give an accurate view of the ‘local neighborhood’ around each data point,” emphasizes Vargas.

Impact and Applications of the New Method

Using their method, the researchers revealed that deep neural networks sort data into clustered, fractured, or overlapping arrangements. In a clustered arrangement, similar items (e.g., cats) are grouped closely together, while unrelated items (e.g., dogs) are clearly separated, meaning the AI is able to sort the data well. In a fractured arrangement, similar items are scattered across a wide space, and in an overlapping arrangement, unrelated items occupy the same space. Both of these arrangements make classification errors more likely.

Vargas compares this to a warehouse system: “In a well-organized warehouse, similar items are stored together, making retrieval easy and efficient. If items are intermixed, they become harder to find, increasing the risk of selecting the wrong item.”

AI in Critical Systems and the Future

AI is increasingly used in critical systems like autonomous vehicles and medical diagnostics, where accuracy and reliability are essential. The k* distribution method helps researchers, and even lawmakers, evaluate how AI organizes and classifies information, pinpointing potential weaknesses or errors. This not only supports the legislative processes needed to safely integrate AI into daily life but also offers valuable insights into how AI “thinks.” By identifying the root causes of errors, researchers can refine AI systems to make them not only accurate but also robust, capable of handling blurry or incomplete data and adapting to unexpected conditions.

“Our ultimate goal is to create AI systems that maintain precision and reliability, even when faced with the challenges of real-world scenarios,” concludes Vargas.

Reference: “k* Distribution: Evaluating the Latent Space of Deep Neural Networks Using Local Neighborhood Analysis” by Shashank Kotyan, Tatsuya Ueda and Danilo Vasconcellos Vargas, 16 September 2024, IEEE Transactions on Neural Networks and Learning Systems.

DOI: 10.1109/TNNLS.2024.3446509
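
For readers who want a concrete feel for the k* idea, here is a minimal sketch in Python. It is an illustration under stated assumptions, not the authors' implementation. It assumes the “distance to the nearest unrelated data point” is measured as a neighbor count: for each point, how many same-class points lie closer than the nearest point of a different class. The function names `k_star_values` and `k_star_distribution` and the toy 2D coordinates are hypothetical, chosen only for this example.

```python
import math
from collections import defaultdict


def k_star_values(points, labels):
    """Hypothetical helper: for each point, count the same-class points
    that lie strictly closer than the nearest different-class point."""
    values = []
    for i, (p, label) in enumerate(zip(points, labels)):
        # Distance from p to the nearest point carrying another label.
        nearest_other = min(
            (math.dist(p, q) for q, other in zip(points, labels) if other != label),
            default=math.inf,
        )
        # Same-class neighbours (excluding p itself) inside that radius.
        k = sum(
            1
            for j, (q, other) in enumerate(zip(points, labels))
            if j != i and other == label and math.dist(p, q) < nearest_other
        )
        values.append(k)
    return values


def k_star_distribution(points, labels):
    """Hypothetical helper: group the per-point values by class label."""
    per_class = defaultdict(list)
    for k, label in zip(k_star_values(points, labels), labels):
        per_class[label].append(k)
    return dict(per_class)


# Toy 2D stand-in for a latent space (assumed data):
# three tightly grouped cats, one stray cat sitting next to two dogs.
points = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1), (5.0, 5.0), (5.1, 5.0), (5.0, 5.2)]
labels = ["cat", "cat", "cat", "cat", "dog", "dog"]

distribution = k_star_distribution(points, labels)
print(distribution)  # {'cat': [3, 3, 3, 0], 'dog': [0, 0]}
```

In this toy data the three grouped cats score high, while the stray cat and both dogs score zero because a point from the other class is their closest neighbor. Under the assumptions above, that mix of high and low values within one class is the kind of pattern the article describes as fractured or overlapping.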