Things are so desperate at OpenAI that Sam Altman is starting to sound like Gary Marcus

Once upon a time, Sam Altman promised that AGI was imminent. In response to my warnings that scaling wouldn't get us to AGI and might not turn a profit, he called me a "troll" and a "mediocre deep learning skeptic".

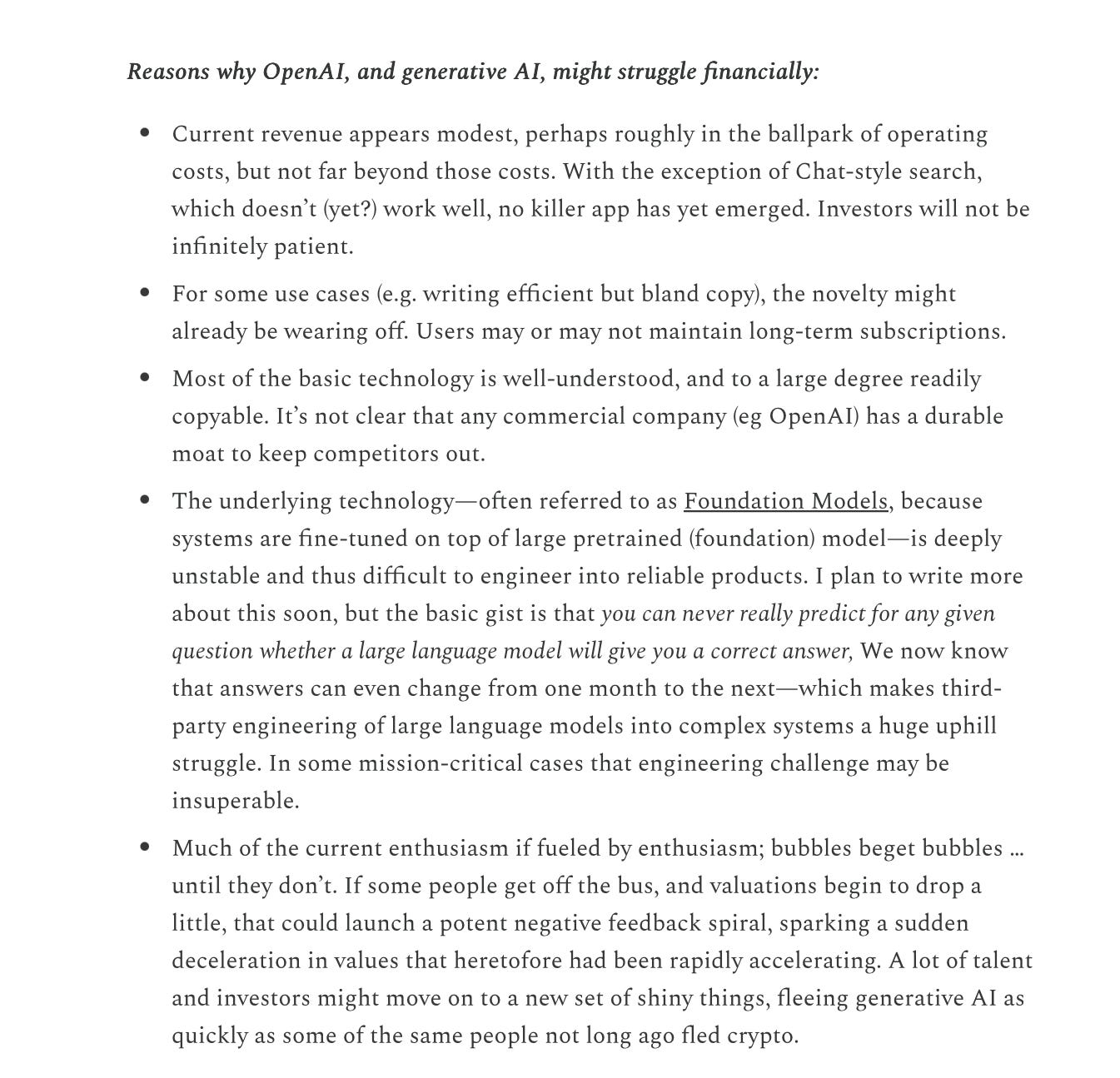

Hard to imagine that he was pleased when I said that scaling would reach a point of diminishing returns and that the economics of large language models were questionable, as in this Substack essay from August 2023, which largely anticipated where we are now:

But things just changed. GPT-5 finally dropped, and it was an immense disappointment. Nobody is buying Altman's "AGI is imminent" act anymore.

So Altman has been busy retrenching like mad, with TV appearances and private conversations with journalists. Last week he said nobody really knew what AGI was anyway (sour grapes!), implicitly acknowledging it might not be so close after all, and downplaying the goal of AGI altogether.

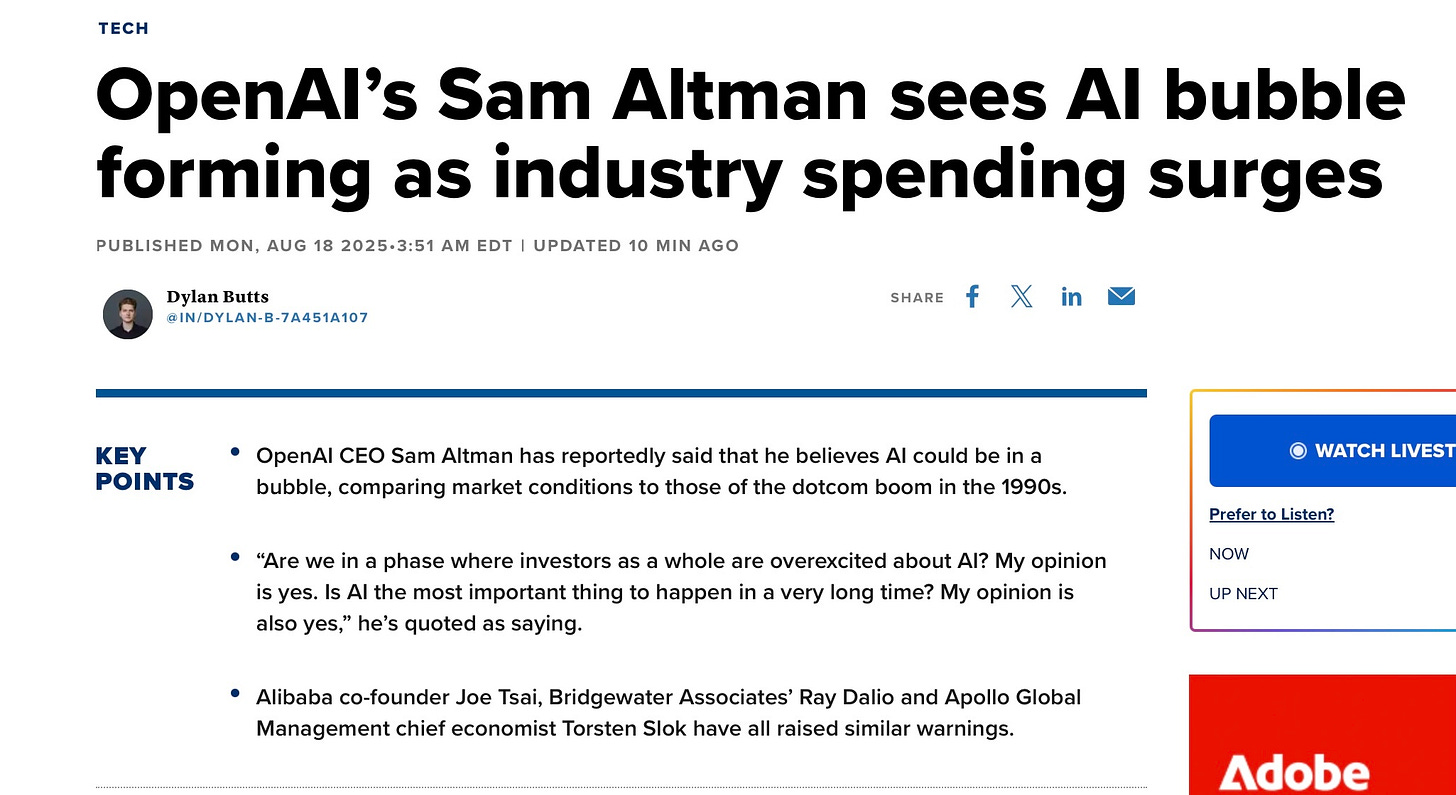

Now he is also apparently acknowledging that AI might be in a bubble:

§

I have seen this dynamic before: a one-time AI hypester switching sides to a position much closer to mine, albeit only under considerable pressure.

The previous time, some readers may remember, involved Yann LeCun, Meta's chief AI scientist. LeCun had told me in 2022 that deep learning was categorically not "hitting a wall", only to switch in December of 2022 to dismissing LLMs at every chance he got, calling LLMs an off-ramp and saying that they "suck". (LeCun has never once publicly acknowledged that I was making similar points for years. He would say, in his defense, that he is not a carbon copy of me, rightly pointing out that LLMs aren't all that deep learning has to offer, and arguing that my famous "deep learning is hitting a wall" title was too broad. But the fact is that LLMs are, for now anyway, the best that deep learning has to offer. And no other form of deep learning has yet solved the issues of reasoning, hallucinations, stupid errors, and factuality, either. At least until some new technique is invented, deep learning has hit a wall on these issues.)

What caused LeCun to switch his tone was (or so I strongly suspect) the November 2022 runaway success of ChatGPT, which thoroughly eclipsed closely related work that LeCun's company was building at the time (a short-lived LLM called Galactica, which LeCun had hyped just a couple of weeks earlier). In other words, LeCun was warm to LLMs when his company was in the lead, but once it lost the lead, he was hostile to LLMs thereafter.

The dynamic with Altman is slightly different; it's not that his lead is gone, but that his lead (and his reputation) has dwindled after the disappointment of GPT-5. He is now on a mission to scale down the insane expectations that he built and couldn't meet, and searching for a way to remain relevant, even though he didn't come up with the goods.

§

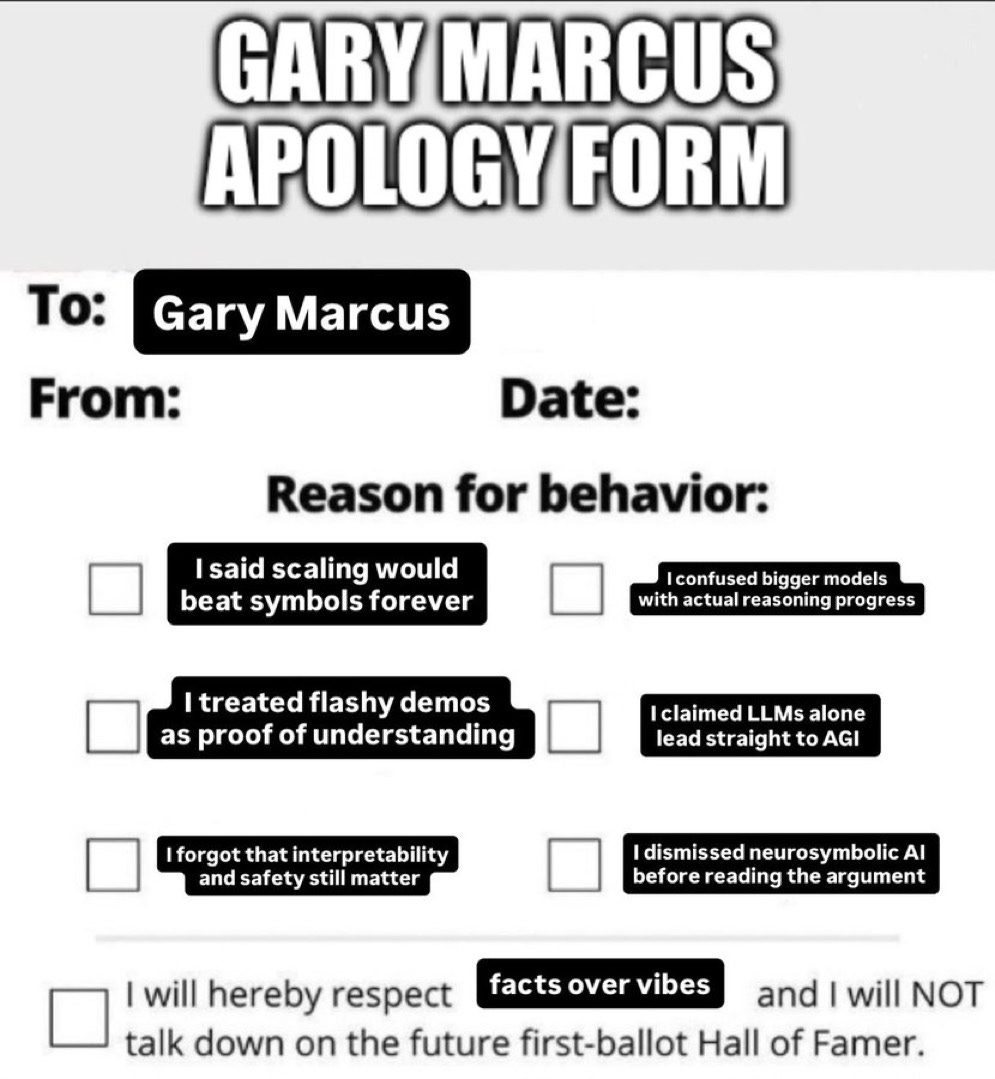

Also, in fashion news, here’s the second, independent Gary Marcus apology form I have seen in less than a week. (I don’t write these things, but it’s hard to resist the temptation to share them.)

Maybe someone should get Sam a copy to fill out?

Also, um, if there is a bubble, what does that mean for the valuations of Nvidia and OpenAI? 🤔