
Enhance AI agents using predictive ML models with Amazon SageMaker AI and Model …

Machine learning (ML) has evolved from an experimental phase to becoming an integral part of business operations. Organizations now actively deploy ML models for precise sales forecasting, customer segmentation, and churn prediction. While traditional ML continues to transform business processes, generative AI has emerged as a revolutionary force, introducing powerful and accessible tools that reshape customer experiences.

Despite generative AI’s prominence, traditional ML solutions remain essential for specific predictive tasks. Sales forecasting, which depends on historical data and trend analysis, is most effectively handled by established ML algorithms including random forests, gradient boosting machines (like XGBoost), autoregressive integrated moving average (ARIMA) models, long short-term memory (LSTM) networks, and linear regression techniques. Traditional ML models, such as K-means and hierarchical clustering, also excel in customer segmentation and churn prediction applications. Although generative AI demonstrates exceptional capabilities in creative tasks such as content generation, product design, and personalized customer interactions, traditional ML models maintain their superiority in data-driven predictions. Organizations can achieve optimal results by using both approaches together, creating solutions that deliver accurate predictions while maintaining cost efficiency.

To achieve this, we show in this post how customers can expand AI agents’ capabilities by integrating predictive ML models and Model Context Protocol (MCP), an open protocol that standardizes how applications provide context to large language models (LLMs), on Amazon SageMaker AI. We demonstrate a comprehensive workflow that enables AI agents to make data-driven business decisions by using ML models hosted on SageMaker AI. Using the Strands Agents SDK (an open source SDK that takes a model-driven approach to building and running AI agents in only a few lines of code) and flexible integration options, including direct endpoint access and MCP, we show you how to build intelligent, scalable AI applications that combine the power of conversational AI with predictive analytics.

Solution overview

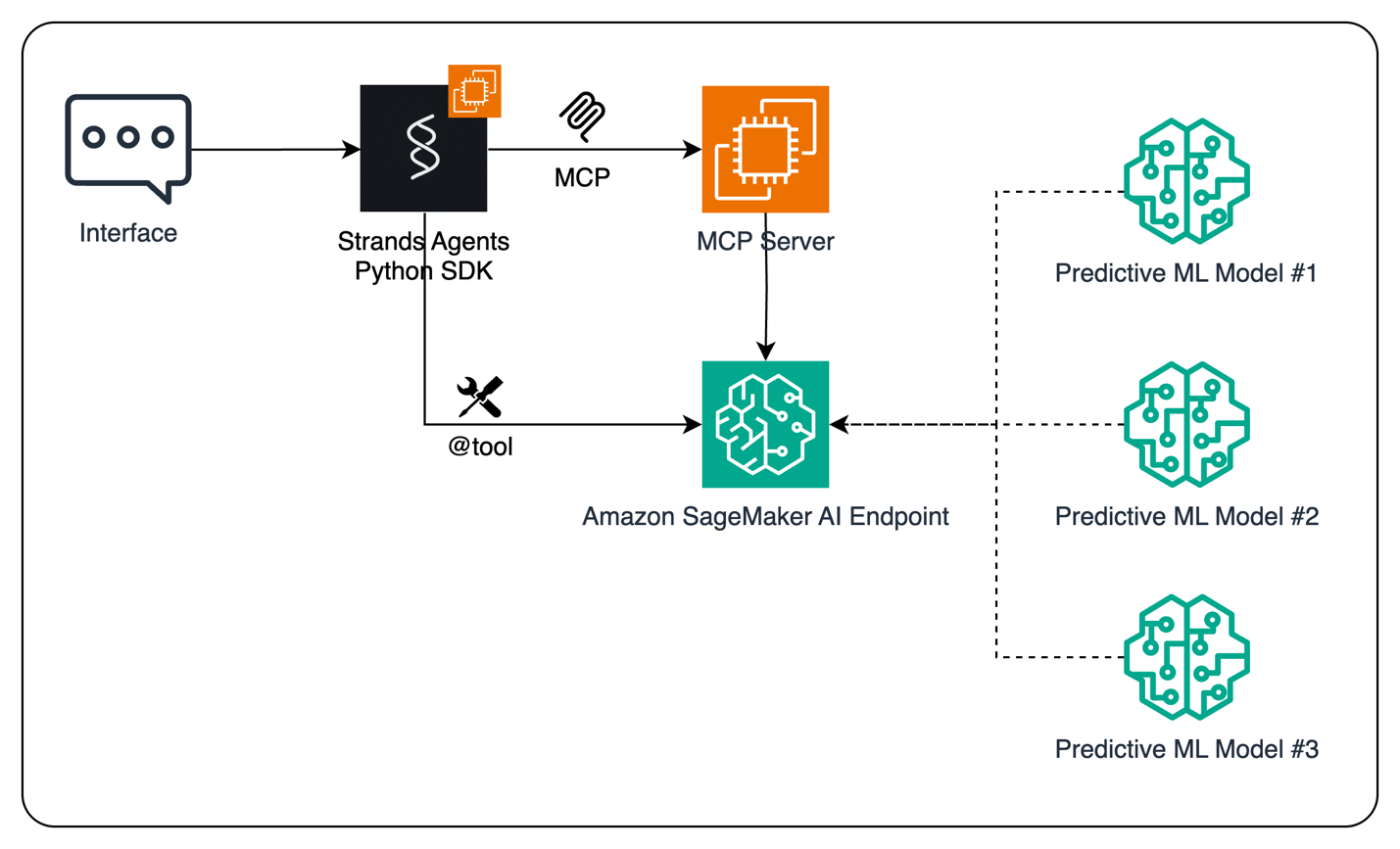

This solution enhances AI agents by integrating them with ML models deployed on Amazon SageMaker AI endpoints, so that the agents can make data-driven business decisions based on ML predictions. An AI agent is an LLM-powered application that uses an LLM as its core “brain” to autonomously observe its environment, plan actions, and execute tasks with minimal human input. It integrates reasoning, memory, and tool use to perform complex, multistep problem-solving by dynamically creating and revising plans, interacting with external systems, and learning from past interactions to optimize outcomes over time. This enables AI agents to go beyond simple text generation, acting as independent entities capable of decision-making and goal-directed actions in diverse real-world and enterprise scenarios.

For this solution, the AI agent is developed using the Strands Agents SDK, which allows for rapid development from simple assistants to complex workflows. Predictive ML models are hosted on Amazon SageMaker AI and are used as tools by the AI agent. This can happen in two ways: agents can invoke SageMaker endpoints directly for more direct access to model inference capabilities, or they can use MCP to facilitate the interaction between AI agents and the ML models. Both options are valid: direct tool invocation requires no additional infrastructure because the tool calling is embedded in the agent code itself, whereas MCP enables dynamic discovery of the tools and decouples agent and tool execution by introducing an additional architectural component, the MCP server. For a scalable and secure implementation of the tool calling logic, we recommend the MCP approach. We nevertheless discuss and implement direct endpoint access as well, so that readers can choose the approach they prefer.

Amazon SageMaker AI offers two methods to host multiple models behind a single endpoint: inference components and multi-model endpoints. This consolidated hosting approach enables efficient deployment of multiple models in one environment, which optimizes computing resources and minimizes response times for model predictions. For demonstration purposes, this post deploys only one model on one endpoint. If you want to learn more about inference components, refer to the Amazon SageMaker AI documentation Shared resource utilization with multiple models. To learn more about multi-model endpoints, refer to the Amazon SageMaker AI documentation Multi-model endpoints.
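To make the difference between the two hosting options concrete, the following sketch builds the keyword arguments for the boto3 `invoke_endpoint` call in each case. `TargetModel` and `InferenceComponentName` are parameters of the SageMaker runtime API; the helper name `build_invoke_kwargs` and the example model and component names are assumptions for illustration and do not come from this post's repository.

```python
import json
from typing import Optional


def build_invoke_kwargs(
    endpoint_name: str,
    payload: list,
    target_model: Optional[str] = None,
    inference_component_name: Optional[str] = None,
) -> dict:
    """Build keyword arguments for sagemaker-runtime invoke_endpoint (hypothetical helper)."""
    if target_model and inference_component_name:
        raise ValueError("Pass a multi-model target or an inference component, not both.")
    kwargs = {
        "EndpointName": endpoint_name,
        "Body": json.dumps(payload),
        "ContentType": "application/json",
        "Accept": "application/json",
    }
    if target_model:
        # Multi-model endpoint: name of the model artifact to route the request to
        kwargs["TargetModel"] = target_model
    if inference_component_name:
        # Inference components: name of the component hosting the model
        kwargs["InferenceComponentName"] = inference_component_name
    return kwargs


# Illustrative names only; pass the result to
# boto3.client("sagemaker-runtime").invoke_endpoint(**kwargs)
kwargs = build_invoke_kwargs("my-endpoint", [[1, 2, 3]], target_model="demand-model.tar.gz")
print(sorted(kwargs))
```

With a single model on a single endpoint, as in this post, neither optional argument is needed.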

Architecture

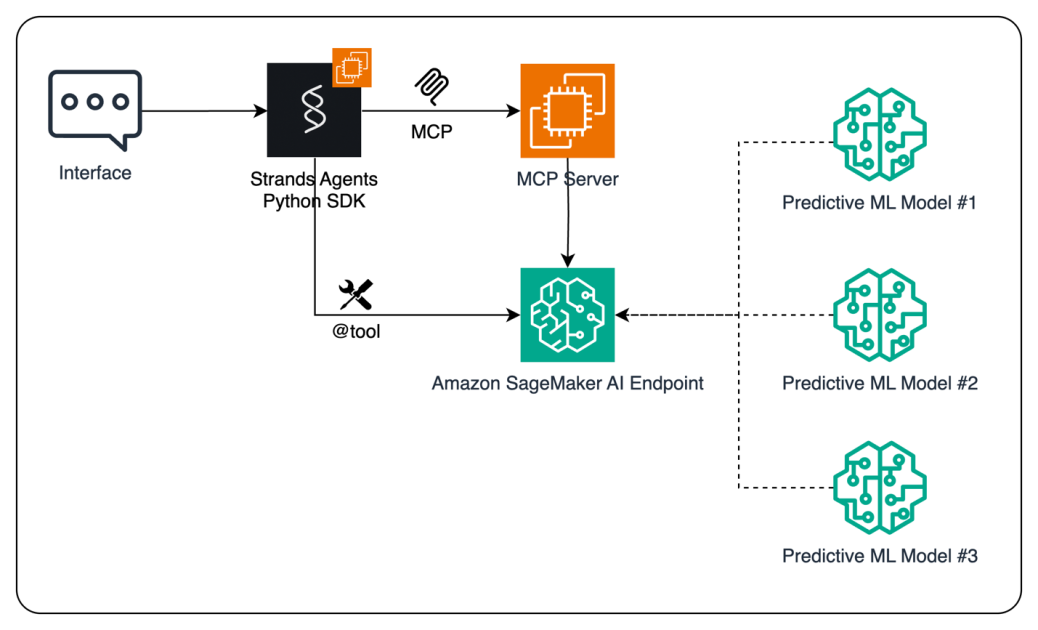

In this post, we define a workflow for empowering AI agents to make data-driven business decisions by invoking predictive ML models using Amazon SageMaker AI. The process begins with a user interacting through an interface, such as a chat-based assistant or application. This input is managed by an AI agent developed using the open source Strands Agents SDK. Strands Agents adopts a model-driven approach, which means developers define agents with only a prompt and a list of tools, facilitating rapid development from simple assistants to complex autonomous workflows.

When the agent is prompted with a request that requires a prediction (for example, “what will be the sales for H2 2025?”), the LLM powering the agent decides to interact with the Amazon SageMaker AI endpoint hosting the ML model. This can happen in two ways: directly using the endpoint as a custom tool of the Strands Agents Python SDK or by calling the tool through MCP. With MCP, the client application can discover the tools exposed by the MCP server, obtain the list of required parameters, and format the request to the Amazon SageMaker inference endpoint. Alternatively, agents can directly invoke SageMaker endpoints using tool annotations (such as @tool), bypassing the MCP server for more direct access to model inference capabilities.

Finally, the prediction generated by the SageMaker hosted model is routed back through the agent and ultimately delivered to the user interface, enabling real-time, intelligent responses.
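The MCP path described above (discover a tool, read its required parameters, format the request) can be sketched in plain Python. In MCP, each tool advertises a JSON Schema under `inputSchema`, and a call is a JSON-RPC `tools/call` request. The tool entry below is a hypothetical example of what a server might return, and `build_tool_call` is an illustrative helper; in practice an MCP client library handles this step for you.

```python
import json


def build_tool_call(tool: dict, arguments: dict, request_id: int = 1) -> str:
    """Check required parameters against the tool schema and build a tools/call request."""
    schema = tool.get("inputSchema", {})
    missing = [name for name in schema.get("required", []) if name not in arguments]
    if missing:
        raise ValueError(f"Missing required parameters: {missing}")
    request = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool["name"], "arguments": arguments},
    }
    return json.dumps(request)


# Hypothetical tool entry, shaped like one item of a tools/list response
example_tool = {
    "name": "invoke_endpoint",
    "description": "Use the model deployed on the Amazon SageMaker AI endpoint to generate predictions.",
    "inputSchema": {
        "type": "object",
        "properties": {"payload": {"type": "array"}},
        "required": ["payload"],
    },
}

print(build_tool_call(example_tool, {"payload": [[1, 2, 3]]}))
```

Because the schema travels with the tool, the agent does not need the parameter list hard-coded, which is what makes the dynamic discovery possible.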

The following diagram illustrates this process. The complete code for this solution is available on GitHub.

Prerequisites

To get started with this solution, make sure you have:

- An AWS account that will contain all your AWS resources.

- An AWS Identity and Access Management (IAM) role to access SageMaker AI. To learn more about how IAM works with SageMaker AI, refer to AWS Identity and Access Management for Amazon SageMaker AI.

- Access to Amazon SageMaker Studio and a SageMaker AI notebook instance or an interactive development environment (IDE) such as PyCharm or Visual Studio Code. We recommend using SageMaker Studio for straightforward deployment and inference.

- Access to accelerated instances (GPUs) for hosting the LLMs.
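For the IAM prerequisite, one possible way to scope the runtime permissions of the agent (or, later, the MCP server) is a policy that allows only `sagemaker:InvokeEndpoint` on a single endpoint. This is a sketch under assumptions: the helper name, Region, and the placeholder account ID `123456789012` are illustrative, and the role you use for training and deployment needs broader permissions than shown here.

```python
import json


def invoke_only_policy(region: str, account_id: str, endpoint_name: str) -> dict:
    """Return an IAM policy document that only allows invoking one endpoint (illustrative)."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": "sagemaker:InvokeEndpoint",
                "Resource": f"arn:aws:sagemaker:{region}:{account_id}:endpoint/{endpoint_name}",
            }
        ],
    }


# Placeholder Region and account ID; replace with your own values
policy = invoke_only_policy("us-east-1", "123456789012", "serverless-xgboost")
print(json.dumps(policy, indent=2))
```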

Solution implementation

In this solution, we implement a complete workflow that demonstrates how to use ML models deployed on Amazon SageMaker AI as specialized tools for AI agents. This approach enables agents to access and use ML capabilities for enhanced decision-making without requiring deep ML expertise. We play the role of a data scientist tasked with building an agent that can predict demand for one product. To achieve this, we train a time-series forecasting model, deploy it, and expose it to an AI agent.
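As a rough illustration of the kind of training data this workflow starts from, the following sketch generates a synthetic daily demand series from a trend, a weekly seasonal cycle, and random noise, and derives day-of-week, month, and quarter features. It uses only the Python standard library; the function name, coefficients, and feature layout are assumptions for illustration and are not the code from this post's repository.

```python
import math
import random
from datetime import date, timedelta


def synthetic_demand(start: date, days: int, seed: int = 42) -> list:
    """Return (features, demand) pairs; features are [day_of_week, month, quarter]."""
    rng = random.Random(seed)  # Seeded for reproducibility
    rows = []
    for i in range(days):
        day = start + timedelta(days=i)
        trend = 50.0 + 0.05 * i  # Slow upward trend
        weekly = 10.0 * math.sin(2 * math.pi * day.weekday() / 7)  # Weekly seasonality
        noise = rng.gauss(0.0, 2.0)  # Random noise
        quarter = (day.month - 1) // 3 + 1
        features = [day.weekday(), day.month, quarter]
        rows.append((features, trend + weekly + noise))
    return rows


rows = synthetic_demand(date(2025, 1, 1), days=14)
print(rows[0][0])  # Features for the first day
```

Each feature row is a plain list, so a batch of rows already has the list-of-lists shape that the endpoint payload uses later in this post.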

The first phase involves training a model using Amazon SageMaker AI. This begins with preparing training data by generating synthetic time series data that incorporates trend, seasonality, and noise components to simulate realistic demand patterns. Following data preparation, feature engineering is performed to extract relevant features from the time series data, including temporal features such as day of week, month, and quarter to effectively capture seasonality patterns. In our example, we train an XGBoost model using the first-party (1P) XGBoost container available in Amazon SageMaker AI to create a regression model capable of predicting future demand values based on historical patterns. Although we use XGBoost for this example because it’s a well-known model used in many use cases, you can use your preferred container and model, according to the problem you’re trying to solve. For brevity, this post doesn’t detail an end-to-end example of training a model using XGBoost. To learn more about this, we suggest checking out the documentation Use XGBoost with the SageMaker Python SDK. Use the following code:

```python
from sagemaker.xgboost.estimator import XGBoost

xgb_estimator = XGBoost(...)
xgb_estimator.fit({'train': train_s3_path, 'validation': val_s3_path})
```

Then, the trained model is packaged and deployed to a SageMaker AI endpoint, making it accessible for real-time inference through API calls:

```python
from sagemaker.deserializers import JSONDeserializer
from sagemaker.serializers import JSONSerializer

predictor = xgb_estimator.deploy(
    initial_instance_count=1,
    instance_type=instance_type,
    serializer=JSONSerializer(),
    deserializer=JSONDeserializer()
)
```

After the model is deployed and ready for inference, you need a way to invoke the endpoint. To do so, write a function like this:

```python
import json

import boto3
import numpy as np

ENDPOINT_NAME = "serverless-xgboost"
REGION = boto3.session.Session().region_name


def invoke_endpoint(payload: list):
    """
    Use the model deployed on the Amazon SageMaker AI endpoint to generate predictions.

    Args:
        payload: a list of lists containing the inputs to generate predictions from

    Returns:
        predictions: a NumPy array of predictions
    """
    sagemaker_runtime = boto3.client("sagemaker-runtime", region_name=REGION)
    response = sagemaker_runtime.invoke_endpoint(
        EndpointName=ENDPOINT_NAME,
        Body=json.dumps(payload),
        ContentType="application/json",
        Accept="application/json"
    )
    predictions = json.loads(response['Body'].read().decode("utf-8"))
    return np.array(predictions)
```

Note that the function invoke_endpoint() has been written with a proper docstring. This is key to making sure that it can be used as a tool by LLMs, because the description is what allows them to choose the right tool for the right task. You can turn this function into a Strands Agents tool with the @tool decorator:

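```python
from strands import tool

# Same docstring and body as invoke_endpoint() above,
# defined under the tool name that the agent uses below
@tool()
def generate_prediction_with_sagemaker(payload: list):
    ...
```

And to use it, pass it to a Strands agent: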

```python
from strands import Agent

agent = Agent(
    model="us.amazon.nova-pro-v1:0",
    tools=[generate_prediction_with_sagemaker]
)

agent(
    "Invoke the endpoint with this input:\n\n"
    f"{test_sample}\n\n"
    "Provide the output in JSON format {'predictions':}"
)
```

As you run this code, you can confirm the output from the agent, which correctly identifies the need to call the tool and executes the function calling loop:

```text
To fulfill the User's request, I need to invoke the Amazon SageMaker endpoint with the provided input data. The input is a list of lists, which is the required format for the 'generate_prediction_with_sagemaker' tool. I will use this tool to get the predictions.

Tool #1: generate_prediction_with_sagemaker

The predictions from the Amazon SageMaker endpoint are as follows:
```

```json
{
  "predictions": [89.8525238, 52.51485062, 58.35247421, 62.79786301, 85.51475525]
}
```

As the agent receives the prediction result from the endpoint tool, it can then use this as an input for other processes. For example, the agent could write the code to create a plot based on these predictions and show it to the user in the conversational UX. It could send these values directly to business intelligence (BI) tools such as Amazon QuickSight or Tableau and automatically update enterprise resource planning (ERP) or customer relationship management (CRM) tools such as SAP or Salesforce.
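If a downstream process needs the numeric values rather than the conversational text, one option is to parse the fenced JSON block out of the agent's response. The following is a minimal sketch using only the standard library. The helper name `extract_predictions` is hypothetical, and it assumes the response contains a fenced `json` block with a `predictions` key, as in the output above; how you obtain the response text from the agent object is not shown here.

```python
import json
import re

FENCE = "`" * 3  # Markdown code fence marker, built here to keep this example readable


def extract_predictions(agent_text: str) -> list:
    """Parse the first fenced JSON block in the text and return its predictions as floats."""
    pattern = re.escape(FENCE) + r"json\s*(\{.*?\})\s*" + re.escape(FENCE)
    match = re.search(pattern, agent_text, flags=re.DOTALL)
    if match is None:
        raise ValueError("No fenced JSON block found in the agent response.")
    return [float(value) for value in json.loads(match.group(1))["predictions"]]


# Sample text shaped like the agent output shown above (values shortened)
sample = (
    "The predictions from the Amazon SageMaker endpoint are as follows:\n"
    + FENCE + "json\n"
    + '{ "predictions": [89.8525238, 52.51485062] }\n'
    + FENCE
)
print(extract_predictions(sample))
```

Parsing free text is inherently brittle, so for production use you may prefer to capture the tool result directly rather than rely on the formatting of the model's answer.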

Connecting to the endpoint through MCP

You can further evolve this pattern by having an MCP server invoke the endpoint rather than the agent itself. This decouples agent and tool logic and improves the security pattern, because only the MCP server needs permission to invoke the endpoint. To achieve this, implement an MCP server using the FastMCP framework that wraps the SageMaker endpoint and exposes it as a tool with a well-defined interface. A tool schema must be specified that clearly defines the input parameters and return values for the tool, so that AI agents can understand and use it correctly. Writing a proper docstring when defining the function achieves this. Additionally, the server must be configured to handle authentication securely, allowing it to access the SageMaker endpoint using AWS credentials or AWS roles. In this example, we run the server on the same compute as the agent and use stdio as the transport. For production workloads, we recommend running the MCP server on its own AWS compute and using transport protocols based on HTTPS (for example, Streamable HTTP). If you want to learn how to deploy MCP servers in a serverless fashion, refer to this official AWS GitHub repository. Here’s an example MCP server:

```python
import os

from mcp.server.fastmcp import FastMCP

mcp = FastMCP("SageMaker App")
ENDPOINT_NAME = os.environ["SAGEMAKER_ENDPOINT_NAME"]


@mcp.tool()
async def invoke_endpoint(payload: list):
    """ Use the model ... """
    [...]


if __name__ == "__main__":
    # Serve the tool over standard input/output
    mcp.run(transport="stdio")
```

Finally, integrate the ML model with the agent framework. This begins with setting up Strands Agents to establish communication with the MCP server and incorporate the ML model as a tool. A comprehensive workflow must be created to determine when and how the agent should use the ML model to enhance its capabilities. The implementation includes programming decision logic that enables the agent to make informed decisions based on the predictions received from the ML model. The phase concludes with testing and evaluation, where the end-to-end workflow is validated by having the agent generate predictions for test scenarios and take appropriate actions based on those predictions.

```python
from mcp import StdioServerParameters
from mcp.client.stdio import stdio_client
from strands import Agent
from strands.tools.mcp import MCPClient

# Create server parameters for stdio connection
server_params = StdioServerParameters(
    command="python3",  # Executable
    args=["server.py"],  # Optional command line arguments
    env={"SAGEMAKER_ENDPOINT_NAME": ""}  # Set to the name of your endpoint
)

# Wrap the stdio transport in a Strands MCP client
stdio_mcp_client = MCPClient(lambda: stdio_client(server_params))

# Create an agent with MCP tools
with stdio_mcp_client:
    # Get the tools from the MCP server
    tools = stdio_mcp_client.list_tools_sync()

    # Create an agent with these tools
    agent = Agent(model="us.amazon.nova-pro-v1:0", tools=tools)

    # Invoke the agent
    agent(
        "Invoke the endpoint with this input:\n\n"
        f"{test_sample}\n\n"
        "Provide the output in JSON format {'predictions':}"
    )
```

Clean up

When you’re done experimenting with the Strands Agents Python SDK and models on Amazon SageMaker AI, delete the endpoint you’ve created to stop incurring unwanted charges. To do that, you can use the AWS Management Console, the SageMaker Python SDK, or the AWS SDK for Python (boto3):

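```python
# SageMaker Python SDK
predictor.delete_model()
predictor.delete_endpoint()

# Alternatively, boto3 (endpoint deletion uses the "sagemaker" client,
# not the "sagemaker-runtime" client used for inference)
sagemaker_client = boto3.client("sagemaker")
sagemaker_client.delete_endpoint(EndpointName=ENDPOINT_NAME)
```

Conclusion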

In this post, we demonstrated how to enhance AI agents’ capabilities by integrating predictive ML models using Amazon SageMaker AI and the MCP. By using the open source Strands Agents SDK and the flexible deployment options of SageMaker AI, developers can create sophisticated AI applications that combine conversational AI with powerful predictive analytics capabilities. The solution we presented offers two integration paths: direct endpoint access through tool annotations and MCP-based integration, giving developers the flexibility to choose the most suitable approach for their specific use cases. Whether you’re building customer service chat assistants that need predictive capabilities or developing complex autonomous workflows, this architecture provides a secure, scalable, and modular foundation for your AI applications. As organizations continue to seek ways to make their AI agents more intelligent and data-driven, the combination of Amazon SageMaker AI, MCP, and the Strands Agents SDK offers a powerful solution for building the next generation of AI-powered applications.

For readers unfamiliar with connecting MCP servers to workloads running on Amazon SageMaker AI, we suggest Extend large language models powered by Amazon SageMaker AI using Model Context Protocol in the AWS Artificial Intelligence Blog, which details the flow and the steps required to build agentic AI solutions with Amazon SageMaker AI.

To learn more about the AWS commitment to the MCP standard, we recommend reading Open Protocols for Agent Interoperability Part 1: Inter-Agent Communication on MCP in the AWS Open Source Blog, where we announced that AWS is joining the steering committee for MCP to make sure developers can build breakthrough agentic applications without being tied to one standard. To learn more about how to use MCP with other technologies from AWS, such as Amazon Bedrock Agents, we recommend reading Harness the power of MCP servers with Amazon Bedrock Agents in the AWS Artificial Intelligence Blog. Finally, the AWS Solutions Library provides a way to securely deploy and scale MCP servers on AWS in Guidance for Deploying Model Context Protocol Servers on AWS.

About the authors

Saptarshi Banerjee serves as a Senior Solutions Architect at AWS, collaborating closely with AWS Partners to design and architect mission-critical solutions. With a specialization in generative AI, AI/ML, serverless architecture, Next-Gen Developer Experience tools and cloud-based solutions, Saptarshi is dedicated to enhancing performance, innovation, scalability, and cost-efficiency for AWS Partners within the cloud ecosystem.


Davide Gallitelli is a Senior Worldwide Specialist Solutions Architect for Generative AI at AWS, where he empowers global enterprises to harness the transformative power of AI. Based in Europe but with a worldwide scope, Davide partners with organizations across industries to architect custom AI agents that solve complex business challenges using AWS ML stack. He is particularly passionate about democratizing AI technologies and enabling teams to build practical, scalable solutions that drive organizational transformation.
