
Agent Factory: Building your first AI agent with the tools to deliver real-world outcomes

Agents are only as capable as the tools you give them—and only as trustworthy as the governance behind those tools.

This blog post is the second in a six-part blog series called Agent Factory, which shares best practices, design patterns, and tools to help guide you through adopting and building agentic AI.

In the previous blog, we explored five common design patterns of agentic AI—from tool use and reflection to planning, multi-agent collaboration, and adaptive reasoning. These patterns show how agents can be structured to achieve reliable, scalable automation in real-world environments.

Across the industry, we’re seeing a clear shift. Early experiments focused on single-model prompts and static workflows. Now, the conversation is about extensibility—how to give agents a broad, evolving set of capabilities without locking into one vendor or rewriting integrations for each new need. Platforms are competing on how quickly developers can:

- Integrate with hundreds of APIs, services, data sources, and workflows.

- Reuse those integrations across different teams and runtime environments.

- Maintain enterprise-grade control over who can call what, when, and with what data.

The lesson from the past year of agentic AI evolution is simple: agents are only as capable as the tools you give them—and only as trustworthy as the governance behind those tools.

Extensibility through open standards

In the early stages of agent development, integrating tools was often a bespoke, platform-specific effort. Each framework had its own conventions for defining tools, passing data, and handling authentication. This created several consistent blockers:

- Duplication of effort—the same internal API had to be wrapped differently for each runtime.

- Brittle integrations—small changes to schemas or endpoints could break multiple agents at once.

- Limited reusability—tools built for one team or environment were hard to share across projects or clouds.

- Fragmented governance—different runtimes enforced different security and policy models.

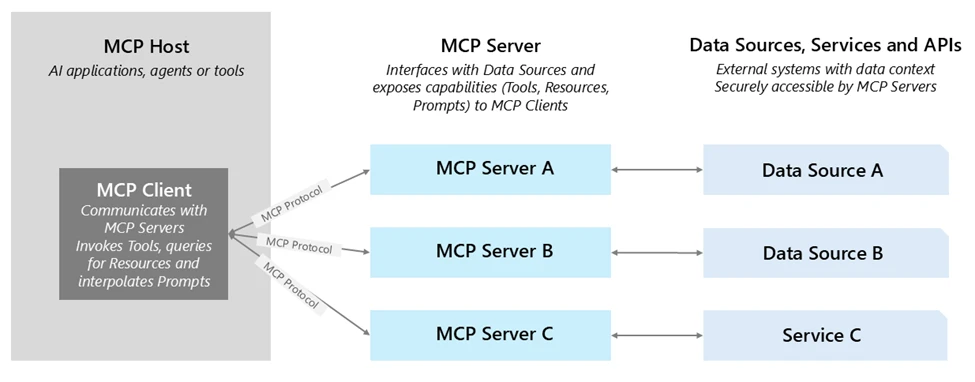

As organizations began deploying agents across hybrid and multi-cloud environments, these inefficiencies became major obstacles. Teams needed a way to standardize how tools are described, discovered, and invoked, regardless of the hosting environment.

That’s where open protocols entered the conversation. Just as HTTP transformed the web by creating a common language for clients and servers, open protocols for agents aim to make tools portable, interoperable, and easier to govern.

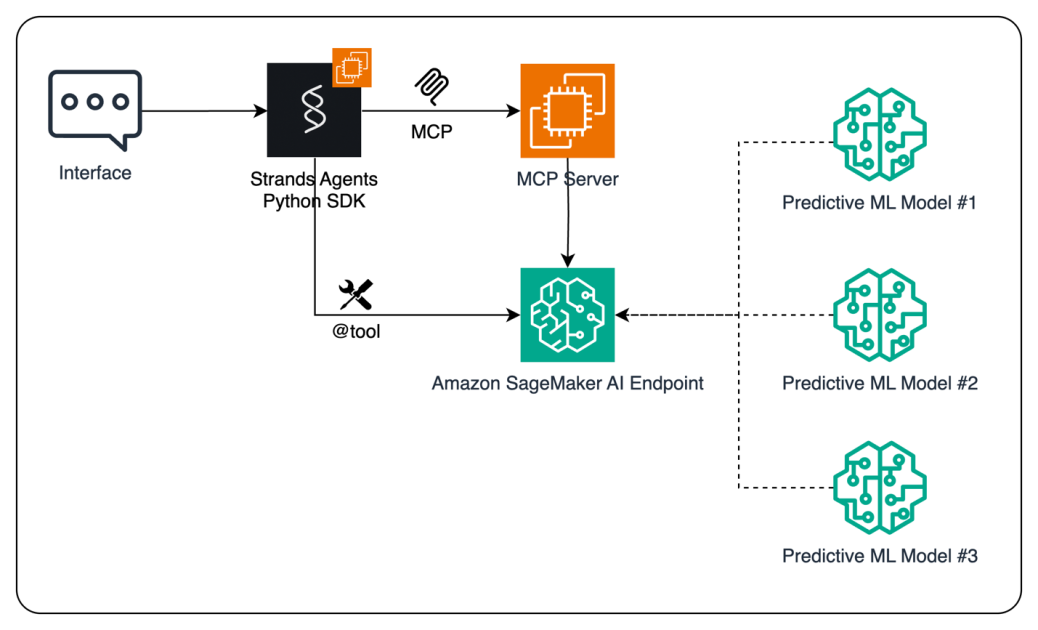

One of the most promising examples is the Model Context Protocol (MCP)—a standard for defining tool capabilities and I/O schemas so any MCP-compliant agent can dynamically discover and invoke them. With MCP:

- Tools are self-describing, making discovery and integration faster.

- Agents can find and use tools at runtime without manual wiring.

- Tools can be hosted anywhere—on-premises, in a partner cloud, or in another business unit—without losing governance.
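To make "self-describing" concrete, here is a minimal, dependency-free sketch of the two MCP operations an agent relies on: `tools/list` for discovery and `tools/call` for invocation. The message shapes (`name`, `description`, `inputSchema`, and a `content` list with an `isError` flag in results) follow the MCP specification in simplified form. The `get_order_status` tool and the in-process `handle` function are hypothetical stand-ins, not the MCP SDK or an Azure AI Foundry API. Transport, session initialization, full JSON Schema validation, and spec-exact error handling are all omitted.

```python
# Hypothetical tool, described the way MCP servers advertise tools:
# a name, a human-readable description, and a JSON Schema for its input.
GET_ORDER_STATUS = {
    "name": "get_order_status",
    "description": "Look up the fulfillment status of a customer order.",
    "inputSchema": {
        "type": "object",
        "properties": {"order_id": {"type": "string"}},
        "required": ["order_id"],
    },
}


def get_order_status(order_id: str) -> str:
    # Stand-in implementation; a real tool would call a backend system.
    return f"Order {order_id}: shipped"


# Registry mapping tool name -> (description, implementation).
TOOLS = {GET_ORDER_STATUS["name"]: (GET_ORDER_STATUS, get_order_status)}


def text_result(text: str, is_error: bool) -> dict:
    # Tool results carry a list of content items plus an error flag.
    return {"content": [{"type": "text", "text": text}], "isError": is_error}


def handle(request: dict) -> dict:
    """Answer a simplified JSON-RPC 2.0 request for tools/list or tools/call."""
    rid, method = request.get("id"), request.get("method")
    if method == "tools/list":
        # Discovery: the agent learns what exists and how to call it.
        result = {"tools": [spec for spec, _ in TOOLS.values()]}
    elif method == "tools/call":
        params = request.get("params", {})
        entry = TOOLS.get(params.get("name"))
        args = params.get("arguments", {})
        if entry is None:
            result = text_result(f"unknown tool: {params.get('name')}", True)
        else:
            spec, fn = entry
            # Only "required" is checked here, not the full schema.
            missing = [k for k in spec["inputSchema"].get("required", []) if k not in args]
            result = (text_result(f"missing arguments: {missing}", True) if missing
                      else text_result(fn(**args), False))
    else:
        # -32601 is the standard JSON-RPC "Method not found" code.
        return {"jsonrpc": "2.0", "id": rid,
                "error": {"code": -32601, "message": "Method not found"}}
    return {"jsonrpc": "2.0", "id": rid, "result": result}


# Agent side: discover tools at runtime, then call one by name.
listing = handle({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
names = [tool["name"] for tool in listing["result"]["tools"]]
reply = handle({"jsonrpc": "2.0", "id": 2, "method": "tools/call",
                "params": {"name": names[0], "arguments": {"order_id": "A-17"}}})
print(names, reply["result"]["content"][0]["text"])
```

The agent never hard-codes `get_order_status`; it picks the tool from the listing, which is what lets tools be added or moved without rewiring each agent.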

Azure AI Foundry supports MCP, enabling you to bring existing MCP servers directly into your agents. This gives you the benefits of open interoperability plus enterprise-grade security, observability, and management. Learn more about MCP at MCP Dev Days.

Once you have a standard for portability through open protocols like MCP, the next question becomes: what kinds of tools should your agents have, and how do you organize them so they can deliver value quickly while staying adaptable?

In Azure AI Foundry, we think of this as building an enterprise toolchain—a layered set of capabilities that balance speed (getting something valuable running today), differentiation (capturing what makes your business unique), and reach (connecting across all the systems where work actually happens).

1. Built-in tools for rapid value: Azure AI Foundry includes ready-to-use tools for common enterprise needs, such as searching across SharePoint and data lakes, executing Python for data analysis, performing multi-step web research with Bing, and triggering browser automation tasks. These aren’t just conveniences; they let teams stand up functional, high-value agents in days instead of weeks, without the friction of early integration work.

2. Custom tools for your competitive edge: Every organization has proprietary systems and processes that off-the-shelf tools can’t replicate. Azure AI Foundry makes it straightforward to wrap these as agentic AI tools, whether they’re APIs from your ERP, a manufacturing quality control system, or a partner’s service. Exposed through OpenAPI or MCP, these tools become portable and discoverable across teams, projects, and even clouds, while still benefiting from Foundry’s identity, policy, and observability layers.

3. Connectors for maximum reach: Through Azure Logic Apps, Foundry can connect agents to over 1,400 SaaS and on-premises systems—CRM, ERP, ITSM, data warehouses, and more. This dramatically reduces integration lift, allowing you to plug into existing enterprise processes without building every connector from scratch.
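For custom tools, much of the wrapping work is already done if an API has an OpenAPI description: each operation carries a name (`operationId`), a summary, and typed parameters, which is exactly what an agent needs to discover and call it. The sketch below, assuming a hypothetical inventory endpoint from an ERP system, maps OpenAPI operations onto tool definitions of the shape MCP uses. It illustrates the mapping only and is not a description of how Foundry registers OpenAPI tools internally; request bodies, `$ref` resolution, path-level parameters, and authentication are deliberately left out.

```python
# Hypothetical OpenAPI 3.0 fragment for an internal ERP API (illustrative only).
ERP_SPEC = {
    "openapi": "3.0.3",
    "info": {"title": "Inventory API", "version": "1.0.0"},
    "paths": {
        "/items/{sku}/stock": {
            "get": {
                "operationId": "getStockLevel",
                "summary": "Return on-hand stock for a SKU.",
                "parameters": [
                    {"name": "sku", "in": "path", "required": True,
                     "schema": {"type": "string"}},
                    {"name": "warehouse", "in": "query", "required": False,
                     "schema": {"type": "string"}},
                ],
            }
        }
    },
}

# Keys in an OpenAPI Path Item object that denote operations.
HTTP_METHODS = {"get", "put", "post", "delete", "patch", "head", "options", "trace"}


def openapi_to_tools(spec: dict) -> list:
    """Turn each OpenAPI operation into a self-describing tool definition."""
    tools = []
    for path, item in spec["paths"].items():
        for method, op in item.items():
            if method not in HTTP_METHODS:
                continue  # skip path-level keys such as "parameters" (ignored here)
            params = op.get("parameters", [])
            tools.append({
                "name": op["operationId"],
                "description": op.get("summary", f"{method.upper()} {path}"),
                "inputSchema": {
                    "type": "object",
                    "properties": {p["name"]: p["schema"] for p in params},
                    "required": [p["name"] for p in params if p.get("required")],
                },
            })
    return tools


tools = openapi_to_tools(ERP_SPEC)
print(tools[0]["name"], tools[0]["inputSchema"]["required"])
```

Because the contract lives in the spec rather than in agent code, a schema change is made once and every consumer that reads the spec picks it up.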

One example of this toolchain in action comes from NTT DATA, which built agents in Azure AI Foundry that integrate Microsoft Fabric Data Agent alongside other enterprise tools. These agents let employees across HR, operations, and other functions interact naturally with data, surface real-time insights, and act on them. The result was a 50% reduction in time-to-market and intuitive, self-service access to enterprise intelligence for non-technical users.

Extensibility must be paired with governance to move from prototype to enterprise-ready automation. Azure AI Foundry addresses this with a secure-by-default approach to tool management:

- Authentication and identity in built-in connectors: Enterprise-grade connectors, such as SharePoint and Microsoft Fabric, already use on-behalf-of (OBO) authentication. When an agent invokes these tools, Foundry ensures that the call respects the end user’s permissions via managed Entra IDs, preserving existing authorization rules. With Microsoft Entra Agent ID, every agentic project created in Azure AI Foundry automatically appears in an agent-specific application view within the Microsoft Entra admin center. This gives security teams a unified directory view of all the agents and agent applications they need to manage across Microsoft, and marks the first step toward standardizing governance for AI agents company-wide. While Entra ID is supported natively, Azure AI Foundry also integrates with external identity systems: through federation, customers who use providers such as Okta or Google Identity can still authenticate agents and users to call tools securely.

- Custom tools with OpenAPI and MCP: OpenAPI-specified tools connect using managed identities, API keys, or unauthenticated access. These tools can be registered directly in Foundry and follow standard API design best practices. Foundry is also expanding MCP security to include stored credentials, project-level managed identities, third-party OAuth flows, and secure private networking, advancing toward a fully enterprise-grade, end-to-end MCP integration model.

- API governance with Azure API Management (APIM): APIM provides a powerful control plane for managing tool calls: it enables centralized publishing, policy enforcement (authentication, rate limits, payload validation), and monitoring. Additionally, you can deploy self-hosted gateways within VNets or on-prem environments to enforce enterprise policies close to backend systems. Complementing this, Azure API Center acts as a centralized, design-time API inventory and discovery hub—allowing teams to register, catalog, and manage private MCP servers alongside other APIs. These capabilities provide the same governance you expect for your APIs—extended to agentic AI tools without additional engineering.

- Observability and auditability: Every tool invocation in Foundry—whether internal or external—is traced with step-level logging. This includes identity, tool name, inputs, outputs, and outcomes, enabling continuous reliability monitoring and simplified auditing.
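In Azure, these controls are configured in APIM policies and Foundry tracing rather than hand-coded, but the sequence of checks is easy to see in a small sketch. The hypothetical in-process `Gateway` below binds each call to a caller identity, enforces a per-tool scope (least privilege, deny by default), a fixed-window rate limit, and a crude payload size cap, then records a step-level trace with identity, tool name, inputs, output, and outcome. It is an illustration of the pattern under those assumptions, not APIM's policy engine or Foundry's tracing API; in a real deployment the identity would come from a validated Entra ID token, not a plain object.

```python
import time
import uuid
from dataclasses import dataclass, field


@dataclass
class Caller:
    # Identity the call is bound to (the end user under OBO, or the agent itself).
    subject: str
    scopes: set


@dataclass
class Gateway:
    """Hypothetical gateway: authorization, rate limiting, payload check, tracing."""
    required_scope: dict                  # tool name -> scope needed to call it
    max_calls_per_minute: int = 60
    max_payload_chars: int = 4096
    _windows: dict = field(default_factory=dict)  # subject -> (window_start, count)
    traces: list = field(default_factory=list)

    def _allow_rate(self, subject: str, now: float) -> bool:
        # Fixed one-minute window per caller.
        start, count = self._windows.get(subject, (now, 0))
        if now - start >= 60:
            start, count = now, 0
        if count >= self.max_calls_per_minute:
            return False
        self._windows[subject] = (start, count + 1)
        return True

    def call(self, caller: Caller, tool: str, fn, args: dict):
        outcome, output = "ok", None
        # Unregistered tools map to None, which no caller holds: deny by default.
        if self.required_scope.get(tool) not in caller.scopes:
            outcome = "denied: missing scope"
        elif not self._allow_rate(caller.subject, time.monotonic()):
            outcome = "denied: rate limit"
        elif len(repr(args)) > self.max_payload_chars:  # stand-in for payload validation
            outcome = "denied: payload too large"
        else:
            try:
                output = fn(**args)
            except Exception as exc:  # record failures instead of hiding them
                outcome = f"error: {exc}"
        # Step-level trace: who called what, with which inputs, and what happened.
        self.traces.append({"trace_id": str(uuid.uuid4()), "ts": time.time(),
                            "subject": caller.subject, "tool": tool, "inputs": args,
                            "output": output, "outcome": outcome})
        return outcome, output


def lookup(order_id: str) -> str:
    return f"Order {order_id}: shipped"


gw = Gateway(required_scope={"get_order_status": "orders.read"}, max_calls_per_minute=2)
alice = Caller("alice@example.com", {"orders.read"})
outcomes = [gw.call(alice, "get_order_status", lookup, {"order_id": "A-17"})[0]
            for _ in range(3)]
print(outcomes)  # ['ok', 'ok', 'denied: rate limit']
```

Keeping these checks in one gateway, rather than inside each tool, is what lets policy change without touching tool code; denied calls are traced too, so audits see attempts as well as successes.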

Enterprise-grade management ensures tools are secure and observable—but success also depends on how you design and operate them from day one. Drawing on Azure AI Foundry guidance and customer experience, a few principles stand out:

- Start with the contract. Treat every tool like an API product. Define clear inputs, outputs, and error behaviors, and keep schemas consistent across teams. Avoid overloading a single tool with multiple unrelated actions; smaller, single-purpose tools are easier to test, monitor, and reuse.

- Choose the right packaging. For proprietary APIs, decide early whether OpenAPI or MCP best fits your needs. OpenAPI tools are straightforward for well-documented REST APIs, while MCP tools excel when portability and cross-environment reuse are priorities.

- Centralize governance. Publish custom tools behind Azure API Management or a self-hosted gateway so authentication, throttling, and payload inspection are enforced consistently. This keeps policy logic out of tool code and makes changes easier to roll out.

- Bind every action to identity. Always know which user or agent is invoking the tool. For built-in connectors, leverage identity passthrough or OBO. For custom tools, use Entra ID or the appropriate API key/credential model, and apply least-privilege access.

- Instrument early. Add tracing, logging, and evaluation hooks before moving to production. Early observability lets you track performance trends, detect regressions, and tune tools without downtime.
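The first principle, starting with the contract, fits in a few lines. Assuming a hypothetical `issue_refund` tool, the sketch below gives the tool exactly one job, typed inputs and outputs, and an explicit error type with stable codes instead of free-form exceptions, so it can be tested, monitored, and reused on its own. The names and error codes are illustrative, not a Foundry or MCP convention.

```python
from dataclasses import dataclass
from typing import Union


@dataclass(frozen=True)
class RefundRequest:
    order_id: str
    amount_cents: int  # integer cents avoid floating-point rounding


@dataclass(frozen=True)
class RefundIssued:
    refund_id: str
    amount_cents: int


@dataclass(frozen=True)
class ToolError:
    # Stable, documented codes let agents and callers react predictably.
    code: str  # e.g. "INVALID_ARGUMENT"
    message: str


def issue_refund(req: RefundRequest) -> Union[RefundIssued, ToolError]:
    """Single-purpose tool: issues one refund and does nothing else."""
    if not req.order_id:
        return ToolError("INVALID_ARGUMENT", "order_id is required")
    if req.amount_cents <= 0:
        return ToolError("INVALID_ARGUMENT", "amount_cents must be positive")
    # Hypothetical backend call, replaced here by a deterministic stand-in.
    return RefundIssued(refund_id=f"rf-{req.order_id}", amount_cents=req.amount_cents)


print(issue_refund(RefundRequest("A-17", 1299)))
print(issue_refund(RefundRequest("A-17", 0)).code)
```

Returning errors as values makes every failure mode part of the published contract, which is what lets monitoring count them and lets an agent decide whether to retry, ask the user, or stop.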

Following these practices ensures that the tools you integrate today remain secure, portable, and maintainable as your agent ecosystem grows.

What’s next

In part three of the Agent Factory series, we’ll focus on observability for AI agents—how to trace every step, evaluate tool performance, and monitor agent behavior in real time. We’ll cover the built-in capabilities in Azure AI Foundry, integration patterns with Azure Monitor, and best practices for turning telemetry into continuous improvement.

Did you miss the first post in the series? Check it out: The new era of agentic AI—common use cases and design patterns.