
Artificial intelligence model for predicting early biochemical recurrence of prostate cancer …

Introduction

Prostate cancer (PCa) is a major public health issue in developed countries [1]. Radical prostatectomy (RP) is associated with a lower incidence of disease progression than active monitoring [2,3]. However, one-third of patients experience biochemical recurrence (BCR) after the procedure [4,5]. Previous studies have shown that the survival rate of patients with BCR is 20% lower after a ten-year follow-up, with a higher risk of metastasis and castration resistance [5,6].

The European Association of Urology and the European Society for Radiotherapy and Oncology recommend that physicians discuss the option of radiotherapy with patients who have adverse pathologic findings, as early treatment after RP may reduce mortality in these patients [7]. Nevertheless, not all patients benefit from radiotherapy, because some will never experience BCR [8]. Consequently, various risk models have been developed to predict PCa prognosis in patients who undergo RP [9,10].

D’Amico performed the first risk estimate using Cox proportional hazards regression analysis and found differences among low-, intermediate-, and high-risk patients in PSA outcomes 5 years after treatment [9]. Kattan developed a nomogram, also based on Cox proportional hazards regression, to predict treatment failure 5 years after RP in patients with localized PCa. Using preoperative PSA, cT stage, and biopsy Gleason score, he observed that patients with a total score higher than 180 had only a 5% probability of remaining free of BCR 5 years after treatment [11]. Cooperberg presented the CAPRA score, also derived using Cox proportional hazards regression. He assigned points to PSA level, Gleason score, cT stage, age, and percentage of biopsy involvement, and found that 5-year recurrence-free survival was only 8% among patients with a high score [12].

Although the models mentioned above have been validated in different cohorts and outperform manual decision-making, their performance remains suboptimal, with an area under the receiver operating characteristic (ROC) curve (AUC) of 0.72–0.75 [13,14]. New, highly accurate tools are therefore needed to identify patients at risk of BCR, so that they can receive effective treatment that maximizes benefits and reduces risks. The need to classify patients with cancer correctly led us to study the application of machine learning (ML) to predict disease progression and to support the selection of appropriate treatment regimens. The ability of ML tools to detect key features in complex datasets underpins the rationale for this study [15].

The main objective of this study was to build an ML model capable of predicting BCR in patients with PCa who underwent robot-assisted laparoscopic radical prostatectomy (RALP), with over 80% accuracy and an AUC higher than 0.85. The secondary objectives were to identify the most important variables, preprocess and fine-tune the data, determine the appropriate training pattern, train and test different algorithms, compare their predictive performance, and complete the validation stage. Finally, we compared the best-performing ML model with CAPRA-S, a statistical model used in urology, because of the similarity in the variables analyzed and in the timing of recurrence.

Materials and methods

This analytical observational study was conducted in a retrospective cohort of male patients diagnosed with localized PCa who underwent RALP between January 2008 and December 2020 and were registered in the Hospital Italiano de Buenos Aires database. Patients who underwent RALP with or without lymphadenectomy, and who did not receive hormone blockade and/or radiotherapy, were included; those who experienced BCR formed the recurrence group. BCR was defined as PSA ≥ 0.2 ng/ml after RP. Patients were followed up for a minimum of 2 years to confirm the absence of recurrence. To optimize model training, a class-balanced database was created: 476 patients with BCR were selected, and undersampling was applied to the 1,827 patients without recurrence, retaining 548 of them (1,024 patients in total).
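The class-balancing step can be sketched as follows. This is a minimal illustration assuming simple random undersampling without replacement; the study does not state which undersampling technique it used, and the function name `undersample_majority`, the fixed seed, and the placeholder patient IDs are hypothetical.

```python
import random


def undersample_majority(majority, target_size, seed=42):
    """Randomly keep `target_size` records from the majority class, without replacement.

    Assumption: plain random undersampling; the source does not name its technique.
    """
    if target_size > len(majority):
        raise ValueError("target_size cannot exceed the majority-class size")
    rng = random.Random(seed)  # fixed seed so the subsample is reproducible
    return rng.sample(majority, target_size)


# Hypothetical placeholder IDs sized like the cohorts described above.
bcr_patients = [f"bcr_{i}" for i in range(476)]
no_bcr_patients = [f"nobcr_{i}" for i in range(1827)]

kept_no_bcr = undersample_majority(no_bcr_patients, 548)

# Label 1 = BCR, 0 = no recurrence.
balanced = [(pid, 1) for pid in bcr_patients] + [(pid, 0) for pid in kept_no_bcr]
print(len(balanced))  # 1024
```

Random undersampling discards majority-class records, so fixing the seed at least makes the retained subset reproducible across runs.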

The following independent variables were analyzed: age; comorbidities (diabetes [DBT], hypertension, and body mass index); postsurgical tumor histopathology findings (Gleason score, pathological stage, percentage of tumor in the prostate gland, and tumor volume in the region of interest [ROI]); lymphovascular invasion; surgical margins; prostate capsule penetration; extraprostatic disease; positive lymph nodes; digital rectal examination; type of surgery (RALP with or without lymph node dissection); year of surgery; neurovascular bundle preservation; PSA before RP; PSA nadir; D’Amico risk group; and MRI before RP with PI-RADS score. The class (outcome) variable was BCR (Online Appendix 1, Table S-I).

Data preprocessing and automatic attribute selection were performed on the resulting tabular database to obtain the optimal input pattern (Online Appendix 1). Five classifiers were used to build the AI models: artificial neural networks (ANN), support vector machines (SVM), random forests (RF), deep neural networks (DNN), and extreme gradient boosting (XGBoost) (Online Appendix 2, Table S-III). All classifiers used the same attributes and input patterns. The cases were split into training (70%) and test (30%) sets. Additionally, tenfold cross-validation (CV) was applied to assess the robustness of the models and the consistency of their performance across data subsets, and hyperparameters were tuned using grid search. For each model, accuracy (percentage of correctly classified patients), precision, sensitivity (recall), and AUC were assessed. Decision curve analysis was performed to assess the net clinical benefit of treating patients according to each model, compared with the “treat all” and “treat none” strategies. Calibration curves were used to measure the agreement between the models’ predicted probabilities and observed outcomes.
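The evaluation scheme described above can be sketched in plain Python. This is a minimal illustration under stated assumptions: the source does not say whether the 70/30 split or the CV folds were stratified by outcome, so stratification, the helper names `stratified_split` and `stratified_kfold`, and the seeds are hypothetical choices. Applied to the balanced cohort of 476 + 548 patients, a 30% test fraction yields 307 test cases, which matches the number of test cases reported in the Results.

```python
import random


def stratified_split(labels, test_fraction=0.3, seed=0):
    """Split indices into (train, test), keeping the class ratio in both parts."""
    rng = random.Random(seed)
    train, test = [], []
    for cls in sorted(set(labels)):
        idx = [i for i, y in enumerate(labels) if y == cls]
        rng.shuffle(idx)
        n_test = round(len(idx) * test_fraction)
        test.extend(idx[:n_test])
        train.extend(idx[n_test:])
    return sorted(train), sorted(test)


def stratified_kfold(indices, labels, k=10, seed=0):
    """Yield (train, validation) index lists for k stratified folds over `indices`."""
    rng = random.Random(seed)
    folds = [[] for _ in range(k)]
    for cls in sorted({labels[i] for i in indices}):
        members = [i for i in indices if labels[i] == cls]
        rng.shuffle(members)
        for j, i in enumerate(members):
            folds[j % k].append(i)  # deal each class round-robin across folds
    for f in range(k):
        validation = sorted(folds[f])
        train = sorted(i for g in range(k) if g != f for i in folds[g])
        yield train, validation


labels = [1] * 476 + [0] * 548  # 1 = BCR, 0 = no recurrence
train_idx, test_idx = stratified_split(labels)
print(len(train_idx), len(test_idx))  # 717 307

# A grid search would score each hyperparameter setting by its mean
# validation metric across these ten folds, using only the training indices.
cv_folds = list(stratified_kfold(train_idx, labels, k=10))
```

Keeping the folds inside the training indices means the 30% test set is never seen during hyperparameter tuning.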

To assess the applicability of the model, a validation stage was conducted with 96 patients who were not included in model development. In addition, owing to the similarity in the analyzed variables and in the timing of recurrence, the performance of the best AI model was compared with that of CAPRA-S [12], a traditional prediction score commonly used in urology to estimate disease recurrence among patients with PCa.

Python (https://www.python.org/) and R (https://www.R-project.org/) were used for data preprocessing, exploratory statistical analysis, attribute selection, and classifier construction. The R packages used included dplyr, magrittr, caret, randomForest, xgboost, and pROC; the Python libraries included pandas, NumPy, Matplotlib, scikit-learn, TensorFlow, and Keras. From a statistical standpoint, the risk associated with each independent variable was evaluated against the class variable: the odds ratio (OR) was assessed cross-sectionally, and risk variation over time was then assessed using the hazard ratio (HR). In both cases, 95% confidence intervals were calculated, and the level of statistical significance was set at α = 0.05. Patients enrolled in the study voluntarily signed an informed consent form regarding the use of their data in accordance with the principles of the Declaration of Helsinki, complying with the standards and protocols for conducting human research established by the Ethics Committee of the Italian Hospital of Buenos Aires. This study adhered to the TRIPOD+AI guidelines for transparent reporting and the PROBAST-AI tool for assessing risk of bias in diagnostic and prognostic prediction models based on artificial intelligence [16,17].
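The cross-sectional OR analysis can be illustrated for a single binary variable. This is a minimal sketch assuming the common Wald (Woolf) log-scale confidence interval; the source does not state which CI method was used, and the function name and the 2×2 counts are hypothetical, not data from this study. The time-dependent HR would instead require a Cox model (for example, `coxph` in R's survival package), which is not shown here.

```python
import math


def odds_ratio_ci(a, b, c, d, z=1.959964):
    """Odds ratio with a Wald (Woolf, log-scale) 95% confidence interval.

    2x2 layout (hypothetical variable coding):
        a = factor present, BCR      b = factor present, no BCR
        c = factor absent,  BCR      d = factor absent,  no BCR
    """
    if min(a, b, c, d) == 0:
        raise ValueError("zero cell: apply a continuity correction first")
    odds_ratio = (a * d) / (b * c)
    se_log_or = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)  # SE of ln(OR)
    lower = math.exp(math.log(odds_ratio) - z * se_log_or)
    upper = math.exp(math.log(odds_ratio) + z * se_log_or)
    return odds_ratio, lower, upper


# Hypothetical counts, not data from this study.
or_, lower, upper = odds_ratio_ci(120, 60, 356, 488)
significant = lower > 1 or upper < 1  # CI excludes 1, i.e. Wald p < 0.05
print(f"OR = {or_:.2f} (95% CI {lower:.2f}-{upper:.2f}), significant: {significant}")
```

With α = 0.05, a Wald interval that excludes 1 corresponds to a significant Wald test for the same variable.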

Results

We started with a tabular database containing 25 independent variables and one temporal variable (time in months until relapse). The mean age of the non-relapsed group was 60 ± 6.4 years, and that of the relapsed group was 61.1 ± 6.5 years. The median time to relapse was 12 months.

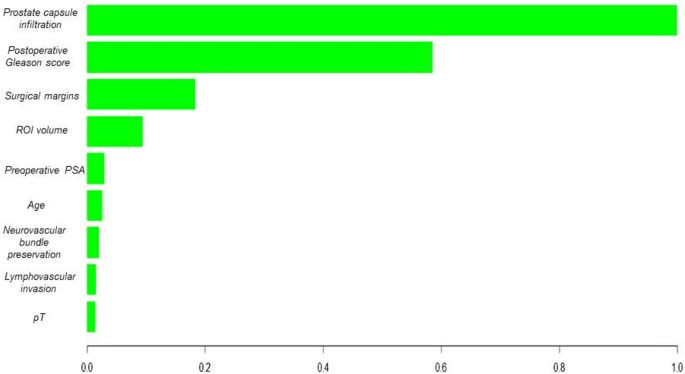

Of the 25 independent variables, we excluded those that showed neither clinical relevance (based on expert knowledge) nor statistical significance, as well as those deemed irrelevant by automatic attribute selection. At this stage, variables related to capsule penetration and the postoperative Gleason score consistently ranked highest among the selected attributes, as they provided the greatest information gain for the predictive models (Online Appendix 1). Table 1 presents the risk analyses of the 16 variables used to build the model. The variables selected to train the predictive models differed significantly between the two cohorts.


In the model-building phase, the ANN yielded the following results on the training dataset: 91.6% sensitivity (recall), 91.6% precision, 91.5% accuracy, and an AUC of 0.96. On the training dataset, the SVM algorithm achieved 82% sensitivity (recall), 83.1% precision, 82% accuracy, and an AUC of 0.82, and the RF algorithm achieved 80.4% sensitivity (recall), 80.4% precision, 80.3% accuracy, and an AUC of 0.88. Test-set metrics for all algorithms are presented in Table 2. The ANN achieved an AUC of 0.96 on the training set, which dropped to 0.84 on the test set, consistent with overfitting. This likely reflects the model’s high capacity relative to the size of the training folds, which allowed it to learn noise despite regularization measures such as early stopping.
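The reported metrics can be computed from labels, thresholded predictions, and predicted probabilities as sketched below. This is a minimal illustration, not the study's code: the source does not state whether precision and recall were computed for the BCR class or averaged over both classes (this sketch uses the BCR class), and the 0.5 cut-off and toy data are assumptions. Computing `roc_auc` on training and test predictions separately exposes the kind of train-test gap described for the ANN.

```python
def classification_metrics(y_true, y_pred):
    """Accuracy, precision, and sensitivity (recall) for the positive (BCR = 1) class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


def roc_auc(y_true, scores):
    """AUC = probability that a random BCR case scores higher than a random non-BCR case.

    Ties count as 1/2 (Mann-Whitney formulation); O(n_pos * n_neg), fine for a few hundred cases.
    """
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Toy example with hypothetical predicted probabilities.
y_true = [1, 1, 0, 0, 1, 0]
probs = [0.9, 0.4, 0.35, 0.8, 0.7, 0.1]
y_pred = [1 if p >= 0.5 else 0 for p in probs]  # 0.5 cut-off is an assumption
print(classification_metrics(y_true, y_pred))
print(roc_auc(y_true, probs))
```

Unlike accuracy, precision, and recall, the AUC does not depend on the chosen cut-off, because it uses the ranking of the predicted probabilities.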


Given the performance of RF, a tree-based algorithm, we also trained XGBoost, a gradient-boosted tree ensemble. On the test dataset, XGBoost achieved 84% sensitivity (recall), 84% precision, 84% accuracy, and an AUC of 0.91 (Online Appendix 2). In addition, a deep learning algorithm was trained for comparison with the best ML model; it yielded lower metrics than the best ML algorithm (Table 2). The best ML algorithm was then validated on a new dataset of 96 patients, in which XGBoost correctly classified 82% of cases, with an AUC of 0.89 (Fig. 1a). Figure 2 shows the variable importance analysis of the XGBoost model. SHAP analysis supported the interpretability of the model, identifying postoperative Gleason score, prostate capsule infiltration, and surgical margins as the top predictors (Fig. 3).

Validation of the model. (a) ROC curves illustrating discriminatory performance. (b) Calibration plot showing agreement between predicted and observed risks. (c) Decision-curve analysis comparing net clinical benefit of the XGBoost model with CAPRA-S and default strategies.


Variable importance of the XGBoost model.


Variable importance based on SHAP values.


In addition, the decision curve shows that the XGBoost model provides a higher net clinical benefit than CAPRA-S and the default strategies across a broad range of threshold probabilities, suggesting that it is more effective in determining when to intervene (Fig. 1c). The calibration curve also shows that XGBoost predictions are better calibrated than those of CAPRA-S, as its curve (blue line) lies closer to the line of perfect calibration (Fig. 1b).
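The quantities behind the decision and calibration curves can be computed directly. This is a minimal sketch assuming the standard decision-curve definition of net benefit, NB = TP/n - FP/n × pt/(1 - pt) at threshold probability pt, and equal-width probability bins for calibration; the source does not describe its binning, and the function names and toy data are hypothetical.

```python
def net_benefit(y_true, probs, threshold):
    """Net benefit of treating patients whose predicted risk >= threshold.

    NB = TP/n - FP/n * pt / (1 - pt), where pt is the threshold probability.
    """
    n = len(y_true)
    tp = sum(1 for t, p in zip(y_true, probs) if p >= threshold and t == 1)
    fp = sum(1 for t, p in zip(y_true, probs) if p >= threshold and t == 0)
    return tp / n - fp / n * threshold / (1 - threshold)


def net_benefit_treat_all(y_true, threshold):
    """'Treat all' reference strategy; 'treat none' has net benefit 0 by definition."""
    prevalence = sum(y_true) / len(y_true)
    return prevalence - (1 - prevalence) * threshold / (1 - threshold)


def calibration_bins(y_true, probs, n_bins=10):
    """Return (mean predicted risk, observed event rate) for each non-empty equal-width bin."""
    bins = [[] for _ in range(n_bins)]
    for t, p in zip(y_true, probs):
        k = min(int(p * n_bins), n_bins - 1)  # p == 1.0 falls in the last bin
        bins[k].append((p, t))
    return [
        (sum(p for p, _ in b) / len(b), sum(t for _, t in b) / len(b))
        for b in bins
        if b
    ]


y_true = [1, 1, 0, 0, 1, 0]
probs = [0.9, 0.4, 0.35, 0.8, 0.7, 0.1]  # hypothetical model outputs
for pt in (0.2, 0.5):
    print(pt, net_benefit(y_true, probs, pt), net_benefit_treat_all(y_true, pt))
print(calibration_bins(y_true, probs, n_bins=2))
```

Plotting `net_benefit` over a grid of thresholds, together with the "treat all" line and zero for "treat none", gives a decision curve; a well-calibrated model has calibration points near the diagonal.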

Finally, CAPRA-S scores were calculated for progression at 3 years, using a score of 6 as the cutoff point. This yielded a dichotomous output, in which scores of 0–6 indicated a low probability of recurrence and scores of 7–12 a high probability of recurrence. The scores were calculated for the 307 cases in the XGBoost test dataset (with CV). Compared with the actual recurrence outcomes, CAPRA-S achieved 77% sensitivity, 76% precision, 77.2% accuracy, and an AUC of 0.78 (Fig. 1a).
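The dichotomization can be sketched as below; the scores, outcomes, and function names are hypothetical. One consequence worth noting: if the AUC is computed on the dichotomized output rather than the raw 0–12 score (the source does not say which), the ROC curve has a single operating point, and the AUC reduces to (sensitivity + specificity) / 2.

```python
def capra_s_high_risk(scores, cutoff=6):
    """Dichotomize CAPRA-S scores: 0..cutoff -> 0 (low risk), cutoff+1..12 -> 1 (high risk)."""
    labels = []
    for s in scores:
        if not 0 <= s <= 12:
            raise ValueError(f"CAPRA-S score out of range 0-12: {s}")
        labels.append(1 if s > cutoff else 0)
    return labels


def binary_roc_auc(y_true, y_pred):
    """ROC AUC of a 0/1 prediction, which reduces to (sensitivity + specificity) / 2."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    return (sensitivity + specificity) / 2


# Hypothetical CAPRA-S scores and observed outcomes (1 = BCR).
scores = [2, 7, 9, 5, 11, 6]
observed = [0, 1, 0, 1, 1, 0]
predicted = capra_s_high_risk(scores)
print(predicted, binary_roc_auc(observed, predicted))
```

The identity follows from the trapezoid area under the three ROC points (0, 0), (1 - specificity, sensitivity), and (1, 1).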

Discussion

The European Association of Urology recommends adjuvant radiation following RP for patients with adverse pathology [7]. However, a systematic review of risk stratification for patients with recurrence after RP found no evidence that the proposed risk factors should be used to tailor treatment in clinical practice. Furthermore, after RP, the only risk factors with moderate levels of evidence were pT3 stage, Gleason grade 4, surgical margins, and pre-salvage PSA levels exceeding 0.5 ng/ml [18].

There is little evidence on the use of ML tools to assess patients with recurrence after RP [19]. Correctly identifying patients who will experience BCR is essential, because adjuvant therapies carry adverse effects. Unfortunately, the recommended prognostic tools have low discriminative capacity [20,21].

Wong analyzed 19 variables in 338 patients who underwent RALP, 25 of whom experienced BCR over a 12-month follow-up period. The k-nearest neighbors (kNN), RF, and logistic regression (LR) algorithms yielded AUCs of 0.903, 0.924, and 0.940, respectively. However, these models were built on a small database, so it is unclear whether the ML algorithms were overfitted [22]. In a similar study, Eski analyzed 37 variables in 368 patients who underwent RALP, 73 of whom experienced BCR over a 35-month follow-up period. The authors reported AUCs of 0.93 with kNN and 0.95 with RF, as well as a further AUC of 0.93 with a third algorithm. However, the study was poorly designed, which makes these results unreliable [23].

Tan analyzed 18 variables in 1,130 patients who underwent RALP, 176 of whom experienced BCR over a 70-month follow-up period. Three ML models were trained to predict BCR using data validation and a 70/30 split strategy. For BCR prediction at 60 months, naïve Bayes achieved 0.823 precision and an AUC of 0.894, RF 0.838 precision and an AUC of 0.887, and SVM 0.810 precision and an AUC of 0.852. Tan also compared statistical risk models with ML models and found that the ML models compared favorably with Kattan’s nomogram [24].

Finally, Lee conducted an ML study of 13 variables in 5,114 patients who underwent RP, 1,207 of whom experienced BCR over a 60-month follow-up period. Using an 80/20 data split, they obtained 0.719 precision and an AUC of 0.805 with RF, 0.705 precision and an AUC of 0.796 with ANN, and 0.740 precision and an AUC of 0.803 with logistic regression [25].

The aforementioned studies generally found that tree-based algorithms exhibit good predictive performance. In this study, RF, used as the initial model, provided consistent results, and switching to the XGBoost ensemble method further improved the performance metrics, establishing it as the best model in this setting. XGBoost outperformed the DNN, likely owing to its built-in regularization, early stopping, and better handling of structured tabular data of moderate sample size.

The variable importance analysis of the XGBoost model underscores the ability of these algorithms to identify key features for BCR prediction in patients with PCa. Such transparency is essential for healthcare professionals to understand and trust the model’s decisions in a clinical setting. Moreover, the performance of XGBoost on a new validation dataset supports its ability to generalize, making it potentially applicable in dynamic clinical settings.

Previous oncology studies have highlighted the ability of tree-based ensemble algorithms to handle complex datasets and extract nonlinear patterns, thus improving the prediction of clinically relevant events [26,27,28]. This may allow a more accurate assessment of the probability of recurrence in patients with PCa after RALP.

In the present study, decision curve analysis highlighted the superiority of the XGBoost-based AI model over CAPRA-S in predicting BCR. While CAPRA-S offers moderate performance, the net clinical benefit of the XGBoost model shown in the decision curve suggests that using it could avoid both overtreatment and undertreatment. These findings align with previous studies showing that ML models can outperform traditional models such as CAPRA in complex clinical scenarios [24]. Model outputs were stratified into clinically actionable risk categories: > 80% as high risk (requiring intensified PSA monitoring and early salvage evaluation), < 20% as low risk (standard surveillance), and 20–80% as intermediate risk (individualized follow-up). These thresholds correspond to the net benefit range observed in the decision curve analysis (Fig. 1c), where the XGBoost model outperformed CAPRA-S, particularly among patients with recurrence probabilities between 30% and 70%. In this intermediate-risk group, where clinical decisions are often uncertain, the model offers finer stratification, supporting more selective intervention and potentially reducing overtreatment.
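The stratification rule above can be written as a small function. This sketch assumes that predicted probabilities of exactly 20% and 80% fall in the intermediate band, since the text defines 20–80% as intermediate; the function and dictionary names are hypothetical, and the action strings simply restate the text.

```python
def bcr_risk_category(probability):
    """Map a predicted BCR probability to the risk bands described in the text.

    > 0.80 -> high; < 0.20 -> low; 0.20-0.80 (inclusive) -> intermediate.
    """
    if not 0.0 <= probability <= 1.0:
        raise ValueError("probability must lie in [0, 1]")
    if probability > 0.80:
        return "high"
    if probability < 0.20:
        return "low"
    return "intermediate"


# Follow-up actions as listed in the text for each band.
FOLLOW_UP = {
    "high": "intensified PSA monitoring and early salvage evaluation",
    "intermediate": "individualized follow-up",
    "low": "standard surveillance",
}

for p in (0.05, 0.20, 0.55, 0.80, 0.93):
    band = bcr_risk_category(p)
    print(f"{p:.2f} -> {band}: {FOLLOW_UP[band]}")
```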

The main limitation of this study is its retrospective, single-center design, which may limit the generalizability of the findings to other populations and clinical settings. Further limitations include potential over-regularization introduced by class undersampling during model training, reliance on the accuracy and completeness of electronic health record coding, and the absence of genomic variables and imaging data, which could provide additional information and enhance the model’s predictive power. Nevertheless, these results reinforce the need to consider advanced ML-based approaches to assess and predict clinical outcomes in patients after RALP, providing a personalized perspective for counseling and treatment. Additional research is needed to optimize these models and understand their limitations in the clinical setting. Future steps will include external validation using public datasets such as TCGA-PRAD and the application of transfer learning techniques to evaluate model adaptability when molecular or imaging biomarkers are incorporated. Continuous collaboration between clinical experts and data scientists is key to achieving effective medical decision-making.

Although the model included patients aged 18 to 80 years, certain subgroups such as older adults may be underrepresented. This raises potential concerns regarding algorithmic bias, as an uneven distribution of demographic or clinical characteristics could affect prediction reliability across populations. Future studies should ensure diverse and representative samples to support equitable clinical applicability of AI-based models.

The clinical applicability of our model for predicting BCR after RP is strengthened by its use of postoperative Gleason score, lymphovascular invasion, and tumor percentage, which have demonstrated strong associations with prostate cancer prognosis. These predictors not only enhance the interpretability of the model but also align with existing clinical assessment protocols, making the model’s results directly actionable for healthcare providers. The XGBoost model provides an individualized probability of BCR during the first 24 months after surgery. In clinical practice, this probability can be used to stratify patients into higher- and lower-risk groups for BCR. A higher predicted risk supports more intensive management, including earlier consideration of salvage radiotherapy before PSA rises appreciably, PSA surveillance every three months instead of every six months, and shared decision-making counseling about adjuvant androgen deprivation therapy or enrollment in clinical trials. In contrast, a lower predicted risk justifies a more conservative approach, with less frequent imaging and laboratory follow-up, thereby reducing patient burden and avoiding unnecessary interventions. Because the score is calculated from clinicopathological variables already stored in the electronic health record, it can be generated automatically at discharge and displayed alongside established tools such as CAPRA-S, giving clinicians an additional data-driven aid to personalize postoperative care.

To facilitate real-world integration, we developed a prototype web-based risk calculator using Python and Streamlit (Online Appendix 3, Fig. 1). Physicians in our Urology Department currently use it internally for prospective validation in new post-RALP patients. This pilot use enables real-time risk estimation at the point of care and allows model predictions to be compared with actual outcomes to iteratively assess clinical utility.

Further studies across diverse populations and clinical environments are essential to validate the model’s robustness and ensure its applicability in a wider range of clinical contexts.

Data availability

The dataset analyzed in this study contains sensitive patient information from a private hospital and cannot be publicly shared due to confidentiality restrictions. Access to the data may be granted upon reasonable request, subject to review and approval by the Ethics Committee of the Hospital Italiano de Buenos Aires. Requests must include a detailed justification and a research proposal outlining the intended use of the data. For inquiries regarding data access, please contact: .

References

1. Sung, H. et al. Global cancer statistics 2020: GLOBOCAN estimates of incidence and mortality worldwide for 36 cancers in 185 countries. CA Cancer J. Clin. 71(3), 209–249. https://doi.org/10.3322/caac.21660 (2021).
2. Hamdy, F. C. et al. 10-year outcomes after monitoring, surgery, or radiotherapy for localized prostate cancer. N. Engl. J. Med. 375(15), 1415–1424. https://doi.org/10.1056/NEJMoa1606220 (2016).
3. Wilt, T. J. et al. Follow-up of prostatectomy versus observation for early prostate cancer. N. Engl. J. Med. 377(2), 132–142. https://doi.org/10.1056/NEJMoa1615869 (2017).
4. Choueiri, T. K. et al. Impact of postoperative prostate-specific antigen disease recurrence and the use of salvage therapy on the risk of death. Cancer 116(8), 1887–1892. https://doi.org/10.1002/cncr.25013 (2010).
5. Jackson, W. C. et al. Intermediate endpoints after postprostatectomy radiotherapy: 5-year distant metastasis to predict overall survival. Eur. Urol. 74(4), 413–419. https://doi.org/10.1016/j.eururo.2017.12.023 (2018).
6. Ross, A. E. et al. Utility of risk models in decision making after radical prostatectomy: Lessons from a natural history cohort of intermediate- and high-risk men. Eur. Urol. 69(3), 496–504. https://doi.org/10.1016/j.eururo.2015.04.016 (2016).
7. Tilki, D. et al. EAU-EANM-ESTRO-ESUR-ISUP-SIOG guidelines on prostate cancer. Part II-2024 update: Treatment of relapsing and metastatic prostate cancer. Eur. Urol. 86(2), 164–182 (2024).
8. Chun, F. K., Karakiewicz, P. I. & Briganti, A. Prostate cancer diagnosis: Importance of individualized risk stratification models over PSA alone. Eur. Urol. 54(2), 241–242. https://doi.org/10.1016/j.eururo.2008.05.024 (2008).
9. D’Amico, A. V. et al. Biochemical outcome after radical prostatectomy, external beam radiation therapy, or interstitial radiation therapy for clinically localized prostate cancer. JAMA 280(11), 969–974. https://doi.org/10.1001/jama.280.11.969 (1998).
10. Roberts, W. W. et al. Contemporary identification of patients at high risk of early prostate cancer recurrence after radical retropubic prostatectomy. Urology 57(6), 1033–1037. https://doi.org/10.1016/s0090-4295(01)00978-5 (2001).
11. Kattan, M. W., Eastham, J. A., Stapleton, A. M., Wheeler, T. M. & Scardino, P. T. A preoperative nomogram for disease recurrence following radical prostatectomy for prostate cancer. J. Natl. Cancer Inst. 90(10), 766–771. https://doi.org/10.1093/jnci/90.10.766 (1998).
12. Cooperberg, M. R. et al. The University of California, San Francisco Cancer of the prostate risk assessment score: a straightforward and reliable preoperative predictor of disease recurrence after radical prostatectomy. J. Urol. 173(6), 1938–1942. https://doi.org/10.1097/01.ju.0000158155.33890.e7 (2005).
13. Greene, K. L. et al. Validation of the Kattan preoperative nomogram for prostate cancer recurrence using a community-based cohort: results from cancer of the prostate strategic urological research endeavor (CaPSURE). J. Urol. 171(6), 2255–2259. https://doi.org/10.1097/01.ju.0000127733.01845.57 (2004).
14. Campbell, J. M. et al. Optimum tools for predicting clinical outcomes in prostate cancer patients undergoing radical prostatectomy: A systematic review of prognostic accuracy and validity. Clin. Genitourin. Cancer 15(5), e827–e834. https://doi.org/10.1016/j.clgc.2017.06.001 (2017).
15. Kourou, K., Exarchos, T. P., Exarchos, K. P., Karamouzis, M. V. & Fotiadis, D. I. Machine learning applications in cancer prognosis and prediction. Comput. Struct. Biotechnol. J. 13, 8–17. https://doi.org/10.1016/j.csbj.2014.11.005 (2014).
16. Collins, G. S. et al. TRIPOD+AI statement: Updated guidance for reporting clinical prediction models that use regression or machine learning methods. BMJ 385, e078378. https://doi.org/10.1136/bmj-2023-078378 (2024). Erratum in: BMJ 385, q902 (2024).
17. Wolff, R. F. et al. PROBAST: A tool to assess the risk of bias and applicability of prediction model studies. Ann. Intern. Med. 170(1), 51–58. https://doi.org/10.7326/M18-1376 (2019).
18. Weiner, A. B. et al. Risk stratification of patients with recurrence after primary treatment for prostate cancer: A systematic review. Eur. Urol. 86(3), 200–210 (2024).
19. Suarez-Ibarrola, R., Hein, S., Reis, G., Gratzke, C. & Miernik, A. Current and future applications of machine and deep learning in urology: A review of the literature on urolithiasis, renal cell carcinoma, and bladder and prostate cancer. World J. Urol. 38, 2329–2347 (2020).
20. Greene, K. L. et al. Validation of the Kattan preoperative nomogram for prostate cancer recurrence using a community-based cohort: results from cancer of the prostate strategic urological research endeavor (CaPSURE). J. Urol. 171(6 Pt 1), 2255–2259. https://doi.org/10.1097/01.ju.0000127733.01845.57 (2004).
21. Campbell, J. M. et al. Optimum tools for predicting clinical outcomes in prostate cancer patients undergoing radical prostatectomy: A systematic review of prognostic accuracy and validity. Clin. Genitourin. Cancer 15(5), e827–e834. https://doi.org/10.1016/j.clgc.2017.06.001 (2017).
22. Wong, N. C., Lam, C., Patterson, L. & Shayegan, B. Use of machine learning to predict early biochemical recurrence after robot-assisted prostatectomy. BJU Int. 123(1), 51–57 (2019).
23. Eski, M. et al. Machine learning algorithms can more efficiently predict biochemical recurrence after robot assisted radical prostatectomy. Eur. Urol. 79, 1651 (2021).
24. Tan, Y. G. et al. Incorporating artificial intelligence in urology: Supervised machine learning algorithms demonstrate comparative advantage over nomograms in predicting biochemical recurrence after prostatectomy. Prostate 82(3), 298–305 (2022).
25. Lee, S. J. et al. Prediction system for prostate cancer recurrence using machine learning. Appl. Sci. 10(4), 1333 (2020).
26. Chen, Q. et al. Clinical data prediction model to identify patients with early-stage pancreatic cancer. JCO Clin. Cancer Inform. 5, 279–287. https://doi.org/10.1200/CCI.20.00137 (2021).
27. Yuan, Y. et al. A machine learning approach using XGBoost predicts lung metastasis in patients with ovarian cancer. Biomed. Res. Int. 2022, 8501819. https://doi.org/10.1155/2022/8501819 (2022).
28. Zhu, H. et al. Preoperative prediction for lymph node metastasis in early gastric cancer by interpretable machine learning models: A multicenter study. Surgery 171(6), 1543–1551. https://doi.org/10.1016/j.surg.2021.12.015 (2022).

Funding

This research received no specific grant from any funding agency in the public, commercial, or not-for-profit sectors.

Ethics declarations

Competing interests

The authors declare no competing interests.

Informed consent

Patients enrolled in the study voluntarily signed an informed consent form regarding the use of their data in accordance with the principles of the Declaration of Helsinki, complying with the standards and protocols for conducting human research established by the Ethics Committee of the Italian Hospital of Buenos Aires.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.


About this article

Cite this article

Bergero, M.A., Martínez, P., Modina, P. et al. Artificial intelligence model for predicting early biochemical recurrence of prostate cancer after robotic-assisted radical prostatectomy. Sci Rep 15, 30822 (2025). https://doi.org/10.1038/s41598-025-16362-1



DOI: https://doi.org/10.1038/s41598-025-16362-1