Fine-tune OpenAI GPT-OSS models using Amazon SageMaker HyperPod recipes – AWS

This post is the second part of the GPT-OSS series focusing on model customization with Amazon SageMaker AI. In Part 1, we demonstrated fine-tuning GPT-OSS models using open source Hugging Face libraries with SageMaker training jobs, which supports distributed multi-GPU and multi-node configurations, so you can spin up high-performance clusters on demand.

In this post, we show how you can fine-tune GPT-OSS models using recipes on SageMaker HyperPod and SageMaker training jobs. SageMaker HyperPod recipes help you get started with training and fine-tuning popular publicly available foundation models (FMs) such as Meta’s Llama, Mistral, and DeepSeek in just minutes, using either SageMaker HyperPod or training jobs. The recipes provide pre-built, validated configurations that alleviate the complexity of setting up distributed training environments while maintaining enterprise-grade performance and scalability for models. We outline steps to fine-tune the GPT-OSS model on a multilingual reasoning dataset, HuggingFaceH4/Multilingual-Thinking, so GPT-OSS can handle structured, chain-of-thought (CoT) reasoning across multiple languages.

Solution overview

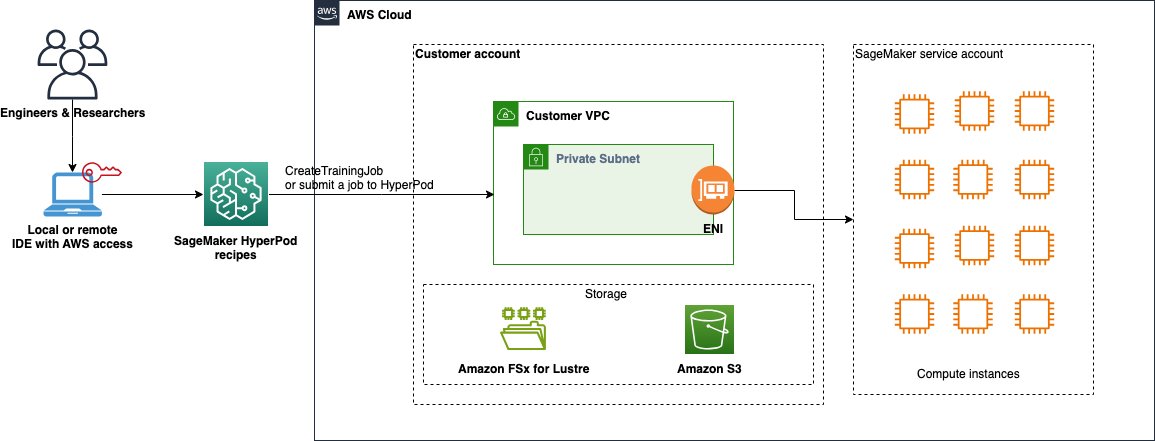

This solution uses SageMaker HyperPod recipes to run a fine-tuning job on HyperPod using Amazon Elastic Kubernetes Service (Amazon EKS) orchestration or training jobs. Recipes are processed through the SageMaker HyperPod recipe launcher, which serves as the orchestration layer responsible for launching a job on the corresponding architecture such as SageMaker HyperPod (Slurm or Amazon EKS) or training jobs. To learn more, see SageMaker HyperPod recipes.

For details on fine-tuning the GPT-OSS model, see Fine-tune OpenAI GPT-OSS models on Amazon SageMaker AI using Hugging Face libraries.

In the following sections, we discuss the prerequisites for both options, and then move on to data preparation. The prepared data is saved to Amazon FSx for Lustre, which is used as the persistent file system for SageMaker HyperPod, or to Amazon Simple Storage Service (Amazon S3) for training jobs. We then use recipes to submit the fine-tuning job, and finally deploy the trained model to a SageMaker endpoint for testing and evaluating the model. The preceding diagram illustrates this architecture.

Prerequisites

To follow along, you must have the following prerequisites:

- A local development environment with AWS credentials configured for creating and accessing SageMaker resources, or a remote environment such as Amazon SageMaker Studio.

- For SageMaker HyperPod fine-tuning, complete the following:

  - Make sure you have one ml.p5.48xlarge instance (with 8 x NVIDIA H100 GPUs) for cluster usage. If you don’t have sufficient limits, request the following SageMaker quota on the Service Quotas console: P5 instance (ml.p5.48xlarge) for HyperPod clusters (ml.p5.48xlarge for cluster usage): 1.

  - Set up a SageMaker HyperPod cluster on Amazon EKS. For instructions, refer to Orchestrating SageMaker HyperPod clusters with Amazon EKS. Alternatively, you can use the AWS CloudFormation template provided in the Amazon EKS Support in Amazon SageMaker HyperPod workshop and follow the instructions to set up a cluster and a development environment to access and submit jobs to the cluster.

  - Set up an FSx for Lustre file system for saving and loading data and checkpoints. Refer to Set Up an FSx for Lustre File System to set up an FSx for Lustre volume and associate it with the cluster.

- For fine-tuning the model using SageMaker training jobs, you must have one ml.p5.48xlarge instance (with 8 x NVIDIA H100 GPUs) for training job usage. If you don’t have sufficient limits, request the following SageMaker quota on the Service Quotas console: P5 instance (ml.p5.48xlarge) for training jobs (ml.p5.48xlarge for training job usage): 1.

It might take up to 24 hours for these limits to be approved. You can also use SageMaker training plans to reserve these instances for a specific timeframe and use case (cluster or training jobs usage). For more details, see Reserve training plans for your training jobs or HyperPod clusters.

Next, use your preferred development environment to prepare the dataset for fine-tuning. You can find the full code in the Generative AI using Amazon SageMaker repository on GitHub.

Data tokenization

We use the HuggingFaceH4/Multilingual-Thinking dataset, a multilingual reasoning dataset containing CoT examples translated into languages such as French, Spanish, and German. The recipe supports a sequence length of 4K (4,096) tokens for the GPT-OSS 120B model. The following example code demonstrates how to tokenize the Multilingual-Thinking dataset. The recipe accepts data in Hugging Face datasets format (Arrow). After it’s tokenized, you can save the processed dataset to disk.

```python
import os

import boto3
import numpy as np
from datasets import load_dataset
from transformers import AutoTokenizer

dataset = load_dataset("HuggingFaceH4/Multilingual-Thinking", split="train")
tokenizer = AutoTokenizer.from_pretrained("openai/gpt-oss-120b")

# Inspect how the chat template renders one conversation
messages = dataset[0]["messages"]
conversation = tokenizer.apply_chat_template(messages, tokenize=False)
print(conversation)


def preprocess_function(example):
    # Apply the chat template and tokenize, padding/truncating every sample to 4,096 tokens
    return tokenizer.apply_chat_template(
        example["messages"],
        return_dict=True,
        padding="max_length",
        max_length=4096,
        truncation=True,
    )


def label(x):
    # Labels mirror input_ids; pad positions are set to -100 so the loss ignores them
    x["labels"] = np.array(x["input_ids"])
    x["labels"][x["labels"] == tokenizer.pad_token_id] = -100
    x["labels"] = x["labels"].tolist()
    return x


dataset = dataset.map(
    preprocess_function,
    remove_columns=["reasoning_language", "developer", "user", "analysis", "final", "messages"],
)
dataset = dataset.map(label)

# For HyperPod, save to the mounted FSx volume
dataset.save_to_disk("/fsx/multilingual_4096")

# For training jobs, save locally first, then upload the directory to S3
dataset.save_to_disk("multilingual_4096")


def upload_directory(local_dir, bucket_name, s3_prefix=""):
    s3_client = boto3.client("s3")
    for root, dirs, files in os.walk(local_dir):
        for file in files:
            local_path = os.path.join(root, file)
            # Calculate relative path for S3 (S3 keys always use forward slashes)
            relative_path = os.path.relpath(local_path, local_dir)
            s3_path = os.path.join(s3_prefix, relative_path).replace("\\", "/")
            print(f"Uploading {local_path} to {s3_path}")
            s3_client.upload_file(local_path, bucket_name, s3_path)


# Replace <your-s3-bucket> with your bucket name. The training job example later reads
# from s3://<bucket>/datasets/multilingual_4096/, so this prefix matches it.
upload_directory("./multilingual_4096/", "<your-s3-bucket>", "datasets/multilingual_4096")
```

Now that you have prepared and tokenized the dataset, you can fine-tune the GPT-OSS model on it using either SageMaker HyperPod or training jobs. SageMaker training jobs are ideal for one-off or periodic training workloads that need temporary compute resources, providing a fully managed, on-demand experience for your training needs. SageMaker HyperPod is optimal for continuous development and experimentation, providing a persistent, preconfigured, and failure-resilient cluster. Depending on your choice, skip to the appropriate section for next steps.
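Whichever option you choose, the training data has the same shape. As a quick check of the tokenization step, the padding, truncation, and label-masking rules used by `preprocess_function` and `label` can be reproduced on toy token IDs without downloading the tokenizer. The following is a minimal plain-Python sketch, not the Hugging Face implementation: it assumes right-side padding, and `pad_and_label` and `PAD_ID` are hypothetical names.

```python
from typing import Dict, List

IGNORE_INDEX = -100  # label value that PyTorch's cross-entropy loss ignores by default


def pad_and_label(token_ids: List[int], max_length: int, pad_id: int) -> Dict[str, List[int]]:
    """Truncate or right-pad token_ids to max_length and build masked labels (sketch)."""
    ids = token_ids[:max_length]                # like truncation=True
    n_pad = max_length - len(ids)
    input_ids = ids + [pad_id] * n_pad          # like padding="max_length" (right side assumed)
    attention_mask = [1] * len(ids) + [0] * n_pad
    # Same rule as label(): copy input_ids, then mask every position equal to the pad ID
    labels = [IGNORE_INDEX if t == pad_id else t for t in input_ids]
    return {"input_ids": input_ids, "attention_mask": attention_mask, "labels": labels}


PAD_ID = 0  # hypothetical pad token ID for this toy example
example = pad_and_label([101, 7, 8, 9], max_length=6, pad_id=PAD_ID)
print(example["labels"])  # [101, 7, 8, 9, -100, -100]
```

Because the rule masks every token equal to the pad ID, a tokenizer that reuses a real special token (such as end-of-sequence) for padding would also have those genuine tokens masked; masking on `attention_mask == 0` instead avoids that.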

Fine-tune the model using SageMaker HyperPod

To fine-tune the model using HyperPod, start by setting up the virtual environment and installing the necessary dependencies to execute the training job on the EKS cluster. Make sure the cluster is InService before proceeding and that you’re using Python 3.9 or greater in your development environment.

```bash
python3 -m venv ${PWD}/venv
source venv/bin/activate
```

Next, download and set up the SageMaker HyperPod recipes repository:

```bash
git clone --recursive https://github.com/aws/sagemaker-hyperpod-recipes.git
cd sagemaker-hyperpod-recipes
pip3 install -r requirements.txt
```

You can now use the SageMaker HyperPod recipe launch scripts to submit your training job. Using the recipe involves updating the k8s.yaml configuration file and executing the launch script.

In recipes_collection/cluster/k8s.yaml, update the persistent_volume_claims section. It mounts the FSx claim to the /fsx directory of each compute pod:

```yaml
persistent_volume_claims:
  - claimName: fsx-claim
    mountPath: fsx
```

SageMaker HyperPod recipes provide a launch script for each recipe in the launcher_scripts directory. To fine-tune the GPT-OSS-120B model, update the launch script located at launcher_scripts/gpt_oss/run_hf_gpt_oss_120b_seq4k_gpu_lora.sh and set the cluster_type parameter.

The updated launch script should look similar to the following code when running SageMaker HyperPod with Amazon EKS. Make sure that cluster=k8s and cluster_type=k8s are updated in the launch script:

```bash
#!/bin/bash
# Original Copyright (c), NVIDIA CORPORATION. Modifications © Amazon.com

# Users should set up their cluster type in /recipes_collection/config.yaml

SAGEMAKER_TRAINING_LAUNCHER_DIR=${SAGEMAKER_TRAINING_LAUNCHER_DIR:-"$(pwd)"}

HF_MODEL_NAME_OR_PATH="openai/gpt-oss-120b" # HuggingFace pretrained model name or path
TRAIN_DIR="/fsx/multilingual_4096" # Location of training dataset
VAL_DIR="/fsx/multilingual_4096" # Location of validation dataset
EXP_DIR="/fsx/experiment" # Location to save experiment info including logging, checkpoints, etc.
HF_ACCESS_TOKEN="hf_xxxxxxxx" # Optional HuggingFace access token

# Important: the cluster=k8s and cluster_type=k8s lines are required when running on HyperPod with Amazon EKS
HYDRA_FULL_ERROR=1 python3 "${SAGEMAKER_TRAINING_LAUNCHER_DIR}/main.py" \
    recipes=fine-tuning/gpt_oss/hf_gpt_oss_120b_seq4k_gpu_lora \
    container="658645717510.dkr.ecr.us-west-2.amazonaws.com/smdistributed-modelparallel:sm-pytorch_gpt_oss_patch_pt-2.7_cuda12.8" \
    base_results_dir="${SAGEMAKER_TRAINING_LAUNCHER_DIR}/results" \
    recipes.run.name="hf-gpt-oss-120b-lora" \
    cluster=k8s \
    cluster_type=k8s \
    recipes.exp_manager.exp_dir="$EXP_DIR" \
    recipes.trainer.num_nodes=1 \
    recipes.model.data.train_dir="$TRAIN_DIR" \
    recipes.model.data.val_dir="$VAL_DIR" \
    recipes.model.hf_model_name_or_path="$HF_MODEL_NAME_OR_PATH" \
    recipes.model.hf_access_token="$HF_ACCESS_TOKEN"
```

When the script is ready, you can launch fine-tuning of the GPT-OSS 120B model using the following code:

```bash
chmod +x launcher_scripts/gpt_oss/run_hf_gpt_oss_120b_seq4k_gpu_lora.sh
bash launcher_scripts/gpt_oss/run_hf_gpt_oss_120b_seq4k_gpu_lora.sh
```

After submitting a job for fine-tuning, you can use the following command to verify successful submission. You should see the pods running in your cluster:

```
kubectl get pods
NAME                                  READY   STATUS    RESTARTS   AGE
hf-gpt-oss-120b-lora-h2cwd-worker-0   1/1     Running   0          14m
```

To check logs for the job, you can use the kubectl logs command:

```bash
kubectl logs -f hf-gpt-oss-120b-lora-h2cwd-worker-0
```

You should be able to see the following logs when the training begins and completes. You will find the checkpoints written to the /fsx/experiment/checkpoints folder.

```
warnings.warn(
Epoch 0: 40%|████ | 50/125 [08:47<13:10, 0.09it/s, Loss/train=0.254, Norms/grad_norm=0.128, LR/learning_rate=2.2e-6]
[NeMo I 2025-08-18 17:49:48 nemo_logging:381] save SageMakerCheckpointType.PEFT_FULL checkpoint: /fsx/experiment/checkpoints/peft_full/steps_50
[NeMo I 2025-08-18 17:49:48 nemo_logging:381] Saving PEFT checkpoint to /fsx/experiment/checkpoints/peft_full/steps_50
[NeMo I 2025-08-18 17:49:49 nemo_logging:381] Loading Base model from : openai/gpt-oss-120b
You are attempting to use Flash Attention 2 without specifying a torch dtype. This might lead to unexpected behaviour
Loading checkpoint shards: 100%|██████████| 15/15 [01:49<00:00, 7.33s/it]
[NeMo I 2025-08-18 17:51:39 nemo_logging:381] Merging the adapter, this might take a while......
Unloading and merging model: 100%|██████████| 547/547 [00:07<00:00, 71.27it/s]
[NeMo I 2025-08-18 17:51:47 nemo_logging:381] Checkpointing to /fsx/experiment/checkpoints/peft_full/steps_50/final-model......
[NeMo I 2025-08-18 18:00:14 nemo_logging:381] Successfully save the merged model checkpoint.
`Trainer.fit` stopped: `max_steps=50` reached.
Epoch 0: 40%|████ | 50/125 [23:09<34:43, 0.04it/s, Loss/train=0.264, Norms/grad_norm=0.137, LR/learning_rate=2e-6]
```

When the training is complete, the final merged model can be found in the experiment directory path you defined in the launcher script, under /fsx/experiment/checkpoints/peft_full/steps_50/final-model.
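To track progress from the `kubectl logs` output (for example, to chart the training loss), you can pull the step counter and metrics out of the progress-bar lines. The following is a minimal sketch that assumes the progress bar keeps the `Epoch N: ... step/total [..., Loss/train=...]` layout shown in the preceding log excerpt; the regular expressions and the `parse_progress` helper are hypothetical and not part of the recipes.

```python
import re
from typing import Dict, Optional

# Matches e.g. "Epoch 0: 40%|████ | 50/125 [08:47<13:10, ...]" and captures epoch, step, total
STEP_RE = re.compile(r"Epoch (\d+):.*?\|\s*(\d+)/(\d+) \[")
# Matches key=value metrics such as "Loss/train=0.254" or "LR/learning_rate=2.2e-6"
METRIC_RE = re.compile(r"([\w/]+)=([-+0-9.eE]+)")


def parse_progress(line: str) -> Optional[Dict[str, float]]:
    """Return epoch, step, total steps, and metrics from one progress line, or None."""
    step = STEP_RE.search(line)
    if step is None:
        return None
    result: Dict[str, float] = {
        "epoch": int(step.group(1)),
        "step": int(step.group(2)),
        "total_steps": int(step.group(3)),
    }
    for key, value in METRIC_RE.findall(line):
        result[key] = float(value)
    return result


line = ("Epoch 0: 40%|████ | 50/125 [08:47<13:10, 0.09it/s, "
        "Loss/train=0.254, Norms/grad_norm=0.128, LR/learning_rate=2.2e-6]")
print(parse_progress(line)["Loss/train"])  # 0.254
```

Lines that are not progress updates, such as the NeMo checkpoint messages, return `None`, so you can feed the whole log stream through the function and keep only the non-empty results.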

Fine-tune using SageMaker training jobs

You can also use recipes directly with SageMaker training jobs using the SageMaker Python SDK. The training jobs automatically spin up the compute, load the input data, run the training script, save the model to your output location, and tear down the instances, for a smooth training experience.

The following code snippet shows how to use recipes with the PyTorch estimator. You can use the training_recipe parameter to specify the training or fine-tuning recipe to be used, and recipe_overrides for any parameters that need replacement. For training jobs, update the input, output, and results directories to locations in /opt/ml as required by SageMaker training jobs.

```python
import os

import boto3
import sagemaker
from sagemaker.inputs import FileSystemInput
from sagemaker.pytorch import PyTorch

sagemaker_session = sagemaker.Session()
role = sagemaker.get_execution_role()
bucket = sagemaker_session.default_bucket()
output = os.path.join(f"s3://{bucket}", "output")

# Point the recipe's paths at the /opt/ml locations used by SageMaker training jobs
recipe_overrides = {
    "run": {
        "results_dir": "/opt/ml/model",
    },
    "exp_manager": {
        "exp_dir": "",
        "explicit_log_dir": "/opt/ml/output/tensorboard",
        "checkpoint_dir": "/opt/ml/checkpoints",
    },
    "model": {
        "data": {
            "train_dir": "/opt/ml/input/data/train",
            "val_dir": "/opt/ml/input/data/val",
        },
    },
    "use_smp_model": "False",
}

# create the estimator object
estimator = PyTorch(
    output_path=output,
    base_job_name="gpt-oss-recipe",
    role=role,
    instance_type="ml.p5.48xlarge",
    training_recipe="fine-tuning/gpt_oss/hf_gpt_oss_120b_seq4k_gpu_lora",
    recipe_overrides=recipe_overrides,
    sagemaker_session=sagemaker_session,
    image_uri="658645717510.dkr.ecr.us-west-2.amazonaws.com/smdistributed-modelparallel:sm-pytorch_gpt_oss_patch_pt-2.7_cuda12.8",
)

# submit the training job; the "train" and "val" channels are made available
# inside the container at /opt/ml/input/data/train and /opt/ml/input/data/val
estimator.fit(
    inputs={
        "train": f"s3://{bucket}/datasets/multilingual_4096/",
        "val": f"s3://{bucket}/datasets/multilingual_4096/",
    },
    wait=True,
)
```

After the job is submitted, you can monitor the status of your training job on the SageMaker console by choosing Training jobs under Training in the navigation pane. Choose the training job that starts with gpt-oss-recipe to view its details and logs. When the training job is complete, the outputs are saved to an S3 location. You can get the location of the output artifacts from the S3 model artifact section on the job details page.
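The nested `recipe_overrides` dictionary appears to address the same recipe fields that the HyperPod launch script sets with dotted command-line overrides; for example, `model.data.train_dir` in the dictionary lines up with `recipes.model.data.train_dir` in the script. To compare the two side by side, you can flatten the dictionary into dotted `key=value` strings. The following is a minimal sketch; `to_dotted_overrides` is a hypothetical helper for inspection only, and it is not necessarily how the SageMaker Python SDK applies overrides internally.

```python
from typing import Any, Dict, List


def to_dotted_overrides(overrides: Dict[str, Any], prefix: str = "recipes") -> List[str]:
    """Flatten a nested overrides dict into dotted key=value strings (hypothetical helper)."""
    flat: List[str] = []

    def walk(node: Dict[str, Any], path: List[str]) -> None:
        for key, value in node.items():
            if isinstance(value, dict):
                walk(value, path + [key])  # descend into nested sections
            else:
                flat.append(".".join(path + [key]) + "=" + str(value))

    walk(overrides, [prefix] if prefix else [])
    return flat


recipe_overrides = {
    "run": {"results_dir": "/opt/ml/model"},
    "model": {"data": {"train_dir": "/opt/ml/input/data/train", "val_dir": "/opt/ml/input/data/val"}},
}
for item in to_dotted_overrides(recipe_overrides):
    print(item)
# recipes.run.results_dir=/opt/ml/model
# recipes.model.data.train_dir=/opt/ml/input/data/train
# recipes.model.data.val_dir=/opt/ml/input/data/val
```

Seeing both forms together makes it easier to confirm that a path changed for training jobs (under /opt/ml) has a matching FSx path in the HyperPod script.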

Run inference

After you fine-tune your GPT-OSS model with SageMaker recipes on either SageMaker training jobs or SageMaker HyperPod, the output is a customized model artifact that merges the base model with the customized PEFT adapters. With training jobs, this final model is stored in Amazon S3; with SageMaker HyperPod, it is written to the FSx for Lustre volume, so copy it to Amazon S3 first. From Amazon S3, the model can be deployed directly to SageMaker endpoints for real-time inference.

To serve GPT-OSS models, you need a vLLM container at version v0.10.1 or later. A full list of vllm-openai Docker image versions is available on Docker Hub.

The steps to deploy your fine-tuned GPT-OSS model are outlined in this section.

Build the latest GPT-OSS container for your SageMaker endpoint

If you’re deploying the model from SageMaker Studio using JupyterLab or the Code Editor, both environments come with Docker preinstalled. Make sure that you’re using the SageMaker Distribution image v3.0 or later for compatibility. You can build your deployment container by running the following commands:

```bash
%%bash
# ^ use the %%bash magic only when running inside a JupyterLab cell
# navigate to the deploy dir from the current workdir to build the container
cd ./deploy
# build and push the container
chmod +x build.sh
bash build.sh
cd ..
```

If you’re running these commands from a local terminal or another environment, omit the %%bash line and run the commands as standard shell commands.

The build.sh script automatically builds and pushes a vllm-openai container that is compatible with SageMaker endpoints. After it’s built, the SageMaker endpoint-compatible vLLM image is pushed to Amazon Elastic Container Registry (Amazon ECR). SageMaker endpoints can then pull this image from Amazon ECR at runtime to spin up the container for inference.
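The image URI that build.sh pushes, and that the deployment code later passes as `image_uri`, follows the private Amazon ECR format `<account_id>.dkr.ecr.<region>.amazonaws.com/<repository>:<tag>`. The following sketch builds and sanity-checks such a URI in Python so the build and deploy steps agree on one value. The `ecr_image_uri` helper and its validation are illustrative assumptions, not an AWS API, and the helper assumes the standard `amazonaws.com` domain used in build.sh.

```python
import re


def ecr_image_uri(account_id: str, region: str, repository: str = "vllm", tag: str = "v0.10.1") -> str:
    """Build a private ECR image URI in the same shape build.sh uses (hypothetical helper)."""
    if not re.fullmatch(r"\d{12}", account_id):
        raise ValueError(f"AWS account IDs are 12 digits, got {account_id!r}")
    # Assumes the standard commercial partition domain, as in build.sh
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com/{repository}:{tag}"


print(ecr_image_uri("123456789012", "us-west-2"))
# 123456789012.dkr.ecr.us-west-2.amazonaws.com/vllm:v0.10.1
```

The defaults mirror `REPOSITORY_NAME=vllm` and `TAG=v0.10.1` in build.sh; if you change either there, pass the same values here.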

The following is an example of the build.sh script:

```bash
export REGION={region}          # replace with your AWS Region
export ACCOUNT_ID={account_id}  # replace with your AWS account ID
export REPOSITORY_NAME=vllm
export TAG=v0.10.1

full_name="${ACCOUNT_ID}.dkr.ecr.${REGION}.amazonaws.com/${REPOSITORY_NAME}:${TAG}"

echo "building $full_name"
# --network sagemaker is needed when building inside SageMaker Studio
DOCKER_BUILDKIT=0 docker build . --network sagemaker --tag $full_name --file Dockerfile

aws ecr get-login-password --region $REGION | docker login --username AWS --password-stdin $ACCOUNT_ID.dkr.ecr.$REGION.amazonaws.com

# If the repository doesn't exist in ECR, create it.
aws ecr describe-repositories --region ${REGION} --repository-names "${REPOSITORY_NAME}" > /dev/null 2>&1
if [ $? -ne 0 ]
then
    aws ecr create-repository --region ${REGION} --repository-name "${REPOSITORY_NAME}" > /dev/null
fi

# The image was tagged with its full ECR name during the build, so it can be pushed directly
docker push ${full_name}
```

The Dockerfile defines how we convert an open source vLLM Docker image into a SageMaker hosting-compatible image. This involves extending the base vllm-openai image, adding the serve entrypoint script, and making it executable. See the following example Dockerfile:

```dockerfile
FROM vllm/vllm-openai:v0.10.1
COPY serve /usr/bin/serve
RUN chmod 777 /usr/bin/serve
ENTRYPOINT [ "/usr/bin/serve" ]
```

The serve script acts as a translation layer between SageMaker hosting conventions and the vLLM runtime. You can keep the deployment workflow you’re familiar with when hosting models on SageMaker endpoints, while the script automatically converts SageMaker-specific configuration into the format that vLLM expects.

Key points to note about this script:

- It enforces the use of port 8080, which SageMaker requires for inference containers

- It dynamically translates environment variables prefixed with OPTION_ into CLI arguments for vLLM (for example, OPTION_MAX_MODEL_LEN=4096 becomes --max-model-len 4096)

- It prints the final set of arguments for visibility

- It finally launches the vLLM API server with the translated arguments
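To make the translation rules concrete, the following Python function reproduces the mapping that the serve script performs in Bash. It is a sketch for reasoning about, or unit-testing, your environment variables before deploying, not code that runs inside the container. `env_to_vllm_args` is a hypothetical name, and argument order follows the dictionary order rather than the order in which `env` prints variables.

```python
from typing import Dict, List

PREFIX = "OPTION_"


def env_to_vllm_args(env: Dict[str, str]) -> List[str]:
    """Mirror the serve script: OPTION_FOO_BAR=baz becomes --foo-bar baz, after --port 8080."""
    args = ["--port", "8080"]  # SageMaker sends inference traffic to port 8080
    for key, value in env.items():
        if not key.startswith(PREFIX):
            continue  # like grep "^OPTION_": other variables are ignored
        name = key[len(PREFIX):].lower().replace("_", "-")
        args.append("--" + name)
        if value:  # empty values become bare flags, like the script's [ -n "$value" ] check
            args.append(value)
    return args


print(env_to_vllm_args({"OPTION_MAX_MODEL_LEN": "4096", "OPTION_TENSOR_PARALLEL_SIZE": "8", "HOME": "/root"}))
# ['--port', '8080', '--max-model-len', '4096', '--tensor-parallel-size', '8']
```

Setting a variable to an empty string (for example, `OPTION_ENFORCE_EAGER=""`) produces a bare `--enforce-eager` flag with no value.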

The following is an example serve script:

```bash
#!/bin/bash
# Define the prefix for environment variables to look for
PREFIX="OPTION_"
ARG_PREFIX="--"

# Initialize an array for storing the arguments
# port 8080 required by sagemaker, https://docs.aws.amazon.com/sagemaker/latest/dg/your-algorithms-inference-code.html#your-algorithms-inference-code-container-response
ARGS=(--port 8080)

# Loop through all environment variables
while IFS='=' read -r key value; do
    # Remove the prefix from the key, convert to lowercase, and replace underscores with dashes
    arg_name=$(echo "${key#"${PREFIX}"}" | tr '[:upper:]' '[:lower:]' | tr '_' '-')

    # Add the argument name and value to the ARGS array
    ARGS+=("${ARG_PREFIX}${arg_name}")
    if [ -n "$value" ]; then
        ARGS+=("$value")
    fi
done < <(env | grep "^${PREFIX}")

echo "-------------------------------------------------------------------"
echo "vLLM engine args: [${ARGS[@]}]"
echo "-------------------------------------------------------------------"

# Pass the collected arguments to the main entrypoint
exec python3 -m vllm.entrypoints.openai.api_server "${ARGS[@]}"
```

Host customized GPT-OSS as a SageMaker real-time endpoint

Now you can deploy your fine-tuned GPT-OSS model using the ECR image URI you built in the previous step. In this example, the model artifacts are stored securely in an S3 bucket, and SageMaker will download them into the container at runtime. Complete the following configurations:

- Set model_data to point to the S3 prefix where your model artifacts are located

- Set the OPTION_MODEL environment variable to /opt/ml/model, which is where SageMaker mounts the model inside the container

- (Optional) If you’re serving a model from the Hugging Face Hub instead of Amazon S3, you can set OPTION_MODEL directly to the Hugging Face model ID
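The two options in the preceding list differ in both the OPTION_MODEL value and whether model_data is set. The following sketch captures that choice in one place. `model_source` is a hypothetical helper, and the dictionary shape simply mirrors the `S3DataSource` block used in the deployment code (an uncompressed S3 prefix rather than a model.tar.gz).

```python
from typing import Any, Dict, Optional, Tuple


def model_source(s3_uri: Optional[str] = None,
                 hf_model_id: Optional[str] = None) -> Tuple[str, Optional[Dict[str, Any]]]:
    """Return (OPTION_MODEL value, model_data) for an S3 prefix or a Hugging Face model ID."""
    if (s3_uri is None) == (hf_model_id is None):
        raise ValueError("pass exactly one of s3_uri or hf_model_id")
    if hf_model_id is not None:
        return hf_model_id, None  # vLLM fetches the weights itself; no model_data needed
    if not s3_uri.startswith("s3://"):
        raise ValueError(f"expected an s3:// URI, got {s3_uri!r}")
    model_data = {
        "S3DataSource": {
            # Normalize to a trailing slash so the value reads unambiguously as a prefix
            "S3Uri": s3_uri if s3_uri.endswith("/") else s3_uri + "/",
            "S3DataType": "S3Prefix",   # download every object under the prefix
            "CompressionType": "None",  # artifacts are stored uncompressed
        }
    }
    return "/opt/ml/model", model_data  # SageMaker places the artifacts here in the container


option_model, model_data = model_source(s3_uri="s3://amzn-s3-demo-bucket/gpt-oss/final-model")
print(option_model)  # /opt/ml/model
```

The bucket name in the usage line is a placeholder; pass your own artifact prefix, and use the returned values for the `OPTION_MODEL` entry in `env` and the `model_data` argument.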

The endpoint startup might take several minutes as the model artifacts are downloaded and the container is initialized. The following is example deployment code:

```python
inference_image = f"{account_id}.dkr.ecr.{region}.amazonaws.com/vllm:v0.10.1"
...
...
lmi_model = sagemaker.Model(
    image_uri=inference_image,
    env={
        "OPTION_MODEL": "/opt/ml/model",  # set this to let SM endpoint read a model stored in s3, else set it to HF MODEL ID
        "OPTION_SERVED_MODEL_NAME": "model",
        "OPTION_TENSOR_PARALLEL_SIZE": json.dumps(num_gpus),
        "OPTION_DTYPE": "bfloat16",
        # "VLLM_ATTENTION_BACKEND": "TRITON_ATTN_VLLM_V1",  # not required for vLLM 0.10.1 and above
        "OPTION_ASYNC_SCHEDULING": "true",
        "OPTION_QUANTIZATION": "mxfp4",
    },
    role=role,
    name=model_name,
    model_data={
        "S3DataSource": {
            "S3Uri": "s3://path/to/gpt-oss/model/artifacts",
            "S3DataType": "S3Prefix",
            "CompressionType": "None",
        }
    },
)
...
lmi_model.deploy(
    initial_instance_count=1,
    instance_type=instance_type,
    container_startup_health_check_timeout=600,
    endpoint_name=endpoint_name,
    endpoint_type=sagemaker.enums.EndpointType.INFERENCE_COMPONENT_BASED,
    inference_component_name=inference_component_name,
    resources=ResourceRequirements(requests={"num_accelerators": 1, "memory": 1024 * 3, "copies": 1}),
)
```

Sample inference

After your endpoint is deployed and in the InService state, you can invoke your fine-tuned GPT-OSS model using the SageMaker Python SDK.

The following is an example predictor setup:

```python
import boto3
import sagemaker
from sagemaker import deserializers, serializers

pretrained_predictor = sagemaker.Predictor(
    endpoint_name=endpoint_name,
    sagemaker_session=sagemaker.Session(boto3.Session(region_name=boto3.Session().region_name)),
    serializer=serializers.JSONSerializer(),
    deserializer=deserializers.JSONDeserializer(),
    component_name=inference_component_name,
)
```

The modified vLLM container is fully compatible with the OpenAI-style messages input format, making it straightforward to send chat-style requests:

```python
payload = {
    "messages": [{"role": "user", "content": "Hello who are you?"}],
    # OpenAI chat-completions sampling fields are top-level request keys
    "max_tokens": 64,
    "temperature": 0.2,
}
output = pretrained_predictor.predict(payload)
```

You have successfully deployed and invoked your custom fine-tuned GPT-OSS model on a SageMaker real-time endpoint, using the vLLM framework for optimized, low-latency inference. You can find more GPT-OSS hosting examples in the OpenAI gpt-oss examples GitHub repo.
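Because the container speaks the OpenAI chat format, the deserialized `output` should be an OpenAI-style chat completion object. The following sketch shows one way to build the request and pull the assistant text out of the response, assuming the standard `choices[0].message.content` layout. `build_chat_payload` and `extract_reply` are hypothetical helpers, and the sample response is hand-written for illustration, not real model output.

```python
from typing import Any, Dict, List, Optional


def build_chat_payload(user_text: str, max_tokens: int = 64, temperature: float = 0.2,
                       system_text: Optional[str] = None) -> Dict[str, Any]:
    """Build an OpenAI-style chat request body (hypothetical helper)."""
    messages: List[Dict[str, str]] = []
    if system_text:
        messages.append({"role": "system", "content": system_text})
    messages.append({"role": "user", "content": user_text})
    return {"messages": messages, "max_tokens": max_tokens, "temperature": temperature}


def extract_reply(response: Dict[str, Any]) -> str:
    """Return the first choice's assistant text from an OpenAI-style chat completion."""
    choices = response.get("choices") or []
    if not choices:
        raise ValueError("response has no choices")
    return choices[0]["message"].get("content") or ""


sample_response = {  # hand-written example, not real model output
    "choices": [{"index": 0, "message": {"role": "assistant", "content": "Hi there."},
                 "finish_reason": "stop"}]
}
print(extract_reply(sample_response))  # Hi there.
```

Against the live endpoint, the same helpers would be combined as `extract_reply(pretrained_predictor.predict(build_chat_payload("Hello who are you?")))`.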

Clean up

To avoid incurring additional charges, complete the following steps to clean up the resources used in this post:

- Delete the SageMaker endpoint:

```python
pretrained_predictor.delete_endpoint()
```

- If you created a SageMaker HyperPod cluster for the purposes of this post, delete the cluster by following the instructions in Deleting a SageMaker HyperPod cluster.

- Clean up the FSx for Lustre volume if it’s no longer needed by following instructions in Deleting a file system.

- If you used training jobs, the training instances are automatically deleted when the jobs are complete.

Conclusion

In this post, we showed how to fine-tune OpenAI’s GPT-OSS models (gpt-oss-120b and gpt-oss-20b) on SageMaker AI using SageMaker HyperPod recipes. We discussed how SageMaker HyperPod recipes provide a powerful yet accessible solution for organizations to scale their AI model training capabilities with large language models (LLMs) including GPT-OSS, using either a persistent cluster through SageMaker HyperPod, or an ephemeral cluster using SageMaker training jobs. The architecture streamlines complex distributed training workflows through its intuitive recipe-based approach, reducing setup time from weeks to minutes. We also showed how these fine-tuned models can be seamlessly deployed to production using SageMaker endpoints with vLLM optimization, providing enterprise-grade inference capabilities with OpenAI-compatible APIs. This end-to-end workflow, from training to deployment, helps organizations build and serve custom LLM solutions while using the scalable infrastructure of AWS and comprehensive ML platform capabilities of SageMaker.

To begin using the SageMaker HyperPod recipes, visit the Amazon SageMaker HyperPod recipes GitHub repo for comprehensive documentation and example implementations. If you’re interested in exploring the fine-tuning further, the Generative AI using Amazon SageMaker GitHub repo has the necessary code and notebooks. Our team continues to expand the recipe ecosystem based on customer feedback and emerging ML trends, making sure that you have the tools needed for successful AI model training.

Special thanks to everyone who contributed to the launch: Hengzhi Pei, Zach Kimberg, Andrew Tian, Leonard Lausen, Sanjay Dorairaj, Manish Agarwal, Sareeta Panda, Chang Ning Tsai, Maxwell Nuyens, Natasha Sivananjaiah, and Kanwaljit Khurmi.

About the authors

Durga Sury is a Senior Solutions Architect at Amazon SageMaker, where she helps enterprise customers build secure and scalable AI/ML systems. When she’s not architecting solutions, you can find her enjoying sunny walks with her dog, immersing herself in murder mystery books, or catching up on her favorite Netflix shows.

Pranav Murthy is a Senior Generative AI Data Scientist at AWS, specializing in helping organizations innovate with Generative AI, Deep Learning, and Machine Learning on Amazon SageMaker AI. Over the past 10+ years, he has developed and scaled advanced computer vision (CV) and natural language processing (NLP) models to tackle high-impact problems—from optimizing global supply chains to enabling real-time video analytics and multilingual search. When he’s not building AI solutions, Pranav enjoys playing strategic games like chess, traveling to discover new cultures, and mentoring aspiring AI practitioners. You can find Pranav on LinkedIn.

Sumedha Swamy is a Senior Manager of Product Management at Amazon Web Services (AWS), where he leads several areas of Amazon SageMaker, including SageMaker Studio – the industry-leading integrated development environment for machine learning, developer and administrator experiences, AI infrastructure, and SageMaker SDK.

Dmitry Soldatkin is a Senior AI/ML Solutions Architect at Amazon Web Services (AWS), helping customers design and build AI/ML solutions. Dmitry’s work covers a wide range of ML use cases, with a primary interest in Generative AI, deep learning, and scaling ML across the enterprise. He has helped companies in many industries, including insurance, financial services, utilities, and telecommunications. You can connect with Dmitry on LinkedIn.

Arun Kumar Lokanatha is a Senior ML Solutions Architect with the Amazon SageMaker team. He specializes in large language model training workloads, helping customers build LLM workloads using SageMaker HyperPod, SageMaker training jobs, and SageMaker distributed training. Outside of work, he enjoys running, hiking, and cooking.

Anirudh Viswanathan is a Senior Product Manager, Technical, at AWS with the SageMaker team, where he focuses on Machine Learning. He holds a Master’s in Robotics from Carnegie Mellon University and an MBA from the Wharton School of Business. Anirudh is a named inventor on more than 50 AI/ML patents. He enjoys long-distance running, exploring art galleries, and attending Broadway shows.
