Source camera attribution using a rule-based explainable convolutional neural network – Scientific Reports

- Article

- Open access

- Tahereh Nayerifard,

- Haleh Amintoosi &

- Abbas Ghaemi Bafghi

Scientific Reports (2026)

We are providing an unedited version of this manuscript to give early access to its findings. Before final publication, the manuscript will undergo further editing. Please note there may be errors present which affect the content, and all legal disclaimers apply.

Abstract

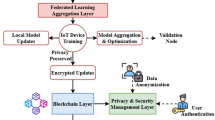

In recent years, there has been a push towards adopting artificial intelligence (AI) models, particularly deep learning (DL) models, in digital forensics (DF). While these models assist DF experts, their lack of transparency raises concerns about reliability. Although eXplainable Artificial Intelligence (XAI) has progressed, current methods remain limited for DF applications. Existing visual XAI techniques do not provide sufficient clarity for challenging image forensics tasks such as Source Camera Identification (SCI), nor do they offer mechanisms to assess whether a model’s decision is correct. Most methods simply highlight influential regions without enabling examiners to validate the decision itself. Rule-based explainability is a promising strategy for increasing transparency, yet deploying it on real-world Convolutional Neural Networks (CNNs) is still challenging. Prior studies remain largely experimental and often require modifying the model to extract rules, which conflicts with the integrity requirements of DF workflows. To address these gaps, this paper introduces a framework that makes CNN models used in the analysis stage of digital forensics explainable. The framework follows three fundamental steps (layers trace detection, layers majority voting, and rule extraction) to provide structured, transparent visual output together with rule-based textual explanations that are understandable to the user. Building on this framework, the paper introduces the first explainable model for SCI, a DF task that is particularly challenging to make explainable. The explainable output lets the DF examiner confirm or reject the main model’s prediction based on the decisions of the layers, while complying with the principle of integrity. In addition, by identifying 27 of the 37 incorrect predictions made by the base model, the framework improved the model’s precision from 97.33% to 99.2%.
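The abstract names layers majority voting and rule extraction but does not describe how they are implemented. The sketch below only illustrates the general idea of plain majority voting over per-layer decisions, followed by a textual rule that confirms or rejects the model's prediction. The function names, the camera labels, and the strict-majority threshold are all assumptions made for illustration and are not taken from the paper.

```python
from collections import Counter


def majority_vote(layer_votes):
    """Return (label, count) for the most frequent label among per-layer votes."""
    if not layer_votes:
        raise ValueError("at least one layer vote is required")
    label, count = Counter(layer_votes).most_common(1)[0]
    return label, count


def review_prediction(model_prediction, layer_votes):
    """Confirm the prediction only if a strict majority of layers agree with it.

    Hypothetical helper: the threshold and the rule wording are assumptions.
    Returns (verdict, rule), where rule is a human-readable IF/THEN string.
    """
    label, count = majority_vote(layer_votes)
    has_strict_majority = count > len(layer_votes) / 2
    if has_strict_majority and label == model_prediction:
        verdict = "confirm"
    else:
        verdict = "reject"
    rule = (
        f"IF {count} of {len(layer_votes)} layers vote '{label}' "
        f"AND model predicts '{model_prediction}' THEN {verdict}"
    )
    return verdict, rule


# Hypothetical camera labels, for illustration only.
verdict, rule = review_prediction("cam_A", ["cam_A", "cam_A", "cam_B"])
print(verdict)
print(rule)
```

In this sketch the original model is only read from, never modified, which is in the spirit of the integrity requirement the abstract mentions. How the per-layer votes would be obtained (the trace detection step) is left out because the abstract does not specify it.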

Data availability

The source code is available on GitHub.

Acknowledgements

Figures in this manuscript were drawn or modified by T.N. using draw.io (https://app.diagrams.net).

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Nayerifard, T., Amintoosi, H. & Ghaemi Bafghi, A. Source camera attribution using a rule-based explainable convolutional neural network. Sci Rep (2026). https://doi.org/10.1038/s41598-026-40387-9


DOI: https://doi.org/10.1038/s41598-026-40387-9