
AnEEG: leveraging deep learning for effective artifact removal in EEG data – Nature

Introduction

One of the most important foundations of neuroscience is electroencephalography (EEG), which has produced invaluable insights into the vital functions of the human brain. EEG captures the electrical activity of neurons by measuring voltage fluctuations through a series of electrodes applied to the scalp1. Its versatility extends beyond BCI applications to clinical diagnostics in neurology, where it helps diagnose neurological disorders, among many other uses. Through EEG, researchers have been able to investigate brain function, detect neurological disorders, and even probe human cognition2. The main advantages of EEG are that it is cost-effective, simple, and offers exceptional temporal resolution3, which explains why it is employed in several medical and scientific domains. However, unwanted artifacts seriously limit the usefulness of EEG data: ocular activity, muscle activity, powerline noise, and other environmental factors contaminate the EEG signal during recording.

Artifacts fall into two main categories: biological and environmental. Biological artifacts are generated by the human body through activity such as ocular movements, cardiac rhythms, and muscular contractions. These biological noise sources can distort the recorded EEG signal, making it difficult to interpret or analyze accurately. Environmental artifacts originate from external sources and include powerline interference and inadvertent electrode movement. These artifacts also corrupt the structure of EEG data, adding to the complexity of the artifact removal task4.

These artifacts not only render EEG hard to interpret and unreliable but also complicate meaningful analysis and diagnosis. Failure to address them complicates the interpretation of brain activity and has important clinical implications, since their presence can lead to misdiagnosis and inappropriate treatment. Consequently, substantial efforts have been made in the field of EEG signal processing to create reliable artifact removal methods. Typically, analyzing EEG data involves considering signals within the range of 1–100 Hz. This range includes both the neuronal signals of primary interest and artifact signals, making their separation a daunting task. Artifacts include eye blinks, which typically have low-frequency components below 4 Hz, and muscular activity, with high-frequency characteristics above 13 Hz; both overlap with genuine EEG frequencies5. As such, it is extremely difficult to separate these artifacts from the underlying sources while maintaining the rich neural information contained in an EEG dataset.

Several methods have been proposed to solve this problem and have been improved over the years, but they are not always effective6. These methods fall into two main approaches: those that estimate and remove artifacts using reference channels, and those that decompose EEG signals into alternative domains for artifact extraction. They include regression-based methods7, Blind Source Separation (BSS)8, Wavelet Transform, empirical mode decomposition (EMD)9, and their hybrid variants. Successfully identifying and eliminating artifacts from EEG signals is an important task in both clinical and practical applications. Manually inspecting segments and then identifying and removing those with artifacts causes significant loss of valuable neural data. Therefore, more sophisticated artifact removal methods are needed, particularly for physiological artifacts.

Deep learning, a constantly growing technique, has shown its ability to overcome these challenges in recent years10. As a type of neural network, Generative Adversarial Networks (GANs) have demonstrated success in creating data that is nearly devoid of artifacts. A GAN consists of two main components: a discriminator and a generator. The main job of the discriminator is to differentiate genuine from generated data, while the generator attempts to produce data that is indistinguishable from the genuine data11. This adversarial learning approach has demonstrated remarkable effectiveness in generating artifact-free EEG signals. This study proposes a new approach to artifact removal that enhances the capabilities of GANs by integrating long short-term memory (LSTM) layers. LSTM, a type of recurrent neural network, effectively captures temporal dependencies and contextual information, making it well suited for EEG data processing tasks12, where temporal dynamics are important.

The primary objective of this work is to remove artifacts from EEG using AnEEG, an LSTM-based GAN deep model. The goal was to enable the GAN to generate clean EEG signals that preserve the original neural activity by training the model on a variety of datasets containing EEG recordings with various artefacts. In a GAN architecture, the discriminator examines the quality of the generated data, compares it with ground-truth data, and guides the generator to produce more accurate signals without artifacts.

In the following sections of this paper, the methodology, experiments, and results of the proposed AnEEG model are explained. The effectiveness of the model in removing artifacts under different types of artifacts and recording conditions will also be demonstrated in the following parts. Ultimately, the proposed effort will significantly improve the quality of EEG data, which will increase the reach and significance of neurobiological research and therapeutic applications based on EEG.

Literature survey

In neuroscience research, removing artifacts from EEG signals is an essential task, because artifacts seriously affect the accuracy and reliability of the recorded data. Various approaches have been put forth to deal with this issue, with an emphasis on the creation of sophisticated techniques and clever algorithms for artifact removal. The literature shows that GANs are useful not only as generative models but also as a denoising technique for artifacts. Yang An et al.13 described an automatic denoising method for multichannel EEG signals using a Generative Adversarial Network (GAN). To determine whether the filtered EEG preserves as much of the original effective information as possible, they suggested a new loss function. The range of the EEG signal was limited by using energy threshold-based normalization and sample entropy to identify anomalous signals. For removing noise, they defined a GAN-based blind denoising method in which the discriminator was used to judge whether the noise had been filtered out. In this work, they used the HaLT dataset, recorded from 12 subjects with 960 trials per participant and six imaginary motion states. Data were collected using 22 electrodes at a sampling frequency of 200 Hz.

For practical BCI applications, Eoin Brophy et al.14 proposed a GAN-supervised technique for EEG signal denoising. They created a pipeline that removed artifacts from EEG time series data. In this experiment, the generator was fed noisy EEG data, and the resulting clean EEG signal was compared to it in the discriminator. The generator was composed of two stacked long short-term memory (LSTM) layers with 50 hidden units each, followed by one fully connected output layer. The discriminator is a four-layer, one-dimensional convolutional neural network with a sigmoid function. This experiment made use of the open-source PhysioNet motor/imaging dataset, recorded with a 64-channel BCI2000 system at a 160 Hz sampling rate.

Tian-Jian Luo et al.15 developed a technique for reconstruction of EEG signals using a GAN with a temporal-spatial-frequency loss function and Wasserstein distance. The loss function reconstructed the signal by calculating the mean-squared error from time series and power spectral density features. Three distinct datasets with varying sampling rates were used in this experiment to train and assess the networks. The first was the Action Observation Dataset, published in 2018 and sampled at 250 Hz from 64 channels. The second was the Grasp and Lift (GAL) dataset, collected with a 32-channel “Brain Amp” device sampled at 500 Hz with a sensitivity of 0.1 mV. The third was the Motor Imagery dataset, collected from 12 subjects using 32 channels at a sampling rate of 250 Hz.

Jin Yin et al.16 presented a technique for denoising EEG signals dubbed GCTNet, which included a transformer network and a GAN-guided parallel CNN. The discriminator identified and rectified holistic discrepancies between clean and denoised EEG signals, while the generator, composed of parallel CNN blocks and transformer blocks, captured both global and temporal dependencies. To assess their approach, the researchers used one real dataset and three semi-simulated datasets. The MIT-BIH Arrhythmia Dataset combined clean ECG signals with EEG segments; the EEG DenoiseNet dataset combined clean EEG segments with EMG and EOG segments; and the semi-simulated EEG/EOG dataset involved linearly mixing EOG artefacts with clean EEG signals. The real dataset is made up of 10-s recordings from a patient with epilepsy, sampled at 250 Hz from 21 channels. Compared to other existing approaches, GCTNet showed a considerable performance improvement, achieving a reduction of 11.15% in relative root mean square error (RRMSE) and an improvement of 9.81 in signal-to-noise ratio (SNR).

Phattarapong Sawangjai et al.17 developed an ocular artifact removal framework, ‘EEGENet’, based on generative adversarial networks. The algorithm runs under various conditions, i.e., no eye movement, vertical eye movement, horizontal eye movement, and eye blinking. For the training set, state-of-the-art EOG suppression techniques were used to create a clean target EEG signal that could be used as the ground truth. The cleaned dataset is used to train the discriminator, and raw EEG data is passed into the generator. They used three open-source datasets. The first was the EEG Eye Artefact Dataset, gathered from 50 subjects and pre-processed using a notch filter. The second, BCI Competition IV2b, has three channels and a 250 Hz sampling rate. The third was the Multimodal Signal Dataset, which featured sixty electrodes and a 2500 Hz sampling rate.

Sandhyalati Behera et al.18 proposed a machine-learning-based approach for eliminating artifacts from EEG signals. To address the challenges posed by nonlinear and non-stationary artifactual signals, they utilized a Random Vector Functional Link Network (RVFLN) model. An exponentially weighted Recursive Least Squares (RLS) algorithm was employed to build the adaptive filter within this RVFLN model. To build and validate their model, clean EEG data were sourced from the openly available Mendeley database, while the ECG signals used to create artifacts in the EEG signals were collected from the Physionet database.

A novel approach to artifact removal was put forth by Zainab Jamil et al.19, who classified EEG fragments based on eye movement and then used discrete wavelet transform and independent component analysis to eliminate artifacts. The dataset was obtained under unrestricted conditions from 29 subjects who engaged in activities such as walking, watching videos, and making gestures and facial expressions. EEG signals were processed by extracting thirteen morphological features to identify segments containing eye movements. These segments were then denoised without distorting the signal morphology.

Rajdeep Ghosh et al.20 provided a dependable technique for automatically identifying and eliminating muscular artifacts and eye blinks from EEG recordings. This method combines a long short-term memory (LSTM) network with a k-nearest neighbour (KNN) classifier. A sliding window of 0.5 s is used to find and eliminate artifacts. For every EEG segment, the peak-to-peak amplitude, average rectified value, and variance are determined. Bad segments were identified by the KNN classifier and then processed by the LSTM network to eliminate artifacts. The suggested technique showed outstanding performance in terms of the signal-to-artifact ratio, correlation coefficient, structural similarity, and normalized RMS error, with an accuracy of 97.4% in identifying corrupted segments.

Shalini Stalin et al.21 suggested an approach for identifying and eliminating major movement artifacts from single-channel EEG. A Support Vector Machine (SVM) was used in the detection procedure, and Ensemble Empirical Mode Decomposition (EEMD) was used to extract signal features. Motion artifacts were eliminated with canonical correlation analysis (CCA), and the remaining motion artifacts were removed using the Wavelet Transform (WT) technique. They employed the “Hawks optimization algorithm (HHO)” to optimize the outcomes.

Sakib Mahmud et al.22 proposed the Attention Guided Operational CycleGAN (AGO-CycleGAN) to remove motion artifacts and enhance the quality of corrupted EEG signals. This approach combines PatchGAN-based discriminators with attention-guided Feature Pyramid Network generators that have adjusted bottlenecks, as well as self-generative operational neurons. The model was trained and tested on a single-channel EEG dataset of 23 subjects, using a subject-independent Jackknife cross-validation approach. The method outperformed other techniques and was evaluated through both qualitative and quantitative analyses, employing robust metrics in the temporal and frequency domains.


Abhay B. Nayak et al.23 developed an effective approach for eliminating motion artifacts from EEG signals using the empirical wavelet transform (EWT) technique. Initially, the EEG signals are decomposed into narrowband signals known as intrinsic mode functions (IMFs). The first step involves employing principal component analysis (PCA) to suppress noise from the decomposed IMFs. In the second step, they identify IMFs with noisy components using a variance measure and subsequently remove the identified sections. For their experiments, they utilize publicly available EEG data from the Physionet dataset. Their IMF-variance-based method proves to be more efficient than PCA-based approaches.

A discrete wavelet transform-based technique for eliminating artifacts from EEG signals, blink artefacts in particular, is presented by Wenjia Gao et al.24. The technique consists of multiple steps: first, intervals containing blink artifacts are detected using a fixed-length window and a forward-backward low-pass filter (FB-LPF). The best representative blink signal is then used to build an adaptive bi-orthogonal wavelet (ABOW). Lastly, the discrete wavelet transform (DWT) and ABOW are used to filter the detected signals. The DWT’s decomposition depth is determined automatically by comparing the signals that contain artefacts. They use a semi-simulated EEG dataset for their research, in which they capture 200 Hz signals from 54 healthy people without blinking, then normalise, scale, and add ten blink signals of various amplitudes taken from the dataset.

Yuanzhe Dong et al.25 proposed an EEG denoising technique called Artefact Removal WGAN (AR-WGAN), which used the Wasserstein Generative Adversarial Network (WGAN). Its efficacy is assessed using a publicly available dataset as well as one acquired by the authors. The main contribution is a WGAN-based end-to-end denoising model that can effectively filter massive amounts of unprocessed EEG data. The generator, which consists of 1D convolutional and transposed convolutional layers, produced fake data; the discriminator distinguished it from genuine data by outputting values close to 0 for fake data and around 1 for genuine data. The public EEGdenoiseNet Benchmark Dataset, which comprises semi-synthetic data with clean EEG segments along with segments of ocular and muscular artifacts, is used by the authors for experimental validation. They also use a self-assembled dataset that includes the raw EEG data from four healthy controls.

The reviewed literature is summarised in Table 1, which describes each work’s dataset, methodologies, performance, and limitations.

Generative adversarial network

Proposed in 201426, Generative Adversarial Networks (GANs) are a method for semi-supervised and unsupervised learning. They accomplish this by modeling high-dimensional data distributions implicitly. The two primary parts of a GAN are the discriminator and the generator. The discriminator contrasts the fake data produced by the generator with the genuine data. Most importantly, the generator can only learn through interacting with the discriminator; it does not have direct access to actual images. The discriminator can work with samples taken from a stack of real images as well as generated samples. By identifying whether an image originated from the generator or the real stack, the discriminator receives an error signal. This same error signal, passed through the discriminator, is used to train the generator, guiding it toward producing higher-quality forgeries. The cost of training the GAN is calculated using Equation 127:

$$\begin{aligned} \min_{Gen} \max_{Dis} F(Dis,Gen) &= \mathbb{E}_{x \sim P(X)} [\log Dis(x; \theta_D)] \\ &\quad + \mathbb{E}_{z \sim P(Z)} [\log (1 - Dis(Gen(z; \theta_{\text{Gen}}); \theta_D))] \end{aligned}$$

(1)

Additionally, the loss function of GANs is expressed as:

$$\begin{aligned} L = \min_{Gen} \max_{Dis} [\log Dis(x) + \log (1 - Dis(Gen(z)))] \end{aligned}$$

(2)
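As a rough numerical illustration of Eq. 2, the sketch below estimates the two log terms from a handful of discriminator outputs. The probability values are hypothetical and the function name `gan_value` is not from the paper; it only shows how the value changes when the discriminator separates real from generated samples well versus when it is fully fooled.

```python
import math

def gan_value(d_real, d_fake):
    """Sample estimate of E[log Dis(x)] + E[log(1 - Dis(Gen(z)))].

    d_real: discriminator outputs on genuine samples, each in (0, 1)
    d_fake: discriminator outputs on generated samples, each in (0, 1)
    """
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

# Hypothetical outputs: a discriminator that separates the two sets well ...
confident = gan_value(d_real=[0.9, 0.95, 0.85], d_fake=[0.1, 0.05, 0.2])
# ... and one that cannot tell them apart (outputs 0.5 everywhere).
fooled = gan_value(d_real=[0.5, 0.5, 0.5], d_fake=[0.5, 0.5, 0.5])

print(confident, fooled)
```

The discriminator tries to push this value up, while the generator tries to pull it down toward the fully fooled case, where the value equals −2 log 2.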

Three guiding concepts form the basis of GAN’s operational framework28:

- Enable the generative model to learn so that data can be generated from a random representation.
- Train the model in an adversarial setting where the discriminator and generator are in opposition.
- Train the system as a whole with artificial intelligence algorithms and deep learning neural networks.

Although they also function well for semi-supervised and reinforcement learning, GANs are primarily used for unsupervised machine learning. Together, these elements allow GANs to provide end-to-end solutions across a range of industries, including finance, healthcare, and mechanical engineering.

Materials and methods

EEG data is prone to artifacts, and there is currently no algorithm in the literature that can completely eliminate all artifacts. This work seeks to employ a GAN as an artifact removal method that covers most artifacts because of its reconstructive approach. This study aims to denoise EEG data for sophisticated Brain-Computer Interface (BCI) analysis. The proposed method entails feeding noisy signals through a generator to generate clean versions, which are then compared to actual clean signals by a discriminator. This iterative process facilitates the effective denoising of EEG signals. Figure 3 illustrates the proposed model’s architecture, providing insight into the proposed approach to denoising EEG signals.

Data recording

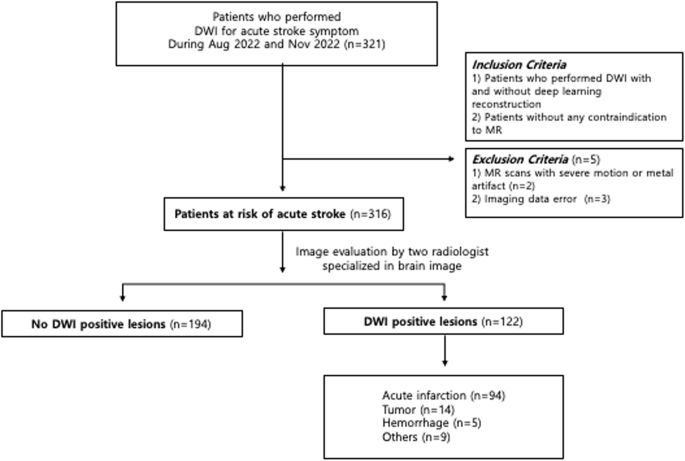

Building a computational dataset is crucial to training a deep learning model. A novel dataset with nine different artifacts was created for training and evaluating the model. The data were gathered from five subjects studying at Gauhati University, Assam, India. The subjects gave written consent for the EEG recording. Subjects performed several tasks while their EEG signals were recorded, including blinking, eye movements, chewing, and others. Every participant took part in multiple recording sessions, providing different samples for efficient training and testing of the proposed model.

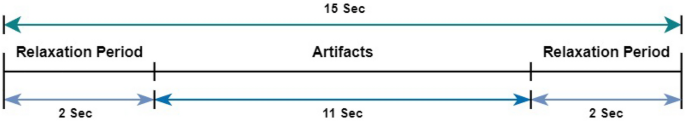

The data recording session began with a 2-s relaxation period, during which the subject was directed to remain motionless and relax. Following the relaxation phase, the subject was guided to blink continuously for 11 s. The process is shown in Fig. 1. The primary goal of this task was to capture the electrical activity that accompanies blinking. Subsequently, another 2-s relaxation period ensued. The process was then repeated, this time directing the participant to intentionally move their eyes for 11 s. The aim of this task was to capture the electrical signals of the brain related to eye movement. This was followed by another 2-s relaxation period, allowing the subject to recover and return to their initial state before moving on to the next phase.

Fig. 1. Data recording session paradigm; the first and last 2 s are relaxation periods.


Throughout the data collection session, this pattern of alternating specific activities and relaxation intervals was repeated. Data were gathered for nine different artifacts: eye blinking, eye movement, chewing, clenching teeth, swallowing movement, tongue movement, jaw movement, head movement, and shoulder movement.

However, during the recording process, subjects naturally blinked their eyes, which introduced an additional artifact. Therefore, the EEG segments contain the main artifact of interest along with the eye blink artifact. This was considered in the artifact removal process.

The data were recorded from 32 channels, placed according to the international 10–20 system, at a sampling frequency of 128 Hz, using a wearable 32-channel EMOTIV-EPOC Flex Gel Kit. A total of 117 s of data were recorded for each subject, resulting in a total data length of 14,976 samples per subject.

Data pre-processing

Preprocessing is an essential part of EEG data analysis: it improves data quality and prepares the data for more effective analysis. Because the main characteristics of EEG signals are usually found in the 0.5 to 40 Hz range, the raw EEG data was filtered to this range. This filtering was effective in removing high-frequency noise, such as power-line noise at 50 or 60 Hz, while also correcting baseline drift29.

Subsequently, the original signals were normalized to limit the signal range. During activities such as eye blinking, facial muscle movement, or other artifacts, the amplitude values of EEG channels can fluctuate suddenly and strongly. Unbounded EEG values can destabilise the GAN model because different subjects may show different amplitude ranges in their data. To address this issue, min-max normalization is used30. This method scales the EEG to a specified range, typically from −1 to 1, ensuring consistency across all subjects.
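A minimal sketch of min-max scaling to the range [−1, 1] is given below. Whether the paper normalises per channel, per subject, or per recording is not stated, so the per-signal scaling here, the function name `min_max_normalize`, and the sample values are assumptions for illustration only.

```python
def min_max_normalize(signal, low=-1.0, high=1.0):
    """Linearly rescale a sequence of samples to the interval [low, high]."""
    lo, hi = min(signal), max(signal)
    if hi == lo:
        # A flat signal has no range to stretch; map it to the midpoint.
        return [0.5 * (low + high)] * len(signal)
    scale = (high - low) / (hi - lo)
    return [low + (v - lo) * scale for v in signal]

# Hypothetical amplitudes with one large blink-like excursion.
channel = [12.0, -30.0, 250.0, 4.0]
normalized = min_max_normalize(channel)
print(normalized)
```

After scaling, the smallest sample maps to −1 and the largest to 1, which matches the output range of a tanh generator layer.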

After normalization, each artifact is clipped from the normalized signal. This separation enables efficient management and independent analysis of the artifacts while preserving their characteristics, temporal patterns, and associated features.

In the dataset used, each artifact spans 11 s and is preceded and followed by a 2-s relaxation period, with the final 2 s overlapping with the relaxation period of the subsequent artifact, resulting in a total clip duration of 15 s per artifact.

The EEG was captured from thirty two channels at a 128 Hz sampling rate. To extract all artifacts, the number of samples is calculated by multiplying the sampling rate with the length of the artifact plus the relaxation period (11 + 2 + 2). Mathematically, the total number of frames to be clipped (F) is determined as follows:

$$\begin{aligned} F = T \times 128 \end{aligned}$$

(3)

Where F represents the total frames to be clipped and T represents the duration of the activity.
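The arithmetic behind Eq. 3 can be checked with a few lines of Python. The function name `frames_to_clip` is illustrative, not from the paper; the durations and the 128 Hz rate are the values stated in the text.

```python
SAMPLING_RATE_HZ = 128  # sampling frequency stated in the text

def frames_to_clip(duration_s, fs=SAMPLING_RATE_HZ):
    """Eq. 3: number of frames F for a window of T seconds."""
    return duration_s * fs

# One clipped artifact: 2 s relaxation + 11 s activity + 2 s relaxation.
artifact_window_s = 2 + 11 + 2
frames = frames_to_clip(artifact_window_s)   # 15 * 128 = 1920

# Whole session per subject: 117 s, as reported in the data recording section.
session_samples = frames_to_clip(117)        # 117 * 128 = 14976

print(frames, session_samples)
```

The second value reproduces the 14,976 samples per subject reported for the full recording.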

Fig. 2. 5-s EEG segments of blinking, eye movements, chewing, and clenching teeth from sub1, sub2, sub3, and sub4.


Next, a 5-s window was adopted for segmentation, and each 15-s artifact clip was divided into non-overlapping 5-s segments. This yields three segments per channel, or 96 segments across all 32 channels. With data collected from five subjects, a total of 480 segments are obtained for each artifact type. Fig. 2 illustrates a segment of the eye blink, eye movement, chewing, and clench teeth artifacts from the first four subjects.

The segments are divided into two groups, adopting a ratio of 80:20 for training and testing. Specifically, segments from four subjects were used for training, and the segments from the fifth subject were held out for testing, so the model is evaluated on a subject it has not seen. Eye blink, eye movement, chewing, and clench teeth artifact segments from the four subjects are used for training, while the same artifact segments from the fifth subject are reserved for testing. Overall, 1536 EEG segments are allocated for training, with an additional 384 segments designated for testing.
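The segment counts quoted above follow directly from the window length, channel count, and subject split. The sketch below reproduces them; the helper `split_into_segments` and the all-zero placeholder clip are illustrative assumptions, not the authors' code.

```python
FS = 128          # sampling rate in Hz
WINDOW_S = 5      # segment length in seconds
N_CHANNELS = 32
N_ARTIFACT_TYPES = 4   # eye blink, eye movement, chewing, clench teeth

def split_into_segments(samples, window=WINDOW_S * FS):
    """Cut one channel into non-overlapping windows, dropping any remainder."""
    last_start = len(samples) - window
    return [samples[i:i + window] for i in range(0, last_start + 1, window)]

# Placeholder 15-s clip for a single channel (1920 samples).
clip = [0.0] * (15 * FS)
segments = split_into_segments(clip)

per_subject = len(segments) * N_CHANNELS        # segments per artifact per subject
per_artifact = per_subject * 5                  # five subjects
train_total = per_subject * N_ARTIFACT_TYPES * 4  # four training subjects
test_total = per_subject * N_ARTIFACT_TYPES * 1   # one held-out subject

print(len(segments), per_subject, per_artifact, train_total, test_total)
```

Holding out a whole subject, rather than shuffling segments, keeps the 80:20 ratio while ensuring that no test segment shares a subject with the training data.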

Model architecture

The proposed investigation combined a recurrent neural network architecture with the Generative Adversarial Network (GAN) concept first presented by Ian Goodfellow31. As demonstrated in Fig. 3A, the design consists of two primary parts: the discriminator and the generator. Figure 3B,C show the architecture of the generator and the discriminator, respectively.

Fig. 3. (A) Architectural design of the model, (B) generator architecture with layered model, (C) discriminator architecture with layered model.


The generator’s main goal was to generate clean EEG signals from raw input data that had been distorted by things like teeth clenching, eye blinking, and movement. On the other hand, the discriminator receives training to discern between the clean ground truth signals and the produced signals.

A wavelet decomposition (DWT) artifact removal method was applied to the artifacted EEG signals \(S^{\text{artifacted}}\) in the training dataset to generate the clean target EEG signal \(S\). These clean signals served as the reference baseline. Subsequently, \(S^{\text{artifacted}}\) was used along with the corresponding clean signal \(S\) as input to train the generator. The aim was to produce an artifact-free signal \(S'\).

The wavelet denoising method used a two-step cleaning technique. First, for each row of the data matrix, the average trend was subtracted from the signal using Savitzky-Golay filtering. This initial step helps eliminate any underlying trends that might obscure the EEG data. Then the wavelet coefficients of each row were thresholded to remove noise. This involved decomposing the signal using the wavelet transform, determining the threshold for the detail coefficients, and reconstructing the signal from the thresholded coefficients. The threshold was set to the standard deviation of the third-level detail coefficients, multiplied by a factor of 0.8.
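The thresholding step can be sketched in plain Python as follows. The text does not name the wavelet family, the thresholding rule, or which detail levels are thresholded, so the Haar wavelet, soft thresholding, three decomposition levels, and applying one threshold to every detail level are all assumptions made for illustration; the detrending step is omitted. Only the threshold rule (0.8 times the standard deviation of the third-level detail coefficients) comes from the description above.

```python
import math
import statistics

SQRT2 = math.sqrt(2.0)

def haar_step(x):
    """One level of the orthonormal Haar transform (len(x) must be even)."""
    approx = [(x[i] + x[i + 1]) / SQRT2 for i in range(0, len(x), 2)]
    detail = [(x[i] - x[i + 1]) / SQRT2 for i in range(0, len(x), 2)]
    return approx, detail

def haar_inverse_step(approx, detail):
    """Invert haar_step, interleaving the reconstructed sample pairs."""
    out = []
    for a, d in zip(approx, detail):
        out.extend([(a + d) / SQRT2, (a - d) / SQRT2])
    return out

def soft_threshold(coeffs, thr):
    """Shrink each coefficient toward zero by thr (assumed rule)."""
    return [math.copysign(max(abs(c) - thr, 0.0), c) for c in coeffs]

def wavelet_denoise(signal, factor=0.8):
    """Three-level Haar denoising; len(signal) must be divisible by 8."""
    approx, details = list(signal), []
    for _ in range(3):
        approx, d = haar_step(approx)
        details.append(d)
    # Threshold: factor times the std of the third-level detail coefficients.
    thr = factor * statistics.pstdev(details[2])
    for d in reversed(details):
        approx = haar_inverse_step(approx, soft_threshold(d, thr))
    return approx

# Hypothetical 5-s segment (640 samples): slow wave plus a fast ripple.
noisy = [math.sin(0.05 * i) + 0.2 * math.sin(2.5 * i) for i in range(640)]
clean_target = wavelet_denoise(noisy)
print(len(clean_target))
```

In practice a wavelet library would replace the hand-written transform; the point of the sketch is only the order of operations: decompose, derive the threshold from the third detail level, shrink the details, and reconstruct.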

To analyse sequential data, the generator model was constructed using LSTM layers, which can identify long-term relationships in data sequences, followed by a parametric rectified linear unit (PReLU) activation function. The architecture is described in Table 2. Using both the input and the hidden state at each timestep, the LSTM produces its final output in a recurrent feedback loop. PReLU introduces a learnable parameter so that the activation function can adaptively learn the rectification parameter (the slope applied to negative inputs) for each neuron during training. This helps to reduce the vanishing gradient problem that deep networks frequently face32. The last layer of the model is a Dense layer with a ‘tanh’ activation function, which converts the features learned by the LSTM layers into the final output format. The ‘tanh’ activation function constrains the output to the range [−1, 1], which is typical for generator outputs in GANs. The generator output is then compared with the real data to calculate the root mean square error (RMSE).

LSTM layers make up most of the discriminator’s architecture. The LSTM layer is followed by a LeakyReLU activation function with a small negative slope (alpha = 0.2). The number of LSTM units decreases from 1028 to 64. A Flatten layer is used after the LSTM layer, followed by a Dense layer with a single neuron. The sigmoid activation function is applied to the Dense layer’s output. Figure 3 describes the model architecture.

Model training and implementation

In the phase of model training, the generator was fed with training data, from which the generator generated fake samples. These generated data were then sent to the discriminator block, where clean data of the corresponding artifacted segments were already present.

Specifically, 80% of the data was set aside for training, and this data was filtered using the wavelet decomposition technique beforehand. Thus, the generated signal is compared with its respective clean signal. The discriminator loss function was set to binary cross-entropy, while the generator loss function was set to mean squared error (MSE). Both loss functions were optimized using the Adam optimizer, configured with a learning rate of 1e−3, \(\beta_1\) at 0.9, \(\beta_2\) at 0.999, and \(\epsilon\) at 1e−08.

To prevent the discriminator from being updated during the generator training phase, it is set to non-trainable. The discriminator compares the generated data with the clean data at the end of each epoch to determine the MSE loss. The generator weights are then updated based on this loss. Additionally, the GAN loss was weighted with [1.0, 5e−4], applied to MSE and binary cross-entropy, respectively. This process helped the generator produce a signal \(S'\) that closely resembled the ground truth signal \(S\). The model was trained with a batch size of 128 over 500 epochs.
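The weighted objective described above can be written out as a small sketch. The weights [1.0, 5e−4] come from the text; labelling generated samples as "real" (target 1) in the adversarial term is the usual GAN convention and is assumed here, as are the function names and the example values.

```python
import math

def mse(target, output):
    """Mean squared error between clean target and generated signal."""
    return sum((t - o) ** 2 for t, o in zip(target, output)) / len(target)

def binary_cross_entropy(labels, probs, eps=1e-7):
    """Mean binary cross-entropy; probabilities are clipped for stability."""
    total = 0.0
    for y, p in zip(labels, probs):
        p = min(max(p, eps), 1.0 - eps)
        total -= y * math.log(p) + (1.0 - y) * math.log(1.0 - p)
    return total / len(labels)

def generator_loss(clean, generated, disc_probs, weights=(1.0, 5e-4)):
    """weights[0] * MSE(clean, generated) + weights[1] * adversarial BCE.

    disc_probs are the discriminator's outputs on the generated samples;
    the generator is rewarded when they approach 1 (assumed convention).
    """
    adversarial = binary_cross_entropy([1.0] * len(disc_probs), disc_probs)
    return weights[0] * mse(clean, generated) + weights[1] * adversarial

# Hypothetical two-sample signal and an undecided discriminator (0.5).
loss = generator_loss(clean=[0.0, 1.0], generated=[0.0, 0.0], disc_probs=[0.5])
print(loss)
```

With these weights the reconstruction term dominates, so the adversarial term acts as a small regulariser rather than the main training signal.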


Once training is complete, the trained generator and discriminator models are obtained. However, only the generator is needed to clean the signal; the discriminator’s main job is to deliver the loss that directs the generator to adjust its weights and produce more precise signals. After training, the 5-s segmented, normalized data that had been set aside for testing (20% of the data) is fed into the trained generator to produce cleaned EEG signals, making it possible to evaluate the performance of the model on unseen data.

Evaluation methods

Five metrics are primarily utilised to assess the performance of the signal produced by the suggested model: CC (Correlation Coefficient), RMSE (Root Mean Square Error), NMSE (Normalised Mean Square Error), Signal to Noise Ratio (SNR) and Signal to Artifact Ratio (SAR).

Normalized mean squared error (NMSE)

NMSE is used to confirm whether the signal generated by the model is similar to the actual EEG signal. Complementing visual examination, it measures the average squared difference between the raw and generated signals, normalised by the energy (squared L2 norm) of the original signal. Mathematically, it is presented as:

$$\begin{aligned} \text{MSE}= & \frac{{\Vert \text{orgSig}(:) - \text{recSig}(:) \Vert }_{2}^{2}}{\text{length}(\text{orgSig}(:))} \end{aligned}$$

(4)

$$\begin{aligned} \text{NMSE}= & \frac{\text{MSE}}{\Vert \text{orgSig}(:) \Vert _{2}^{2}} \end{aligned}$$

(5)

Here, orgSig(:) represents the original signal, recSig(:) represents the reconstructed signal.

NMSE provides a measurement of the relative error between the original (raw signal) and generated signals, considering the scale of the signals. NMSE values close to zero indicate a good match between the original (raw signal) and generated signals, while higher values indicate larger discrepancies.
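A minimal sketch of Eqs. (4) and (5), written exactly as stated (the MSE divided by the squared \(\ell_2\) norm of the original signal), is given below. The function names and the toy signals are illustrative assumptions.

```python
def mse(org_sig, rec_sig):
    """Eq. (4): squared L2 norm of the difference divided by the length."""
    return sum((o - r) ** 2 for o, r in zip(org_sig, rec_sig)) / len(org_sig)


def nmse(org_sig, rec_sig):
    """Eq. (5): MSE divided by the squared L2 norm of the original signal."""
    energy = sum(o * o for o in org_sig)
    return mse(org_sig, rec_sig) / energy


# Toy signals (assumed values): only the last sample differs.
org = [1.0, -2.0, 3.0, -4.0]
rec = [1.0, -2.0, 3.0, -3.0]
print(nmse(org, rec))
```

An all-zero original signal has zero energy, so the division would fail; real recordings would need a guard for that case.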

Root mean squared error (RMSE)

Without normalization, the RMSE offers a metric comparable to the NMSE. It represents the square root of the average squared difference between corresponding samples of the original and reconstructed signals. Mathematically, it is expressed as33:

$$\begin{aligned} \text{MSE}= & \frac{\sum _{i=1}^{N} (\text{data}_i - \text{estimate}_i)^2}{\text{numel}(\text{data})} \end{aligned}$$

(6)

$$\begin{aligned} \text{RMSE}= & \sqrt{\text{MSE}} \end{aligned}$$

(7)

Here, \(\text{data}_i\) and \(\text{estimate}_i\) represent the \(i\)-th elements of the data and estimate vectors, respectively. N is the total number of elements in the data vector, which is the value returned by numel(data).

RMSE quantifies the average size of the errors between the generated and original signals. It presents an absolute measure of the error that is not normalised by the scale of the signals. Lower RMSE values indicate better agreement between the original (raw) and reconstructed signals.
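Eqs. (6) and (7) translate directly into a few lines of code. The sketch below uses a hypothetical function name and toy vectors.

```python
import math


def rmse(data, estimate):
    """Eqs. (6)-(7): square root of the mean squared sample-wise difference."""
    n = len(data)
    return math.sqrt(sum((d - e) ** 2 for d, e in zip(data, estimate)) / n)


# Toy vectors (assumed values): every sample is off by exactly one unit.
print(rmse([0.0, 0.0, 0.0, 0.0], [1.0, 1.0, 1.0, 1.0]))
```

Because no normalisation is applied, the result is expressed in the same units as the signal samples.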

Correlation coefficient (CC)

Correlation Coefficient (CC) is employed to evaluate the model’s capability to remove artifacts while maintaining the brain signal data. It measures how closely the original (raw) and reconstructed signals are linearly related to each other. Mathematically, CC is expressed as:

$$\text{CC} = \frac{\sum _{i=1}^{N} ({Sig}_{i} - \bar{Sig}) ({Sig}^{\prime}_{i} - \bar{Sig}^{\prime})}{\sqrt{\sum _{i=1}^{N} ({Sig}_{i} - \bar{Sig})^2} \sqrt{\sum _{i=1}^{N} ({Sig}^{\prime}_{i} - \bar{Sig}^{\prime})^2}}$$

(8)

Here, \({Sig}_{i}\) and \({Sig}^{\prime}_{i}\) represent the \(i\)-th samples of the original (raw) and generated signals, respectively, and \(\bar{Sig}\) and \(\bar{Sig}^{\prime}\) represent the means of the original and generated signals, respectively. The total number of samples is represented by N.

CC measures how well the variation of one signal predicts the variation of the other. Its values range from −1 to 1, where 0 denotes no correlation, −1 represents a perfect negative correlation, and 1 represents a perfect positive correlation. Higher CC values indicate stronger linear agreement between the original (raw) and reconstructed signals. Thus, CC helps assess the model’s ability to preserve essential brain signal data while removing artifacts.
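Eq. (8) is the Pearson correlation coefficient, and a minimal sketch of it follows. The function name and the toy signals are assumptions for illustration.

```python
import math


def correlation_coefficient(sig, sig_prime):
    """Eq. (8): Pearson correlation between original and generated signals."""
    n = len(sig)
    mean_s = sum(sig) / n
    mean_p = sum(sig_prime) / n
    numerator = sum((s - mean_s) * (p - mean_p) for s, p in zip(sig, sig_prime))
    denominator = math.sqrt(sum((s - mean_s) ** 2 for s in sig)) * math.sqrt(
        sum((p - mean_p) ** 2 for p in sig_prime)
    )
    return numerator / denominator


# Toy signals (assumed values): a scaled copy and a reversed copy.
print(correlation_coefficient([1.0, 2.0, 3.0], [2.0, 4.0, 6.0]))
print(correlation_coefficient([1.0, 2.0, 3.0], [3.0, 2.0, 1.0]))
```

CC is insensitive to amplitude scaling and offset, which is why it complements RMSE and NMSE rather than replacing them. A constant signal makes the denominator zero and would need separate handling.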

Signal to noise ratio (SNR)

SNR is employed to evaluate the effectiveness of artifact removal by comparing the signal power within a specific frequency band to the noise power. The formula used for calculating the SNR in decibels (dB) is:

$$\begin{aligned} \text{SNR}_{\text{band}} = 10 \times \log _{10}\left( \frac{P_{\text{signal}}}{P_{\text{noise}}}\right) \end{aligned}$$

(9)

\(P_{\text{signal}}\) is the power of the EEG signal within a particular frequency band, calculated by summing the power spectral density (PSD) values within the frequency band of interest.

$$\begin{aligned} P_{\text{signal}} = \sum _{k \in \text{Band}} |Y(k)|^2 \end{aligned}$$

(10)

\(Y(k)\) represents the FFT coefficient at the \(k^{\text{th}}\) frequency bin.

The power of the noise is then calculated by subtracting the signal power from the total power of the entire signal:

$$\begin{aligned} P_{\text{noise}} = \sum _{k=0}^{N-1} |Y(k)|^2 - P_{\text{signal}} \end{aligned}$$

(11)

In the equation, \(\sum _{k=0}^{N-1} \left| Y(k) \right| ^2\) is the total power of the signal, summed across all frequency bins.

A higher SNR indicates a cleaner signal with less residual noise, confirming the model’s effectiveness in separating the desired EEG signal from unwanted artifacts35. This metric demonstrates how well the artifact removal process enhances the signal quality, ensuring that the EEG data is more representative of the true neural activity, with minimal distortion from external noise sources.
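The band-wise SNR of Eqs. (9) to (11) can be sketched with a plain discrete Fourier transform. Several details below are assumptions, since the text does not fix them: the 64 Hz sampling rate of the toy signal, the half-open band edges (lower edge included, upper edge excluded), and the choice to assign each of the N bins to a band by its absolute frequency so that mirrored negative-frequency bins count toward the same band. The function names are hypothetical, and a library FFT would normally replace the slow `dft` helper.

```python
import cmath
import math


def dft(x):
    """Naive O(N^2) discrete Fourier transform, adequate for short examples."""
    n = len(x)
    return [
        sum(x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]


def band_snr_db(x, fs, band):
    """Eqs. (9)-(11): in-band power over out-of-band power, in dB."""
    n = len(x)
    lo, hi = band
    total_power = 0.0
    p_signal = 0.0
    for k, y in enumerate(dft(x)):
        # Absolute frequency of bin k (bins above N/2 mirror the lower ones).
        freq = k * fs / n if k <= n // 2 else (n - k) * fs / n
        power = abs(y) ** 2
        total_power += power
        if lo <= freq < hi:
            p_signal += power
    p_noise = total_power - p_signal
    return 10.0 * math.log10(p_signal / p_noise)


# Toy signal (assumed): 1 s at 64 Hz with a 10 Hz component of amplitude 1
# and a 20 Hz component of amplitude 0.5.
fs = 64
x = [
    math.sin(2 * math.pi * 10 * t / fs) + 0.5 * math.sin(2 * math.pi * 20 * t / fs)
    for t in range(fs)
]
alpha_snr = band_snr_db(x, fs, (8.0, 13.0))
print(alpha_snr)
```

In this toy case the in-band (Alpha) component carries four times the power of the out-of-band component, so the result is 10·log10(4), roughly 6 dB. Note that under this definition every out-of-band component counts as noise, including genuine activity in other bands.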

Signal to artifact ratio (SAR)

Signal-to-Artifact Ratio (SAR) measures the effectiveness of a methodology by comparing the variance of the artifact signal to the variance of the residual artifact after applying a given methodology. Mathematically, SAR is expressed as:

$$\begin{aligned} \text{SAR} = 10 \times \log _{10} \left( \frac{\sigma (A)}{\sigma (A - A')}\right) \end{aligned}$$

(12)

where \(A\) represents the artifact signal, and \(A'\) represents the cleaned signal. A higher SAR value indicates more effective artifact removal, suggesting that the resulting signal is cleaner and more representative of the underlying brain activity.
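Eq. (12) can be sketched as below. Following the prose description, \(\sigma(\cdot)\) is taken here to be the variance; that reading, the function names, and the toy signals are assumptions, and the code implements the equation literally as written.

```python
import math


def variance(x):
    """Population variance of a sequence of samples."""
    mean = sum(x) / len(x)
    return sum((v - mean) ** 2 for v in x) / len(x)


def sar_db(artifact_sig, cleaned_sig):
    """Eq. (12): 10*log10( var(A) / var(A - A') ), with sigma read as variance."""
    residual = [a - c for a, c in zip(artifact_sig, cleaned_sig)]
    return 10.0 * math.log10(variance(artifact_sig) / variance(residual))


# Toy signals (assumed values): var(A) = 8 and var(A - A') = 0.5.
artifact = [0.0, 4.0, 0.0, -4.0]
cleaned = [0.0, 3.0, 0.0, -3.0]
print(sar_db(artifact, cleaned))
```

If \(\sigma\) were instead read as the standard deviation, every SAR value in dB would be halved, so the interpretation matters when comparing against other work.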

Results and discussion

Following the completion of all training and testing phases with success, the proposed denoising model demonstrated impressive efficacy in removing noise (Artifacts) from EEG signals while preserving essential brain signal data.

The proposed model underwent rigorous optimization using 80% of the dataset during the training stage. The trend of decreasing loss values over time indicates that the model demonstrated consistent convergence. This implies that during the training stage, the model successfully learned to denoise EEG signals.

Utilizing the remaining 20% of the dataset in the testing phase, the model exhibited robust performance. The quantitative assessment was then performed using the Normalized Mean Squared Error (NMSE), Root Mean Squared Error (RMSE), Correlation Coefficient (CC), Signal to Noise Ratio (SNR), and Signal to Artifact Ratio (SAR). These metrics provided a comprehensive evaluation of how closely the generated signals matched the original EEG signals, reflecting a strong agreement between them.

In Fig. 4, segments of each artifact (eye blink, eye-movement, chewing, clench teeth) are presented. The figure exhibits the raw EEG signals alongside those generated by the proposed model and signals cleaned using wavelet decomposition methods.

Distinct characteristics of each artifact are observable: eye blink artifacts are denoted by high sudden spikes in the EEG signals, while eye-movement artifacts display square waves. Chewing artifacts appear as rhythmic and repeated patterns with moderate to high amplitude, and clenching teeth artifacts are typified by high-amplitude spikes.

The raw EEG signals are shown in green, while the signals highlighted in red and blue depict the corrected signals after artifact removal. These denoised signals demonstrate how well the method reduces artifacts while preserving the integrity of the EEG data. The removal of artifacts results in smoother waveforms, facilitating more accurate analysis of underlying brain activity. Comparing the two signals shows that, after denoising, the red signal successfully reduces the abrupt oscillations found in the green signal. The denoised signals provide strong evidence of the model’s capability to preserve essential brain activity while minimizing unwanted noise, thus ensuring a cleaner and more accurate representation of the EEG signals.

Raw EEG signals (Green), Wavelet Denoised EEG signals (Blue) and generated signal by the proposed model (Red) of Eye Blink and eye-movement, Chewing and Clench Teeth artifacts.


In Fig. 5, an EEG signal displaying both the presence of Eye Blink artifact and its clean counterpart across 32 channels is showcased. The clean EEG signal is represented by the green signals, while the artifact affected EEG signal is depicted in orange. This visualization provides a clear comparison between the noisy and clean signals. Additionally, the successful removal of other artifacts, such as eye-movement, chewing, and teeth clenching, from the EEG signals has been documented and made available for review. These results, along with the corresponding EEG images, have been uploaded to the GitHub repository, as referenced in34. This allows for further examination and validation of the model’s performance across various types of artifact.

Eye Blink Artifact (Orange) vs. Clean Signal (Green) Across 32 Channels.


In the quantitative analysis, which included assessing NMSE, RMSE, CC, SNR and SAR, both the generated signals and the signals cleaned by wavelet decomposition were compared with the original signals. Subsequently, the GAN-based signals and the wavelet-based signals were compared with each other to determine which performed better.

For examining the characteristics of the signals, RMSE was used, which gives information about the average magnitude of the errors between the generated or wavelet-decomposed signals and the original signals. By focusing on the deviations in signal reconstruction, RMSE helps quantify how closely the denoised signals resemble the true, artifact-free signals.


In Table 3, the RMSE value for each electrode was calculated, comparing the original signal with the wavelet-based signal and the original signal with the signal generated by the model. The RMSE values for the generated signals are smaller than those for the wavelet-denoised signals for most electrodes, although some exceptions are observed, indicating that the proposed model generally preserves the integrity of the EEG signals better. The average RMSE for the generated eye blink, eye-movement, chewing, and clench teeth signals is 0.0739, 0.0336, 0.0609, and 0.0345, respectively, while the average RMSE for the wavelet-based signals is 0.0753, 0.0353, 0.0621, and 0.0355, respectively. That is, the signals generated by the proposed model generally agree more closely with the original signals than the wavelet-based signals do. Lower RMSE values indicate better agreement between the original (raw) and reconstructed signals. In conclusion, the signals generated by the proposed model possess more accurate characteristics than those obtained through wavelet methods, although some electrodes may require further investigation.


After assessing the RMSE values, the similarity between the original and noise-free signals using NMSE was evaluated. By calculating NMSE, the model’s ability to accurately reconstruct the clean signal from the noisy input is assessed.

NMSE was computed both between the original signal and the wavelet-denoised signal and between the original signal and the reconstructed signal. The NMSE values of the generated signals are closer to zero than those of the wavelet-denoised signals. Table 4 presents all the calculated NMSE values. The NMSE values were calculated for all 32 electrodes and averaged: the average NMSE of the signal generated by the proposed model for eye blink, eye-movement, chewing, and clench teeth is 0.0320, 0.0195, 0.0131, and 0.0089, respectively, while the average NMSE of the wavelet-based signals is 0.0388, 0.0257, 0.0175, and 0.0124, respectively. This indicates that the signals produced by the proposed model are more similar to the original signal than the wavelet-based denoised signals are. NMSE values close to zero imply a good match between the original and reconstructed signals, while higher values signify greater discrepancies.


These results demonstrate that, in terms of maintaining the similarity between the generated and original noise-free signals, the proposed model performed better than the wavelet-based methods, as seen by the generated signals’ lower average NMSE values.
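To put the reported averages in relative terms, the short calculation below derives the percentage reduction in average NMSE of the proposed model with respect to wavelet denoising. The input numbers are the averages quoted in the text; the percentages are derived here and are not reported in the paper, and the helper name is hypothetical.

```python
artifacts = ["Eye Blink", "Eye-movement", "Chewing", "Clench Teeth"]
nmse_model = [0.0320, 0.0195, 0.0131, 0.0089]    # averages quoted in the text
nmse_wavelet = [0.0388, 0.0257, 0.0175, 0.0124]  # averages quoted in the text


def relative_reduction(baseline, value):
    """Fraction by which `value` is lower than `baseline`."""
    return (baseline - value) / baseline


reductions = {
    name: relative_reduction(w, m)
    for name, w, m in zip(artifacts, nmse_wavelet, nmse_model)
}
for name, r in reductions.items():
    print(f"{name}: {100.0 * r:.1f}% lower average NMSE than wavelet denoising")
```

The derived reductions lie between about 17.5% (Eye Blink) and 28.2% (Clench Teeth).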

After the NMSE evaluation, the model was assessed based on the likeness between the original and denoised signals by calculating the correlation coefficient (CC). CC was calculated both between the original signal and the reconstructed signal, as well as between the original signal and the wavelet-denoised signal. All calculated values for the 32 channels are shown in Table 5. The average CC for the generated signal is 0.6985, 0.6440, 0.6244, and 0.61 for eye blink, eye-movement, chewing, and clenching teeth signals, respectively, indicating a strong linear agreement with the original signals. In contrast, the average CC for the wavelet-based signals was 0.6280, 0.5638, 0.5686, and 0.5685 respectively.

A higher CC value indicates a more robust linear relationship between the original and reconstructed signals. The higher average CC values found for the generated signals, compared with the wavelet-based denoised signals, indicate a tighter alignment with the original signal. This shows the strength of the model in maintaining the linear relationship between the original and generated signals, confirming its advantage over the wavelet-based method.

To evaluate the effectiveness of the proposed model, the Signal-to-Noise Ratio (SNR) was also evaluated. SNR measures the strength of the signal relative to the level of noise, making it an essential indicator of how well the noise has been removed and how much the signal power has been enhanced in the signals generated by the proposed model. The SNR was calculated across all 32 electrodes for all artifacts: Eye Blink, Eye-movement, Chewing, and Clench Teeth. The signals were divided into four frequency bands: Theta (4–8 Hz), Alpha (8–13 Hz), Beta (13–30 Hz), and Gamma (30–50 Hz).


For the Eye Blink artifact (refer Table 6), the proposed model achieved average SNR values of 7.71, 10.62, 13.08, and 10.42 for the Theta, Alpha, Beta, and Gamma bands, respectively. In comparison, the raw artifacted signal yielded lower SNR values of 3.24, 3.91, 2.23, and −2.95. The wavelet-based (DWT) denoised signal showed improvements with SNR values of 6.72, 10.26, 10.62, and 10.63, remaining below the proposed model in the Theta, Alpha, and Beta bands and marginally above it in the Gamma band.

For the eye-movement artifact (refer Table 7), the proposed model again outperformed the raw and DWT-cleaned signals. The generated signal achieved average SNR values of 7.10, 6.64, 13.54, and 13.57 across the Theta, Alpha, Beta, and Gamma bands, respectively. In contrast, the raw artifacted signal had much lower SNR values of 3.61, −0.44, 2.69, and 0.54, while the DWT-cleaned signal reached 6.20, 5.94, 12.25, and 12.98.

For the Chewing artifact (refer Table 8), the proposed model consistently outperformed both the raw and DWT methods. The average SNR values for the generated signal were 5.51, 6.48, 12.60, and 14.73 in the Theta, Alpha, Beta, and Gamma bands, respectively. The raw artifacted signal had lower SNR values of 3.16, 0.59, 5.37, and 5.50, while the DWT-cleaned signal yielded 3.09, 5.05, 11.69, and 14.37.

Lastly, for the Clench Teeth artifact (refer Table 9), the proposed model showed superior performance with SNR values of 5.51, 7.27, 13.01, and 14.55 in the Theta, Alpha, Beta, and Gamma bands, respectively. The raw artifacted signal exhibited SNR values of 5.09, 3.61, 7.08, and 5.73, while the DWT method resulted in 3.64, 6.61, 12.16, and 13.77.

Overall, the results show that the proposed model achieves higher average SNR values than the raw signals in every frequency band and higher values than the DWT-cleaned signals in all but one case (the Gamma band for Eye Blink, 10.42 vs. 10.63). There is a general trend where the SNR increases with frequency, indicating that the signal quality improves relative to the noise as frequency increases. This suggests that the higher frequency components of the signal are more distinct and less affected by noise in the proposed model compared to the DWT approach. All the values are plotted separately for each artifact for better comparison: the Eye Blink plot is shown in Fig. 6A, the eye-movement plot in Fig. 6B, the Chewing plot in Fig. 6C, and the Clench Teeth plot in Fig. 6D. In these plots, orange represents the GAN-based signal, grey represents the DWT-based signal, and blue represents the raw signal.
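The band-wise comparison can be checked directly from the average SNR values quoted above for Tables 6 to 9. The sketch below computes the gain of the proposed model over DWT for each artifact and band and lists any case where the gain is not positive. The data are the reported averages; the variable names are illustrative assumptions.

```python
bands = ["Theta", "Alpha", "Beta", "Gamma"]

# Average SNR values (dB) as quoted in the text: (proposed model, DWT).
snr = {
    "Eye Blink": ([7.71, 10.62, 13.08, 10.42], [6.72, 10.26, 10.62, 10.63]),
    "Eye-movement": ([7.10, 6.64, 13.54, 13.57], [6.20, 5.94, 12.25, 12.98]),
    "Chewing": ([5.51, 6.48, 12.60, 14.73], [3.09, 5.05, 11.69, 14.37]),
    "Clench Teeth": ([5.51, 7.27, 13.01, 14.55], [3.64, 6.61, 12.16, 13.77]),
}

exceptions = []
for artifact, (model, dwt) in snr.items():
    for band, m, d in zip(bands, model, dwt):
        gain = m - d  # positive when the proposed model has the higher SNR
        print(f"{artifact:13s} {band:5s} gain over DWT: {gain:+.2f} dB")
        if gain <= 0:
            exceptions.append((artifact, band))

print("Cases where DWT is not exceeded:", exceptions)
```

Among the sixteen artifact and band combinations, the only case in which the reported DWT average is not exceeded is the Gamma band for Eye Blink.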

Comparative SNR Analysis Across Frequency Bands for (A) Eye Blink, (B) Eye-movement, (C) Chewing, (D) Clench Teeth Using the Proposed Method—AnEEG (Orange), DWT based Method (Grey), and Raw Signals (Blue).


After evaluating the Signal-to-Noise Ratio (SNR), the Signal-to-Artifact Ratio (SAR) was also calculated for each artifact. SAR is another important metric that quantifies the ratio between the clean signal and the residual artifact. SAR was calculated for all 32 electrodes across all four artifacts: Eye Blink, Eye-movement, Chewing, and Clench Teeth. Afterward, the SAR values were averaged across all channels to obtain an overall performance measure. The evaluated data is presented in Table 10, showcasing the model’s ability to reduce artifacts.


The average SAR value for Eye Blink is \(\textbf{0.87}\) for the GAN-based method, compared to 0.83 for the DWT-based method. For Eye-movement, the GAN approach achieved a SAR of \(\textbf{0.62}\), while DWT achieved 0.50. In the case of Chewing artifacts, the GAN method resulted in a SAR of \(\textbf{0.90}\), while DWT yielded 0.80. Lastly, for Clench Teeth artifacts, the GAN method outperformed DWT with a SAR of \(\textbf{1.47}\) compared to 1.38. For all four artifacts, the proposed method demonstrated superior performance in artifact removal compared to DWT.

After testing and evaluating all the criteria on the split artifact dataset, the model was also analysed on an additional dataset, “SAM-40”36, which was recorded at Gauhati University, Department of Information Technology, Guwahati, Assam, India. This dataset was gathered from 40 subjects to monitor induced stress while performing tasks such as the Stroop color-word test, arithmetic tasks, and mirror image recognition tasks.

The same pre-processing technique was applied to this dataset as to the proposed dataset. One subject’s data was then cleaned of artifacts, and the RMSE, NMSE, CC, SNR, and SAR were analyzed for the clean signals generated by the proposed model. The RMSE, NMSE, and CC values were calculated for all 32 electrodes and then averaged. These values are described in Table 11. Additionally, SNR and SAR values are presented in Table 12.


In the SAM-40 dataset, the RMSE for the proposed model’s generated signal is 0.0819, compared to 0.0831 for DWT. The NMSE for the generated signal is 0.0066, while DWT’s NMSE is 0.0164. The CC for the proposed model’s generated signal is 0.5894, compared to 0.5273 for DWT. These results indicate that, once again, the proposed model outperforms DWT, with lower RMSE and NMSE values, and a higher CC value.

The SNR and SAR values are presented in Table 12; the SNR was calculated across different frequency bands. The SNR for the proposed model’s generated signal is 6.95 in the Theta band, 9.72 in the Alpha band, 13.44 in the Beta band, and 12.62 in the Gamma band. In comparison, the SNR for the raw artifacted signal is 2.32 in Theta, 2.90 in Alpha, 4.33 in Beta, and 0.63 in Gamma. For the DWT-cleaned signal, the SNR is 5.31 in Theta, 8.62 in Alpha, 11.79 in Beta, and 11.43 in Gamma. A general trend where SNR rises with frequency was noted, suggesting that the signal quality improves relative to noise as frequency increases. The graph illustrating these SNR comparisons is plotted in Fig. 7, where orange represents the proposed model’s generated signal, grey represents DWT, and blue represents the raw signal.

Comparative SNR Analysis Across Frequency Bands for “SAM-40” Dataset Using the Proposed Method (AnEEG) (Orange), DWT based Method (Grey), and Raw Signals (Blue).


Lastly, the SAR for the SAM-40 dataset was calculated. The SAR values are also described in Table 12 alongside SNR. The SAR for the proposed model is 1.34, compared to 1.09 for DWT. This further demonstrates the proposed model’s superior performance in artifact removal and signal preservation.

Hence, the proposed GAN-based denoising model showed remarkable effectiveness in eliminating noise from EEG signal, while keeping the crucial information about brain activity. Quantitative indicators like NMSE, RMSE, and CC show that the model consistently performed well throughout the demanding training and testing process.

Conclusion

This study proposed a novel model, “AnEEG”, for removing artifacts from EEG data by utilizing a Generative Adversarial Network (GAN) with Long Short-Term Memory (LSTM) layers. EEG is an essential tool in both neuroscience and clinical fields, providing insight into a wide range of brain activity. However, the recorded signals are routinely contaminated with unwanted components, which makes it difficult to study brain activity.

By employing GANs with LSTM layers, the proposed model was able to generate artifact-free EEG signals while preserving essential brain signal data. The quantitative evaluation based on Root Mean Squared Error (RMSE), Normalized Mean Squared Error (NMSE), and Correlation Coefficient (CC) highlighted the greater efficacy of the model compared to traditional wavelet-based denoising methods. In the evaluation, the model obtained higher CC values and lower NMSE and RMSE values, indicating stronger linear agreement with the ground-truth signals and smaller reconstruction error relative to the original signals, respectively.

Whereas some alternative approaches focus on specific artifacts, such as ocular artifacts17 or motion artifacts21, AnEEG effectively handles a broader range of artifacts, including Eye Blink, Eye-movement, Chewing, and Clenching Teeth, across different datasets. This generalizability is essential for real-world applications where multiple artifacts may occur simultaneously. The model consistently outperforms traditional methods like ICA, DWT, and other GAN-based approaches16, achieving higher Signal-to-Noise Ratio (SNR) and Signal-to-Artifact Ratio (SAR) values across different frequency bands and datasets. For example, in the Eye Blink dataset, AnEEG achieves higher SNR in the Beta band (13.08 for GAN-based vs. 10.62 for DWT).

Beyond benchmark datasets, the model has undergone extensive testing on challenging real-world datasets, such as SAM-40, where it continues to outperform existing methods16 in SNR and SAR metrics. This adaptability enhances the model’s reliability for practical applications, unlike other models limited to semi-simulated data or specific datasets19. Compared to other GAN-based approaches that require extensive training and fine-tuning15, AnEEG is more computationally efficient, making it suitable for real-time applications without compromising performance. While other methods like22 target single-channel motion artifacts, AnEEG effectively removes multiple artifacts from multi-channel EEG data.

The study in24 focused on specific filters and wavelet transforms, which show limitations in detecting short-duration artifacts. AnEEG overcomes these limitations, providing higher CC values and overall better artifact removal performance. Furthermore, methods13 that struggle with heavy noise signals, a common issue in real-life environments, are outperformed by AnEEG, which handles challenging artifacts like clenching teeth and chewing more effectively. AnEEG achieves a lower RMSE, with a value of 0.073 in the Eye Blink artifact, compared to 0.076 achieved by the methods in13. Some existing methods required prior knowledge of artifact frequencies18, which can be difficult in real-world scenarios. In contrast, AnEEG operates across different frequencies without the need for such specific knowledge. Additionally, while manual selection of noise components19 is time-consuming, AnEEG automates the artifact removal process, eliminating the need for manual intervention.

Lastly, whereas a lot of research focuses on limited evaluation metrics, this work employs a comprehensive set of metrics, including RMSE, NMSE, CC, SNR, and SAR, to thoroughly assess AnEEG’s effectiveness in artifact removal.

Overall, the use of deep learning capacity to improve EEG quality yields encouraging results. This advancement delivers more precise brain activity information, facilitating research and clinical diagnosis.