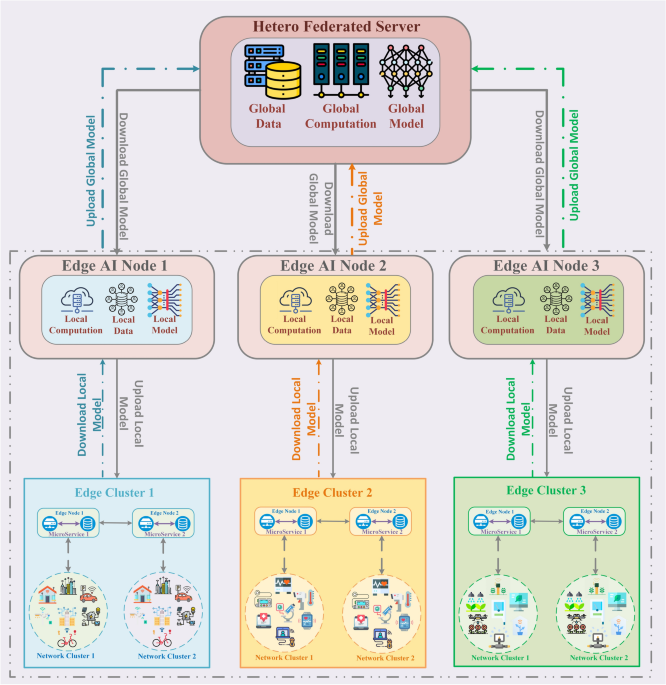

Application of artificial intelligence in VSD prenatal diagnosis from fetal heart ultrasound images

- Research

- Open access

- Published:

- Furong Li1,6 na1,

- Ping Li3 na1,

- Zhonghua Liu4 na1,

- Shunlan Liu2,

- Pan Zeng5,

- Haisheng Song6,

- Peizhong Liu5 &

- …

- Guorong Lyu2

BMC Pregnancy and Childbirth volume 24, Article number: 758 (2024)

Abstract

Background

To develop an approach that combines artificial intelligence (AI) with ultrasound imaging to provide accurate, objective, and efficient adjunctive diagnosis of fetal ventricular septal defects (VSD).

Methods

1,451 fetal heart ultrasound images from 500 pregnant women were comprehensively analyzed between January 2016 and June 2022. The fetal heart region was manually labeled and the presence of VSD was discriminated by experts. The principle of five-fold cross-validation was followed in the training set to develop the AI model to assist in the diagnosis of VSD. The model was evaluated in the test set using metrics such as mAP@0.5, precision, recall, and F1 score. The diagnostic accuracy and inference time were also compared with junior doctors, intermediate doctors, and senior doctors.

Results

The mAP@0.5, precision, recall, and F1 scores for the AI model diagnosis of VSD were 0.926, 0.879, 0.873, and 0.88, respectively. The accuracy of junior doctors and intermediate doctors improved by 6.7% and 2.8%, respectively, with the assistance of this system.

Conclusions

This study reports an AI-assisted diagnostic method for VSD that agrees closely with manual recognition. The model has few parameters and low computational complexity, and it can improve the diagnostic accuracy and speed of some physicians for VSD.

Introduction

Congenital heart disease (CHD) is one of the causes of neonatal mortality, with an incidence of approximately 4–13% of live births in China, and worldwide the total incidence of CHD in live births is 0.8–1.1% [1, 2]. According to the World Health Organization (WHO), ventricular septal defect (VSD) is a common form of CHD in which the ventricular septum develops incompletely, resulting in an incomplete separation between the two ventricles. Normally, the right and left ventricles are separated by the ventricular septum, which keeps oxygen-rich blood separate from oxygen-poor blood in circulation. VSD may cause these two types of blood to mix, thus affecting the normal functioning of the heart. VSD accounts for about 20–57% of CHD cases [3]. Many studies have shown that the apical four-chamber cardiac view (4CH) and left ventricular outflow tract view (LVOT) are the most commonly used planes for prenatal screening for VSD [4, 5]. The schematic diagram of VSD and no-VSD in 4CH and LVOT is shown in Fig. 1. The width of the defect in VSD often ranges from 0.1 to 3.0 cm, with smaller defects located in the muscular portion and larger defects located in the membranous portion. In small defects, the heart size can be normal, but in large defects, the left ventricle is more obviously enlarged than the right ventricle. Large defects not only affect development but are also associated with difficulty in feeding, shortness of breath, excessive sweating, fatigue, respiratory difficulties, recurrent lung infections, and even heart failure and infective endocarditis, which can seriously affect the health of the fetus.
VSD can exist alone or in conjunction with other cardiac anomalies such as atrial septal defects and patent ductus arteriosus, or as part of complex cardiac anomalies such as persistent truncus arteriosus, tetralogy of Fallot, and double outlet right ventricle [6, 7]. Because the disease is closely associated with changes in the internal diameter of the heart chambers and the size of the defect, it can seriously affect the child’s growth and development if intervention is delayed. In addition, the type and location of the defect differ between fetuses with VSD, and so do the choice of clinical treatment timing and the prognosis assessment. Therefore, for a fetus with VSD, early detection during pregnancy helps identify changes in the defect promptly, clarify the potential for spontaneous closure, and ensure timely, effective interventions. This benefits both maternal and fetal health and can significantly improve prognosis, which is of great clinical significance.

Fig. 1 Schematic diagram of VSD and no-VSD in 4CH and LVOT. (a) no-VSD in 4CH, (b) VSD in 4CH, (c) no-VSD in LVOT, (d) VSD in LVOT

With the advancement of modern technology, the detection rate of fetal CHD can be improved by utilizing prenatal ultrasound screening techniques. Although great progress has been made in 3D/4D ultrasound, Doppler ultrasound, and other ultrasound, 2D ultrasound is still widely used as a prenatal screening tool due to its low computational complexity and ease of use [8]. However, the interpretation of 2D ultrasound images is a challenging task for sonographers. This is because ultrasound images have scattered noise, resulting in a low signal-to-noise ratio of the image. The effect of shadowing also makes it difficult to depict the boundaries of potential organs. Therefore, identification of organs and detection of any abnormality relies heavily on the expertise of the sonographer, and the diagnosis of congenital heart disease is highly specialized, with a high rate of misdiagnosis and underdiagnosis by non-specialist hospitalists.

Machine learning and deep learning are both artificial intelligence (AI) techniques that deal with complex tasks primarily by mimicking the way neurons in the human brain connect [9]. AI has a wide range of applications in the medical field, such as medical image analysis, pathology and tissue image analysis, genomics data analysis, disease prediction and monitoring, etc [8, 10]. In particular, significant progress has been made in medical image diagnosis, as it can extract complex features from medical images to assist doctors in disease diagnosis and prediction, such as the automatic detection of breast cancer, lung cancer, and other diseases through deep convolutional neural networks (DCNN) [11, 12]. Many studies have shown that machine learning methods are also popular in fetal prenatal screening and birth risk prediction [13,14,15]. Many scholars apply machine learning models to assist doctors in predicting fetal birth asphyxia risk [16, 17]. Some researchers have used deep learning models to automatically detect and localize various fetal organs, such as the heart, brain, lungs, and limbs, which helps doctors more accurately assess the health of the fetus [18]. Some studies have used deep learning methods to monitor fetal growth trends, including measurements of parameters such as head circumference, abdominal circumference, and femur length, information that is critical for assessing whether fetal development is normal or not [19]. Some scholars have also applied AI methods to detect and diagnose possible fetal pathologies, such as heart malformations, brain abnormalities, kidney problems, etc. Deep learning models can analyze details in ultrasound images and provide early diagnostic information [20]. In this paper, we apply AI methods to assist in the diagnosis of VSD in fetal heart ultrasound images to improve diagnostic efficiency.

Related work

In recent years, the application of artificial intelligence (AI) in obstetric ultrasound has made significant progress, especially in the detection of complex fetal structures, automated image analysis, and disease screening. AI technology can significantly improve the diagnostic efficiency and accuracy of prenatal ultrasound, and has demonstrated expert-level detection, especially in screening for complex congenital heart disease (CHD). Arnaout et al. (2021) and Ejaz et al. (2024) showed that AI can help physicians reduce misdiagnosis by automating the identification of complex cardiac structures through a multi-neural network system, especially in the early screening of neonatal CHD [21, 22]. AI has also shown good results in other areas of fetal development monitoring: Horgan et al. (2023) and Ramirez Zegarra and Ghi (2023) noted that AI can improve the accuracy of fetal growth assessment through automated image processing and reduce the workload of doctors [23, 24]. In addition, the combination of 5D ultrasound imaging technology with AI provides more dimensional information for real-time 3D image analysis, and Jost et al. (2023) suggest that this combination may revolutionise clinical practice in obstetrics and gynaecology [25]. In terms of screening for fetal heart-brain anomalies, Enache et al. (2024) showed that AI can effectively identify abnormalities in fetal heart-brain structures, improving screening efficiency and reducing manual analysis time [26]. Deep learning algorithms (e.g., convolutional neural networks) have shown their strength in image segmentation and analysis when applied to fetal organ recognition and disease detection [27]. These techniques have helped AI to show great potential in ultrasound image analysis. The future direction of AI in obstetrics and gynaecology covers a wide range of areas such as high-risk pregnancy management and preterm birth prediction.
Although its technological potential is huge, further clinical validation and optimisation are needed. Malani et al. (2023) stated that AI will continue to support physicians with more accurate diagnosis and risk assessment through automated analysis and deep learning [28]. Shuping Sun et al. [29] successfully diagnosed small, moderate, and large VSD in adults using time- and frequency-domain feature extraction from heart sounds together with an SVM classifier and an ellipse model. Shih-Hsin Chen et al. [30] classified three VSD subtypes in adults on Doppler ultrasound images using a modified YOLOv4-DenseNet framework. Jia Liu et al. [31] analyzed heart sound recordings from 884 children with left-to-right shunt congenital heart disease using a residual convolutional recurrent neural network (RCRnet) classification model to classify atrial septal defects (ASD), ventricular septal defects (VSD), patent ductus arteriosus (PDA), and combined congenital heart disease. Kotaro Miura et al. [32] identified the presence of ASD on 12-lead electrocardiograms from 3 hospitals on 2 continents using a CNN-based model. Siti Nurmaini et al. [33] processed fetal ultrasound images using Mask-RCNN and achieved a segmentation detection accuracy of more than 90% for multiple fetal cardiac structures. Lokaiah Pullagura et al. [34] identified fetal congenital heart disease from fetal electrocardiogram (FECG) signals using the K-nearest neighbor algorithm and decision trees.

The focus of this paper is to develop an AI and deep learning based model for automatic detection of ventricular septal defect (VSD) in fetal cardiac ultrasound images and to evaluate its effectiveness in assisting physicians in diagnosis. The effectiveness of AI in dynamic ultrasound videos is also validated, demonstrating its potential for real-time clinical applications.

Materials and methods

The flow chart of this study is shown in Fig. 2. We first retrospectively collect prenatal ultrasound screening records of pregnant women and obtain compliant ultrasound images of the fetal heart. Then, the septal region of the fetal heart is labeled and the presence or absence of VSD is classified by experts. Based on the original images and labeling information, the deep learning fetal heart VSD detection algorithm is trained and evaluated in the training set and the test set following the principle of five-fold cross-validation. Finally, its performance is summarized and reported.

Fig. 2 The overall workflow of this study

Patient characteristics

In the retrospective study, 500 pregnant women who underwent prenatal fetal cardiac ultrasound were selected for the study between January 2016 and June 2022 at the First Hospital of Fujian Medical University and the Second Hospital of Fujian Medical University. The mean age of pregnant women is 30 years old, the maximum value is 46 years old, and the minimum value is 18 years old; the mean gestational age is 22 weeks, the maximum value is 39 weeks + 6 days, and the minimum value is 14 weeks + 3 days, as is shown in Table 1. The age distribution of pregnant women and fetus is shown in Fig. 3. As you can see from the maternal age distribution charts, the age distribution is not typically normal. Specifically, pregnant women’s ages are highly concentrated in the 27–32 year old range and less distributed in other ranges. This suggests that the data on maternal age may be skewed and therefore the use of the median is more appropriate. The graphs of gestational age distribution also showed some concentration, especially in the VSD group where gestational age was mostly concentrated between 22 and 28 weeks, while the distribution of gestational age in the no VSD group was wider. Based on these distributional characteristics, the data on fetal age may also be skewed, so the median should also be used to reflect the distribution of fetal age more accurately. Of these, 242 fetuses with a diagnosis of VSD have gold-standard cardiovascular angiographic and/or surgical findings as verification. All patients signed written informed consent and all data were anonymized.

Fig. 3 The age distribution of pregnant women and fetus

Image acquisition

We obtain 1451 fetal cardiac ultrasound images from 500 pregnant women, and the instruments used in the examinations are DC-8, Resona 8 (Mindray, China), Voluson E10, Voluson E8, Voluson E6, Rietta 70, HI Vision Preiru, Erlangshen ( Hitachi, Japan), Logiq P6, Expert 730 (General Electric, USA), Aixlorer (Acoustics, France), and Sequoia 512 (Siemens, Germany). The image sizes are 704 × 561, 720 × 576, 720 × 370, 768 × 576, and 1260 × 910. The inclusion criteria for the ultrasound images are as follows: (1) The images do not contain Doppler signals. (2) No excessive acoustic shadows are observed. (3) If the same pregnant woman has more than one examination documented in the system, we will have an interval of more than four weeks between each examination. (4) Images were deemed appropriate for selection based on the clear visibility of key cardiac landmarks. Specifically, the four-chamber view (4CH) clearly shows the structure of the left and right atria and ventricles, with a focus on the continuity of the interventricular septum. The left ventricular outflow tract (LVOT) view clearly shows the anatomy of the aortic valve and the left ventricular outflow tract, with particular emphasis on the relationship between the interventricular septum and the aortic root.

Image annotation

All 2D ultrasound images were labeled by two experienced sonographers using the labeling software labelme. One sonographer manually outlined the extent of the interventricular septum in each fetal cardiac ultrasound image. The other sonographer reviewed all manual annotations to ensure their quality; in case of disagreement, the two experts discussed and proposed new annotations as necessary until a consensus was reached. Reliable annotation is essential for developing and validating deep learning algorithms.

Deep learning algorithm

In this work, we design an end-to-end deep learning model to detect VSD. We label our data with 0 and 1 based on the actual diagnostic results, where 1 represents the presence of VSD and 0 its absence. The supervised network detects the ventricular septal region in every image and classifies it as 0 or 1, guided by the ground-truth labels. The network consists of four modules: Input, Backbone, Neck, and Head, as shown in Fig. 4(A). In the Input module, fetal heart ultrasound images are pre-processed through a series of operations such as data augmentation and then fed into the Backbone, which extracts features from the processed images. The Neck module then fuses the extracted features at three scales: large, medium, and small. Finally, the fused features are fed into the Head module, which outputs the location of the ventricular septal region and the classification of the presence or absence of VSD.

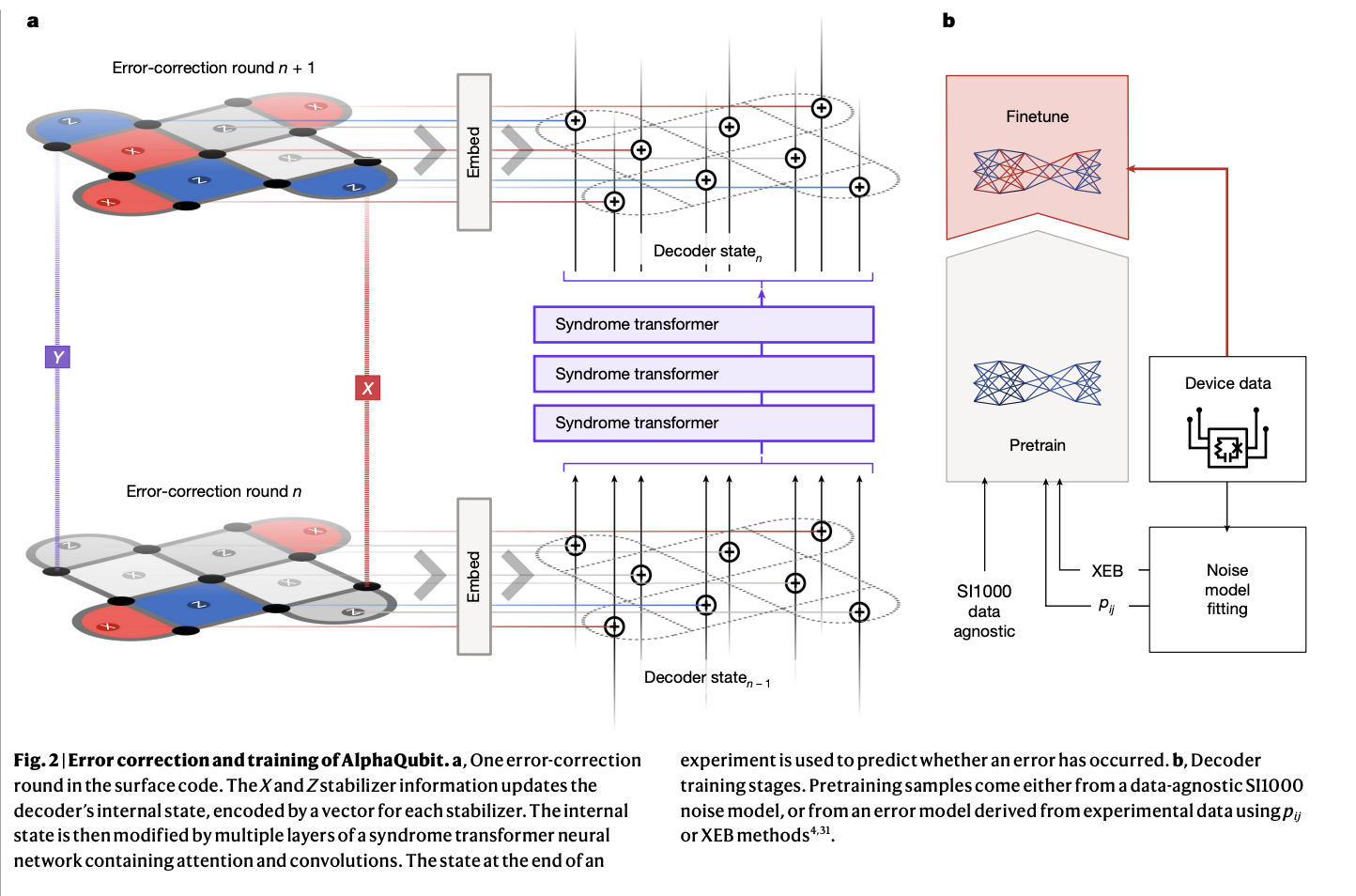

The whole network is similar to YOLOv5, as shown in Fig. 4(A), with two differences. The first is the Backbone, which we build from Shuffle_Block, a block based on two key techniques, group convolution and channel shuffle, that significantly reduces the computational complexity and the number of parameters while maintaining high performance, as shown in Fig. 4(B). The main idea is to divide the input channels into multiple groups. These channels are then rearranged so that each group contains information from different input groups, and convolution is then performed within each group. Second, we add a dynamic sparse attention mechanism based on bi-level (two-layer) routing to the small-target output layer of the Head module for more flexible allocation of computation and content awareness, as shown in Fig. 4(C). This bi-level routing attention is the core building block of BiFormer, a general-purpose vision transformer whose block structure is shown in Fig. 4(D). It exploits sparsity to save computation and memory and involves only GPU-friendly dense matrix multiplication. It attends to a small set of relevant regions without interference from irrelevant ones, which gives good performance and computational efficiency, especially in dense prediction tasks such as detecting the fetal ventricular septum, a small and dense structure. Comparison with other models shows that our model has much lower complexity than the baseline model and far fewer parameters.

Fig. 4 Details of the deep learning algorithm. (A) The overall framework of our proposed model; (B) the structure of Shuffle_Block in the Backbone module; (C) the structure of the BiFormer attention mechanism added in the Head module; (D) the structure of Biformer_Block, the main module of the BiFormer attention mechanism
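The two ideas behind Shuffle_Block can be illustrated with a minimal, framework-free sketch. It is not the authors' implementation: the function names `channel_shuffle` and `conv_params`, the channel counts, and the group counts are assumptions chosen only for illustration. The first function shows how channels are interleaved across groups, and the second shows why group convolution needs fewer weights than a standard convolution.

```python
def channel_shuffle(channels, groups):
    """Interleave channels so that each new group mixes channels from every old group."""
    n = len(channels)
    if n % groups != 0:
        raise ValueError("number of channels must be divisible by groups")
    per_group = n // groups
    # View the flat list as a (groups, per_group) grid and read it column by column,
    # which is the reshape -> transpose -> flatten trick used for channel shuffle.
    return [channels[g * per_group + i] for i in range(per_group) for g in range(groups)]


def conv_params(c_in, c_out, kernel, groups=1):
    """Number of weights in a 2D convolution without bias."""
    return (c_in // groups) * c_out * kernel * kernel


# Hypothetical sizes, for illustration only.
print(channel_shuffle(list(range(6)), groups=2))   # [0, 3, 1, 4, 2, 5]
print(conv_params(64, 64, 3))                      # 36864
print(conv_params(64, 64, 3, groups=4))            # 9216
```

With four groups, the 3 × 3 convolution in this example uses a quarter of the weights, and the shuffle step lets information flow between groups that would otherwise stay isolated.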

Training strategies

To address the imbalance between positive and negative samples, we use the Mosaic data augmentation method, which randomly selects four different images in each training batch, randomly selects a stitching location, and stitches the four images together at that location to form a new synthesized image. This compositing process involves scaling, cropping, and arranging the four images so that they form a single image. The label information is adjusted accordingly: the coordinates and dimensions of each target bounding box are recalculated and mapped onto the synthesized image. This improves the generalization ability and robustness of the model and helps it handle complex scenes. Meanwhile, we follow the principle of five-fold cross-validation to divide the dataset during training to ensure that the model is evaluated on several different training and testing sets to improve the stability and generalisation of the model.
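The bounding-box remapping step of Mosaic can be sketched as follows. This is a simplified illustration rather than the training code used in the study: it assumes normalized box coordinates, resizes each image to fill its quadrant instead of cropping it, and uses a hypothetical function name `mosaic_boxes` and a 640-pixel canvas.

```python
import random


def mosaic_boxes(boxes_per_image, canvas=640, rng=None):
    """Map the normalized boxes of four images onto one mosaic canvas.

    boxes_per_image: four lists of (cls, x1, y1, x2, y2) with coordinates in [0, 1].
    Simplification (assumption): each image is resized to fill its quadrant, no cropping.
    """
    if len(boxes_per_image) != 4:
        raise ValueError("Mosaic needs exactly four images")
    rng = rng or random.Random()
    # Random stitching point, kept away from the canvas borders.
    cx = rng.randint(canvas // 4, 3 * canvas // 4)
    cy = rng.randint(canvas // 4, 3 * canvas // 4)
    # (x offset, y offset, width, height) of the four quadrants.
    quads = [(0, 0, cx, cy), (cx, 0, canvas - cx, cy),
             (0, cy, cx, canvas - cy), (cx, cy, canvas - cx, canvas - cy)]
    merged = []
    for boxes, (ox, oy, w, h) in zip(boxes_per_image, quads):
        for cls, x1, y1, x2, y2 in boxes:
            # Scale into the quadrant, then shift by the quadrant offset.
            merged.append((cls, ox + x1 * w, oy + y1 * h, ox + x2 * w, oy + y2 * h))
    return (cx, cy), merged


# Hypothetical labels: class 1 = VSD, class 0 = no-VSD.
center, labels = mosaic_boxes(
    [[(1, 0.4, 0.4, 0.6, 0.6)], [(0, 0.1, 0.2, 0.5, 0.7)], [], [(1, 0.0, 0.0, 1.0, 1.0)]],
    rng=random.Random(0),
)
print(center, len(labels))
```

Because every synthesized image combines four source images, positive and negative examples are more likely to appear together in a single training sample.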

The experiments are conducted on a computer running Windows 10 with the following hardware configuration: a 12th Gen Intel(R) Core(TM) i5-12400F CPU, 16 GB of RAM, and an NVIDIA GeForce RTX3050 with 12 GB of GPU memory. The programming environment is Python 3.8, and the deep learning framework is PyTorch 1.9. All images are divided into training and test sets in a ratio of 8:2. The network input size is 640 × 640, and training runs for 300 epochs. The dataset division details are shown in Table 2.

In this study, hyperparameter tuning is performed for the proposed deep learning architecture to ensure optimal model performance. Specific hyperparameter tuning includes tuning of learning rate, batch size, number of training rounds, and optimiser selection. To find the optimal model, we adopt an experimental trial-and-error approach by experimenting with multiple hyperparameter combinations and testing their performance on the validation set. After several experiments and adjustments, we finally chose the following hyperparameter settings: a learning rate of 0.01, a batch size of 8, and training using the SGD optimiser. The results of each experiment were evaluated by five-fold cross-validation on metrics such as mAP@0.5, precision, recall, and F1 score, which ultimately determined the current optimal model configuration.
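A five-fold split of the training set can be sketched with the standard library alone. The function name `k_fold_indices`, the sample count of 100, and the seed are assumptions for illustration; the study does not describe how its folds were generated.

```python
import random


def k_fold_indices(n_samples, k=5, seed=0):
    """Yield (train, validation) index lists for k-fold cross-validation."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    # Deal the shuffled indices into k folds of near-equal size.
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        val = folds[i]
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, val


# Hypothetical training-set size of 100 images.
for fold, (train, val) in enumerate(k_fold_indices(100), start=1):
    print(fold, len(train), len(val))
```

Each image serves as validation data exactly once, so the five validation scores can be averaged into a single estimate for a given hyperparameter combination.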

Performance testing

Models are quantitatively evaluated using mAP@0.5, precision (Pre), recall (Re), and F1 score under 5-fold cross-validation.

The precision is the proportion of samples predicted by the model to be positive cases that are actually positive cases. It is expressed by the following formula:

$$Pre=\frac{TP}{TP+FP} \tag{1}$$

Where TP (True Positives) indicates the number of samples that the model correctly predicted as positive examples and FP (False Positives) indicates the number of samples that the model incorrectly predicted as positive examples. Higher precision indicates that the model is more accurate in the prediction of positive cases.

Recall is the proportion of samples that are truly positive cases that the model successfully predicts as positive. It is expressed by the following formula:

$$Re=\frac{TP}{TP+FN} \tag{2}$$

Where TP (True Positives) indicates the number of samples that the model correctly predicts as positive examples and FN (False Negatives) indicates the number of samples that the model incorrectly predicts as negative examples. A high recall indicates that the model better captures the positive examples.

The F1 score is the harmonic mean of precision and recall, which balances the accuracy of positive predictions against their coverage. The F1 score is represented by the following equation:

$$F1=\frac{2\times Pre\times Re}{Pre+Re} \tag{3}$$

The F1 score takes values between 0 and 1, where 1 indicates the best performance and 0 is the worst performance.
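Equations (1) to (3) can be checked with a short sketch. The counts used below (90 true positives, 10 false positives, 15 false negatives) are hypothetical and are not taken from the study; the function names are likewise illustrative.

```python
def precision_recall_f1(tp, fp, fn):
    """Precision, recall, and F1 from confusion counts, following Eqs. (1)-(3)."""
    pre = tp / (tp + fp) if tp + fp else 0.0
    re = tp / (tp + fn) if tp + fn else 0.0
    return pre, re, f1_score(pre, re)


def f1_score(pre, re):
    """Harmonic mean of precision and recall, Eq. (3)."""
    return 2 * pre * re / (pre + re) if pre + re else 0.0


# Hypothetical counts, for illustration only.
pre, re, f1 = precision_recall_f1(tp=90, fp=10, fn=15)
print(round(pre, 3), round(re, 3), round(f1, 3))   # 0.9 0.857 0.878

# Applying Eq. (3) to the precision and recall reported in the abstract.
print(round(f1_score(0.879, 0.873), 3))            # 0.876
```

The second value, 0.876, agrees with the reported F1 score of 0.88 after rounding to two decimals.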

The mAP synthesizes the precision-recall curves for different categories and computes their average. mAP computation usually consists of the following steps:

- (1) Calculate the precision-recall curve for each category.

- (2) Calculate the area under the precision-recall curve (AUC) for each category.

- (3) Average the AUC over all categories to obtain mAP.

The value of mAP ranges from 0 to 1, where 1 indicates the best performance and 0 the worst. In this study, we use mAP@0.5 as an evaluation metric, where 0.5 is the IoU (Intersection over Union) threshold: a detection is considered correct when the IoU between the predicted box and the ground-truth box is greater than or equal to 0.5. This choice follows standard practice for target detection tasks and balances the precision and recall of the detection without adding too many false positives. We believe that mAP@0.5 effectively reflects the overall performance of AI models in detecting VSDs and helps measure their robustness in complex clinical applications.
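The IoU criterion and the area under the precision-recall curve can be sketched as below. This is one common way to compute average precision (all-point interpolation with a precision envelope), assumed here for illustration; the study does not state which interpolation its tooling used, and the boxes and detections are hypothetical.

```python
def iou(a, b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(detections, n_truth):
    """Area under the precision-recall curve for one category.

    detections: list of (confidence, is_true_positive), where the flag was
    decided beforehand with the IoU >= 0.5 rule.
    """
    tp = fp = 0
    points = []  # (recall, precision) after each detection, by descending confidence
    for _, hit in sorted(detections, key=lambda d: d[0], reverse=True):
        if hit:
            tp += 1
        else:
            fp += 1
        points.append((tp / n_truth, tp / (tp + fp)))
    ap, prev_recall = 0.0, 0.0
    for i, (recall, _) in enumerate(points):
        # Precision envelope: best precision achievable at this recall or higher.
        best_precision = max(p for _, p in points[i:])
        ap += (recall - prev_recall) * best_precision
        prev_recall = recall
    return ap


print(iou((0, 0, 10, 10), (2, 0, 12, 10)) >= 0.5)   # True  (IoU = 2/3)
print(iou((0, 0, 10, 10), (5, 0, 15, 10)) >= 0.5)   # False (IoU = 1/3)
ap = average_precision([(0.9, True), (0.8, False), (0.7, True)], n_truth=2)
print(round(ap, 4))                                  # 0.8333
```

mAP@0.5 is then the mean of these per-category values.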

To fully evaluate the performance of our model, we compare it with well-performing classification models (ResNet18 [35], EfficientNetV2s [36], DenseNet [37], and MobileNetV3-large [38]) as well as target detection models (Faster R-CNN [39], YOLOv5s [40], YOLOv7 [41], and YOLOX [42]).

Statistical analysis

The statistical analysis was conducted using SPSS 20.0 software, and the performance of different models was assessed using McNemar’s test. When the p-value is less than 0.05, it indicates that the difference is statistically significant.
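McNemar’s test compares two models on the same test images by looking only at the discordant cases, that is, images that one model classifies correctly and the other does not. The sketch below uses the exact binomial form of the test; this is an assumption, since the text does not state which variant was run in SPSS, and the discordant counts are hypothetical.

```python
from math import comb


def mcnemar_exact(b, c):
    """Two-sided exact McNemar p-value.

    b: cases model A got right and model B got wrong.
    c: cases model B got right and model A got wrong.
    """
    n = b + c
    if n == 0:
        return 1.0
    k = min(b, c)
    # Under the null hypothesis, discordant cases split 50/50 between the models.
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2 * tail)


# Hypothetical discordant counts, for illustration only.
p = mcnemar_exact(b=2, c=10)
print(round(p, 4), p < 0.05)   # 0.0386 True
```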

Results

In our detection task, VSD is treated as the primary class of detection. No-VSD is not considered a separate detection class but rather implies the absence of VSD in the given image. In cases where neither VSD nor no-VSD is detected, the image will be categorized as ‘no detection,’ meaning that the model did not identify any relevant features for classification. This approach ensures that the focus remains on identifying the presence of VSD without introducing additional complexity from non-relevant or ambiguous detections.

The real-time detection results of the model for VSD are shown in Fig. 5, where row A is the original image, row B is the expert annotation, and row C is the detection result of the model. The first and fifth columns show no-VSD in LVOT, the second and sixth columns no-VSD in 4CH, the third and eighth columns VSD in 4CH, and the fourth and seventh columns VSD in LVOT. The results show that the deep learning method is highly consistent with manual discrimination in detecting the fetal ventricular septal region and automatically diagnosing VSD.

In addition to the analysis of static ultrasound images, we tested the AI model on dynamic screening videos. The performance of the AI model in dynamic detection was further validated by analyzing the captured real-time ultrasound videos. The video detection effect of the AI model for fetal heart ultrasound screening is shown in: https://github.com/1024Strawberries/VSD.

Fig. 5 The detection results

Fig. 6 Test results of the best model for 5-fold cross-validation. (a) F1 confidence curve, (b) P-R curve, (c) confusion matrix

5-fold cross-validation

Table 3 shows the results of the 5-fold cross-validation. The fourth fold achieved the highest evaluation metrics, so its model weights were saved for subsequent testing. The model has an average mAP@0.5 of 0.91, an average precision of 0.855, and an average recall of 0.883. Individual metrics are reproducible in a single training validation run. Figure 6 shows the results on the test set obtained with the model weights from the fourth fold.

Comparison results

Table 4 compares the performance of our model with existing classification and target detection models for assisted diagnosis of VSD in fetal heart ultrasound images. Among the compared models, ResNet50, EfficientNetV2s, DenseNet201, and MobileNetV3-large were pre-trained on the ImageNet dataset, a widely used public dataset for image classification tasks, while the target detection models Faster R-CNN, YOLOv5s, YOLOv7, and YOLOX-s are usually pre-trained on the COCO or ImageNet datasets. This pre-training prepares the models for a wide range of image classification and detection tasks. Our model, on the other hand, was trained exclusively on the fetal cardiac ultrasound image dataset and was not pre-trained on publicly available datasets, thus making it better adapted to the specific needs of medical images. ‘Parameters’ and ‘FLOPS’ measure the complexity and computational efficiency of the model. ‘Parameters’ is the number of trainable parameters in the model; a smaller number implies a more lightweight model that is suitable for running in environments with limited computational resources. ‘FLOPS’ measures the amount of computation the model requires, expressed as the number of floating-point operations needed to process one input. By analysing these two metrics, we can assess how the model will run on different hardware and ensure that it runs efficiently on resource-constrained devices while maintaining high accuracy.
The model proposed in this study has a low number of parameters and low FLOPS, so it maintains high detection performance while reducing computational and storage overhead, which makes it particularly suitable for deployment in resource-constrained environments with limited computing power.

Table 5 shows the comparison of the VSD diagnostic results in fetal heart ultrasound images by our method with those of junior doctors, intermediate doctors, and senior doctors. Specifically, junior doctors are those with shorter experience (usually 1–2 years) and are at the stage of building up their expertise and skills. Senior doctors are those with longer working experience (usually more than 10 years) who have gained extensive clinical diagnostic experience and are able to manage complex cases independently. It can be seen that the diagnostic accuracy of our method is the same as that of senior doctors, but the unit level of inference speed for one image is improved from minutes to milliseconds; meanwhile, the diagnostic accuracies of junior doctors and intermediate doctors are improved by 6.7% and 2.8%, respectively, with the assistance of the AI method.

Conclusion and discussion

In this study, we explore the diagnosis of VSD based on fetal heart ultrasound images. We propose an artificial intelligence method that has been trained and tested on 1451 fetal heart ultrasound images from two hospitals. Our model has a small number of parameters and low computational complexity, and it achieves an mAP@0.5 of 0.926 in recognizing the presence of VSD, which is highly consistent with the diagnoses of senior doctors, at an inference speed of 7 ms per image; it also assists the diagnosis of junior doctors. Meanwhile, our method outperforms other classification models and target detection models, and it is more suitable for regions with limited resources and limited computing power.

This study demonstrates that AI models can effectively assist doctors in VSD diagnosis, especially by improving the diagnostic accuracy of junior and intermediate doctors, which has important implications for medical education and clinical training. Traditional medical training relies on the accumulation of a large number of cases and experience, and beginners often encounter difficulties in diagnosing small and complex VSDs. The AI system provides real-time feedback through automatic recognition and annotation, helping doctors identify cardiac structures and abnormalities faster and more accurately, especially in complex or rare cases, and thus compensates for a lack of experience. Integrating AI systems into medical training can provide an interactive learning platform for beginners, generate highly accurate diagnostic results, continuously track doctors’ diagnostic decisions, and help doctors understand and improve their weaknesses in the diagnostic process, thus enhancing the acquisition of medical skills. Through long-term use, doctors can improve not only their VSD diagnostic ability but also their overall image interpretation and cardiology knowledge through interactive learning with the AI, shortening the learning curve. In addition, the AI system can be used as a distance learning tool in resource-limited areas, helping young doctors obtain high-quality diagnostic feedback in the absence of expert guidance, providing standardised diagnostic support, and helping to reduce differences in diagnostic level between doctors, which promotes the accessibility and fairness of medical education.

However, this study has some limitations. First, only a few VSD types, such as membranous and muscular VSDs, were represented in this study. Because VSDs can occur anywhere in the septum, and multiple VSDs may coexist, future studies will incorporate more VSD types and complex cases to improve the adaptability of the AI model. Second, the AI model was trained and tested on static ultrasound images and was not evaluated on real-time clinical video loops or fetal scans. We recognise that this may leave a gap with the actual clinical screening environment, in which detecting small VSDs is difficult, especially for beginners. Future studies will therefore focus on applying the AI system to real-time dynamic scanning and on evaluating its performance under unfavourable conditions such as different fetal positions and the absence of colour Doppler. Third, although this study provides initial validation of the diagnostic efficacy of AI on static images, its feasibility in a real clinical setting, especially for assisting real-time screening, still needs to be tested. Future prospective studies will further evaluate how much AI improves the VSD detection rate in real-time ultrasound scanning and explore its potential clinical value in actual diagnosis. Finally, because of the “black-box” nature of the deep learning model, the interpretability of the intermediate steps of VSD diagnosis is low. Future research should aim to improve the accuracy and reliability of the models so that they can detect and categorize different types of heart defects at an early stage. Different medical imaging modalities and clinical data can also be combined to allow a more comprehensive assessment and diagnosis of congenital heart disease. More attention should be paid to interpreting the model’s decisions and to providing reliable uncertainty estimates that help physicians understand the diagnostic results.
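One simple way to attach an uncertainty estimate to a prediction is the entropy of the predicted class probabilities. The sketch below is purely illustrative and is not part of the method evaluated in this study: the function names, the two-class (VSD / normal) setup, and the 0.5-bit review threshold are assumptions chosen for the example. Raw model probabilities are often poorly calibrated, so a real system would likely need calibration before such a threshold is meaningful.

```python
import math
from typing import Sequence


def predictive_entropy(probs: Sequence[float]) -> float:
    """Shannon entropy (in bits) of a class-probability vector."""
    total = sum(probs)
    if total <= 0:
        raise ValueError("probabilities must sum to a positive value")
    normalized = [p / total for p in probs]
    # Terms with p == 0 contribute nothing to the entropy.
    return -sum(p * math.log2(p) for p in normalized if p > 0)


def needs_expert_review(probs: Sequence[float], threshold_bits: float = 0.5) -> bool:
    """Flag a prediction for review when its entropy exceeds a hypothetical threshold."""
    return predictive_entropy(probs) > threshold_bits


# Hypothetical outputs for [P(VSD), P(normal)] on two images.
confident = [0.98, 0.02]
ambiguous = [0.55, 0.45]

print(needs_expert_review(confident))  # False
print(needs_expert_review(ambiguous))  # True
```

A confident prediction has entropy close to 0 bits, while an even split between two classes has the maximum of 1 bit, so high-entropy cases are natural candidates for referral to a senior doctor.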

Data availability

The datasets used and/or analyzed during the current study are available from the corresponding author upon reasonable request. To validate the performance of the AI system in dynamic ultrasound screening, a link to the real-time screening video is included for readers to further review the testing process and results (https://github.com/1024Strawberries/VSD).

Abbreviations

- CHD: Congenital Heart Disease

- VSD: Ventricular Septal Defect

- 4CH: Four-chamber cardiac view

- LVOT: Left Ventricular Outflow Tract view

- DCNN: Deep Convolutional Neural Networks

- AI: Artificial Intelligence

References

-

El-Chouli M, Mohr GH, Bang CN, Malmborg M, Ahlehoff O, Torp-Pedersen C, Gerds TA, Idorn L, Raunsø J, Gislason G. Time trends in simple congenital heart Disease over 39 years: a Danish Nationwide Study. J Am Heart Assoc. 2021;10(14):e020375. Epub 2021 Jul 3. PMID. 34219468; PMCID: PMC8483486.

Article PubMed PubMed Central Google Scholar

-

Pan F, Xu W, Li J, Huang Z, Shu Q. Trends in the disease burden of congenital heart disease in China over the past three decades. Zhejiang Da Xue Xue Bao Yi Xue Ban. 2022;51(3):267–77. https://doi.org/10.3724/zdxbyxb-2022-0072. English.

Article PubMed PubMed Central Google Scholar

-

WHO CO. World health organization. Responding to Community Spread of COVID-19 Reference WHO/COVID-19/Community_Transmission/20201. 2020.

-

Rieder W, Eperon I, Meagher S. Congenital heart anomalies in the first trimester: from screening to diagnosis. Prenat Diagn. 2023;43:889–900.

Article PubMed Google Scholar

-

Tudorache S, Cara M, Iliescu DG, Novac L, Cernea N. First trimester two- and four-dimensional cardiac scan: intra- and interobserver agreement, comparison between methods and benefits of color Doppler technique. Ultrasound Obstet Gynecol. 2013;42:659–68.

Article PubMed Google Scholar

-

Soto B, Becker A, Moulaert A, Lie J, Anderson R. Classification of ventricular septal defects. Heart. 1980;43:332–43.

Article Google Scholar

-

Penny DJ, Vick GW. Ventricular septal defect. Lancet. 2011;377:1103–12.

Article PubMed Google Scholar

-

Sridevi S, Kanimozhi T, Bhattacharjee S, Reddy SS, Shahwar D. Hybrid Quantum Classical Neural Network-Based Classification of Prenatal Ventricular Septal Defect from Ultrasound Images. In: International Conference on Computational Intelligence and Data Engineering. Springer; 2022. pp. 461–8.

-

LeCun Y, Bengio Y, Hinton G. Deep learning. Nature. 2015;521:436–44.

Article PubMed Google Scholar

-

Ximenes RS, Bravo-Valenzuela NJ, Pares DBS, Araujo Júnior E. The use of cardiac ultrasound imaging in first-trimester prenatal diagnosis of congenital heart diseases. J Clin Ultrasound. 2023;51:225–39.

Article PubMed Google Scholar

-

Amrane M, Oukid S, Gagaoua I, Ensari T. Breast cancer classification using machine learning. 2018 electric electronics, computer science, biomedical engineerings’ meeting (EBBT). IEEE; 2018. pp. 1–4.

-

Wang D, Khosla A, Gargeya R, Irshad H, Beck AH. Deep learning for identifying metastatic breast cancer. arXiv Preprint arXiv:160605718. 2016.

-

Horgan R, Nehme L, Abuhamad A. Artificial intelligence in obstetric ultrasound: a scoping review. Prenat Diagn. 2023.

-

Darsareh F, Ranjbar A, Farashah MV, Mehrnoush V, Shekari M, Jahromi MS. Application of machine learning to identify risk factors of birth asphyxia. BMC Pregnancy Childbirth. 2023;23:156.

Article PubMed PubMed Central Google Scholar

-

Huang B, Zheng S, Ma B, Yang Y, Zhang S, Jin L. Using deep learning to predict the outcome of live birth from more than 10,000 embryo data. BMC Pregnancy Childbirth. 2022;22:36.

Article PubMed PubMed Central Google Scholar

-

Islam MN, Mustafina SN, Mahmud T, Khan NI. Machine learning to predict pregnancy outcomes: a systematic review, synthesizing framework and future research agenda. BMC Pregnancy Childbirth. 2022;22:348.

Article PubMed PubMed Central Google Scholar

-

Gangadhar MS, Sai KVS, Kumar SHS, Kumar KA, Kavitha M, Aravinth S. Machine Learning and Deep Learning Techniques on Accurate Risk Prediction of Coronary Heart Disease. In:, Methodologies, Communication. (ICCMC). IEEE; 2023. pp. 227–32.

-

Morris SA, Lopez KN. Deep learning for detecting congenital heart disease in the fetus. Nat Med. 2021;27:764–5.

Article PubMed Google Scholar

-

Wang Y, Shi Y, Zhang C, Su K, Hu Y, Chen L, et al. Fetal weight estimation based on deep neural network: a retrospective observational study. BMC Pregnancy Childbirth. 2023;23:560.

Article PubMed PubMed Central Google Scholar

-

Zhu J, Chen J, Zhang Y, Ji J. Brain tissue development of neonates with congenital septal defect: study on MRI image evaluation of Deep Learning Algorithm. Pakistan J Med Sci. 2021;37:1652.

Google Scholar

-

Arnaout R, Curran L, Zhao Y, Levine JC, Chinn E, Moon-Grady AJ. An ensemble of neural networks provides expert-level prenatal detection of complex congenital heart disease. Nat Med. 2021;27(5):882–91.

Article PubMed PubMed Central Google Scholar

-

Ejaz H, Thyyib T, Ibrahim A, Nishat A, Malay J. Role of artificial intelligence in early detection of congenital heart diseases in neonates. Front Digit Health. 2024;5:1345814.

Article PubMed PubMed Central Google Scholar

-

Horgan R, Nehme L, Abuhamad A. Artificial intelligence in obstetric ultrasound: a scoping review. Prenat Diagn. 2023;43(9):1176–219.

Article PubMed Google Scholar

-

Ramirez Zegarra R, Ghi T. Use of artificial intelligence and deep learning in fetal ultrasound imaging. Ultrasound Obstet Gynecol. 2023;62(2):185–94.

Article PubMed Google Scholar

-

Jost E, Kosian P, Jimenez Cruz J, Albarqouni S, Gembruch U, Strizek B, Recker F. Evolving the era of 5D Ultrasound? A systematic literature review on the applications for Artificial Intelligence Ultrasound Imaging in Obstetrics and Gynecology. J Clin Med. 2023;12(21):6833.

Article PubMed PubMed Central Google Scholar

-

Enache, I. A., Iovoaica-Rămescu, C., Ciobanu, Ș. G., Berbecaru, E. I. A., Vochin,A., Băluță, I. D., … Iliescu, D. G. (2024). Artificial Intelligence in Obstetric Anomaly Scan: Heart and Brain. Life, 14(2), 166.

-

Fiorentino MC, Villani FP, Di Cosmo M, Frontoni E, Moccia S. A review on deep-learning algorithms for fetal ultrasound-image analysis. Med Image Anal. 2023;83:102629.

Article PubMed Google Scholar

-

Malani IV, Shrivastava SN, D., Raka MS. (2023). A comprehensive review of the role of artificial intelligence in obstetrics and gynecology. Cureus, 15(2).

-

Sun S, Wang H, Jiang Z, Fang Y, Tao T. Segmentation-based heart sound feature extraction combined with classifier models for a VSD diagnosis system. Expert Syst Appl. 2014;41:1769–80.

Article Google Scholar

-

Chen S-H, Tai I-H, Chen Y-H, Weng K-P, Hsieh K-S. Data Augmentation for a Deep Learning Framework for ventricular septal defect Ultrasound Image classification. SpringerLink. 2021;:310–22.

-

Liu J, Wang H, Yang Z, Quan J, Liu L, Tian J. Deep learning-based computer-aided heart sound analysis in children with left-to-right shunt congenital heart disease. Int J Cardiol. 2022;348:58–64.

Article PubMed Google Scholar

-

Miura K, Yagi R, Miyama H, Kimura M, Kanazawa H, Hashimoto M et al. Deep learning-based model detects atrial septal defects from electrocardiography: a cross-sectional multicenter hospital-based study. eClinicalMedicine. 2023.

-

Nurmaini S, Rachmatullah MN, Sapitri AI, Darmawahyuni A, Jovandy A, Firdaus F, et al. Accurate detection of septal defects with fetal ultrasonography images using deep learning-based multiclass instance segmentation. IEEE Access. 2020;8:196160–74.

Article Google Scholar

-

Dr. Lokaiah Pullagura MRD. Recognition of fetal heart diseases through machine learning techniques. Annals RSCB. 2021;25:2601–15.

Google Scholar

-

He K, Zhang X, Ren S, Sun J. Deep residual learning for image recognition. In: Proceedings of the IEEE conference on computer vision and pattern recognition. 2016. pp. 770–8.

-

Tan M, Le Q. Efficientnetv2: Smaller models and faster training. In: International conference on machine learning. PMLR; 2021. pp. 10096–106.

-

Iandola F, Moskewicz M, Karayev S, Girshick R, Darrell T, Keutzer K. Densenet: implementing efficient convnet descriptor pyramids. arXiv Preprint arXiv:14041869. 2014.

-

Howard A, Sandler M, Chu G, Chen L-C, Chen B, Tan M et al. Searching for mobilenetv3. In: Proceedings of the IEEE/CVF international conference on computer vision. 2019. pp. 1314–24.

-

Ren S, He K, Girshick R, Sun J. Faster r-cnn: towards real-time object detection with region proposal networks. Adv Neural Inf Process Syst. 2015;28.

-

Jocher G, Chaurasia A, Stoken A, Borovec J, Kwon Y, Michael K et al. ultralytics/yolov5: v6. 2-yolov5 classification models, apple m1, reproducibility, clearml and deci. Ai integrations. Zenodo. 2022.

-

Wang C-Y, Bochkovskiy A, Liao H-YM. YOLOv7: Trainable Bag-of-Freebies Sets New State-of-the-Art for Real-Time Object Detectors. 2023.

-

Ge Z, Liu S, Wang F, Li Z, Sun J. Yolox: Exceeding yolo series in 2021. arXiv preprint arXiv:210708430. 2021.

Download references

Acknowledgements

The authors would like to thank the nurses and physicians who helped collect and label the data. The authors would like to express their sincere gratitude to the editors and reviewers of BMC Pregnancy and Childbirth for their valuable comments and suggestions on this study. The professional guidance of the editors and the constructive feedback from the reviewers during the revision and refinement of the manuscript have greatly enhanced the quality and rigour of this paper. We express our heartfelt gratitude for their hard work and support.

Funding

This work was supported by grants from the National Natural Science Foundation of Fujian (2021J011404, 2023J01173, 2023J011784) and by the Quanzhou scientific and technological planning projects (2022NS057).

Ethics declarations

Ethics approval and consent to participate

This study was conducted in accordance with the Declaration of Helsinki on Medical Research Involving Human Subjects. All patients provided written informed consent and underwent routine clinical treatment at our center. The study was approved by the Medical Ethics Committee of the Second Affiliated Hospital of Fujian Medical University (2020-115).

Consent for publication

Not applicable.

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Li, F., Li, P., Liu, Z. et al. Application of artificial intelligence in VSD prenatal diagnosis from fetal heart ultrasound images. BMC Pregnancy Childbirth 24, 758 (2024). https://doi.org/10.1186/s12884-024-06916-y

DOI: https://doi.org/10.1186/s12884-024-06916-y