
Postoperative outcome analysis of chronic rhinosinusitis using transfer learning with pre …

- Research

- Open access


- Wentao Gong1,2 na1,

- Keguang Chen3 na1,

- Xiao Chen2,

- Xueli Liu2,

- Zhen Li2,

- Li Wang2,

- Yuxuan Shi2,

- Quan Liu2,

- Xicai Sun2,4,

- Xinsheng Huang3,

- Xu Luo5 &

- …

- Hongmeng Yu1,2,4

BioMedical Engineering OnLine volume 24, Article number: 95 (2025)

Abstract

Background

This study developed a foundation model-based analytical framework for the analysis of postoperative endoscopic images in chronic rhinosinusitis (CRS). The framework leverages the standardized identification and reproducible results enabled by artificial intelligence algorithms, combined with the strengths of pre-trained foundation models in developing downstream applications. This approach effectively addresses the inherent challenge of strong subjectivity in conventional postoperative endoscopic evaluation for CRS.

Methods

The postoperative sinus cavity status in CRS was classified into three states: “polyp”, “edema”, and “smooth”, to establish an endoscopic image dataset. Using transfer learning based on pre-trained large models for endoscopic images, we developed an analytical model for postoperative outcome evaluation in CRS. Comparative studies with various traditional training methods were conducted to evaluate this approach, demonstrating that it can achieve satisfactory model performance even with limited datasets.

Results

The endoscopic image-based pre-trained transfer learning model proposed in this study demonstrates significant advantages over conventional methods in diagnostic performance. In the precision evaluation for distinguishing smooth mucosa from rest conditions (edema and polyps), our model achieved mean accuracy and AUC values of 91.17% and 0.97, respectively, with specificity reaching 86.35% and sensitivity attaining 91.85%. This represents an approximate 4% improvement in mean accuracy compared to traditional algorithms. Notably, in the differential diagnosis between polyps and rest conditions (smooth mucosa and edema), the proposed algorithm attained mean accuracy and AUC values of 81.87% and 0.90, respectively, demonstrating specificity of 80.53% and sensitivity of 81.04%. This configuration shows a substantial 15% enhancement in mean accuracy relative to conventional diagnostic approaches.

Conclusion

The transfer learning algorithm model based on pre-trained foundation models can provide accurate and reproducible analysis of postoperative outcomes in CRS, effectively addressing the issue of high subjectivity in postoperative evaluation. With limited data, our model can achieve better generalization performance compared to traditional algorithms.

Introduction

CRS represents one of the most prevalent conditions managed by otolaryngology-head and neck surgery specialists. In China, the overall prevalence of CRS reaches 8%, affecting approximately 110 million individuals [1]. When pharmacological therapy proves ineffective, patients require endoscopic sinus surgery (ESS), followed by multidisciplinary treatment. Postoperative follow-up and interventions during the 3- to 6-month recovery period are critical for consolidating surgical outcomes [2].

Clinical decision-making during follow-up primarily relies on endoscopic evaluation of surgical cavity status [3]. However, standardized postoperative management faces two major clinical challenges. First, the subjective nature of endoscopic assessment frequently leads to inter-rater variability among clinicians, resulting in discrepant therapeutic decisions for identical cavity presentations. Second, the need for in-person endoscopic evaluations creates significant compliance barriers in contemporary fast-paced societies: patients often struggle to maintain regular follow-up schedules or are lost to follow-up.

The advent of big data and advancements in computational hardware have driven revolutionary progress in artificial intelligence (AI) over the past decade. Deep learning (DL) algorithms, particularly convolutional neural networks (CNNs) [4], have been extensively investigated and implemented in medical practice. Notable applications include chest CT-based pulmonary disease screening [5], gastrointestinal endoscopy-aided digestive disorder detection [6], and fundus image analysis for ocular pathology identification [7]. Significantly, multiple AI systems have obtained Class III medical device certifications (the highest risk category requiring rigorous clinical validation), demonstrating tangible clinical integration for enhancing diagnostic accuracy and reducing clinician workload.

Currently, in the field of AI-assisted diagnosis using nasal endoscopy, AI algorithms based on static white light endoscopic images [8, 9] or narrow-band images [10] have been developed to detect nasopharyngeal malignant tumors, delineate the extent of nasopharyngeal cancer [11], differentiate nasal polyps from papillomas [12], and assess the degree of adenoid hypertrophy in children [13]. Additionally, AI algorithms utilizing dynamic endoscopic videos have been employed to screen for nasopharyngeal cancer [14]. These approaches have demonstrated reliable auxiliary diagnostic capabilities in specific clinical scenarios.

In this study, we aim to exploit the strengths of deep learning algorithms in image recognition to develop a classification model trained on CRS postoperative nasal endoscopic images. The goal is to establish a standardized, reproducible, and easily implementable method for disease assessment, which will facilitate the integration of AI models into clinical settings for supporting CRS chronic disease management. This approach also lays the groundwork for potential future applications of digital therapies for CRS, mediated by home-use endoscopes.

Results

Experiment setup

Our method is implemented using PyTorch, and the experiments were conducted on two NVIDIA A800 GPUs (80 GB memory). For the random initialization method, we trained the model from traditional random initialization (Scratch) for 100 epochs with a batch size of 16, using the Adam optimizer and an initial learning rate set to 2e-4. A polynomial learning rate scheduling strategy was used, and the learning rate was exponentially decayed by a factor of 0.9 at each epoch. For the pre-trained foundation models based on CLIP [15], MedSAM [16], and Endo-FM [17], the learning rate was adjusted to 2e-5, while all other settings remained the same as in the Scratch method.
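The schedule described above mentions both a polynomial strategy and a per-epoch decay by a factor of 0.9, so the following is only a minimal sketch of the two readings, not the authors' training code. The function names are hypothetical, and treating 0.9 as the exponent of a polynomial schedule is an assumption made purely for comparison.

```python
def exponential_lr(base_lr: float, epoch: int, gamma: float = 0.9) -> float:
    """Learning rate after `epoch` epochs when multiplied by `gamma` every epoch."""
    return base_lr * gamma ** epoch


def polynomial_lr(base_lr: float, epoch: int, total_epochs: int,
                  power: float = 0.9) -> float:
    """Polynomial decay to zero over `total_epochs` (power=0.9 is an assumption)."""
    return base_lr * (1.0 - epoch / total_epochs) ** power


if __name__ == "__main__":
    # Initial learning rates taken from the text: 2e-4 (Scratch), 2e-5 (pre-trained).
    for name, base in (("Scratch", 2e-4), ("pre-trained", 2e-5)):
        for epoch in (0, 1, 10, 50, 99):
            print(name, epoch,
                  exponential_lr(base, epoch),
                  polynomial_lr(base, epoch, total_epochs=100))
```

Under the per-epoch reading the learning rate shrinks quickly (by a factor of 0.9 to the power of the epoch index), whereas the polynomial reading decays almost linearly over the 100 epochs; which of the two was actually used is not determined by the text.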

AI model performance

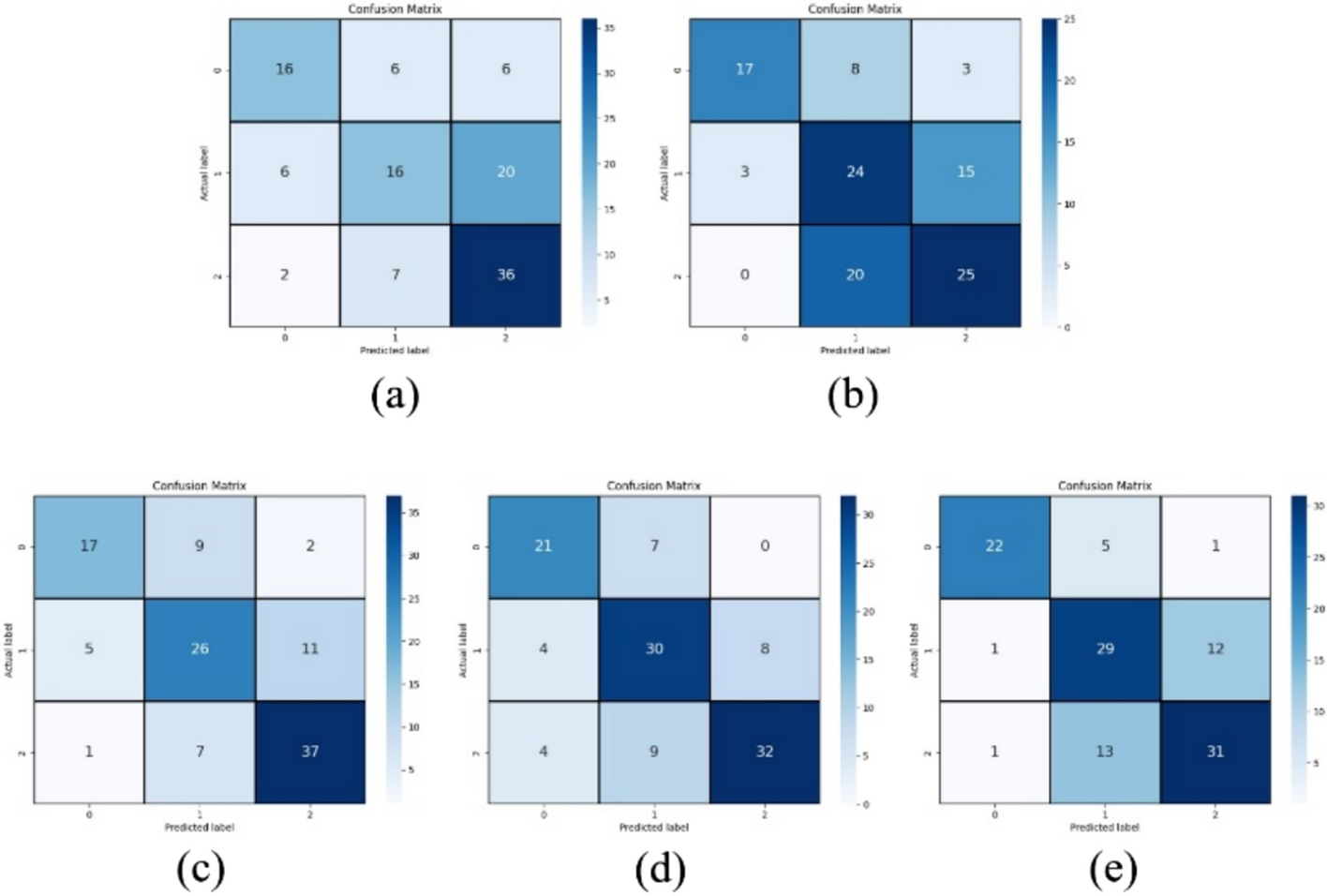

After screening and standardization, all labeled images were divided into a training set (400 images each of polyp, edema, and smooth), a validation set (50 images per class), and a test set (50 images per class), corresponding to an 8:1:1 ratio. On this dataset, we used the ViT-B [18] network as the base model and compared it with ResNet-50 [19]. We then compared five training configurations: ResNet trained from scratch, ViT trained from scratch, and ViT initialized from the CLIP, MedSAM, or Endo-FM pre-trained model. The accuracy metrics of the five configurations are presented in Table 1. To give a more comprehensive and intuitive comparison of their diagnostic performance, the confusion matrices and ROC curves are shown in Figs. 1 and 2. Overall, the performance difference between the randomly initialized ResNet and ViT was small. In the smooth vs. rest task, the accuracy of ViT was approximately 5% higher than that of ResNet, whereas in the polyp vs. rest task, the accuracy of ResNet was about 3% higher than that of ViT.
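A class-balanced split with the per-class counts given above (400/50/50) can be sketched as follows. This is an illustrative assumption about the mechanics only: the function name, the fixed seed, and the toy label list are hypothetical, and the text does not state how individual images were assigned to each subset.

```python
import random
from collections import defaultdict


def stratified_split(labels, n_train=400, n_val=50, n_test=50, seed=0):
    """Return index lists (train, val, test) with fixed per-class counts."""
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    rng = random.Random(seed)  # fixed seed so the split is reproducible
    train, val, test = [], [], []
    for label in sorted(by_class):
        indices = by_class[label]
        if len(indices) < n_train + n_val + n_test:
            raise ValueError(f"not enough images for class {label!r}")
        rng.shuffle(indices)
        train += indices[:n_train]
        val += indices[n_train:n_train + n_val]
        test += indices[n_train + n_val:n_train + n_val + n_test]
    return train, val, test


# Toy labels standing in for the real dataset: 500 images per class.
labels = ["polyp"] * 500 + ["edema"] * 500 + ["smooth"] * 500
train_idx, val_idx, test_idx = stratified_split(labels)
print(len(train_idx), len(val_idx), len(test_idx))  # 1200 150 150
```

In a clinical dataset it is usually worth splitting by patient rather than by image so that images from one patient do not appear in both training and test sets; the sketch does not model that.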


Fig. 1. a–e Three-class confusion matrices on the test set for ResNet and ViT trained from Scratch, and for ViT models using CLIP, MedSAM, and Endo-FM as pre-trained foundation models for transfer learning


Fig. 2. a–e Three-class ROC curves on the test set for ResNet and ViT trained from Scratch, and for ViT models using CLIP, MedSAM, and Endo-FM as pre-trained foundation models for transfer learning. Class 0 represents Smooth vs. Rest, class 1 represents Edema vs. Rest, and class 2 represents Polyp vs. Rest


In the comparison of models after transfer learning with pre-trained foundation models, the model based on the Endo-FM pre-trained foundation model demonstrated the best performance on the test set. In the accuracy test, the proposed model based on the pre-trained transfer model from endoscopic images showed a significant advantage over traditional methods. In the diagnosis of smooth and rest conditions (edema and polyp), the average accuracy was 91.17% (95% CI 86.95–96.52), and the AUC reached 0.97 (95% CI 0.93–0.99). The specificity was 86.35% (95% CI 77.99–94.07), and the sensitivity was 91.85% (95% CI 4.37–91.20), with an average accuracy improvement of about 4% compared to traditional methods. In the diagnosis of polyp vs. rest conditions (smooth and edema), the average accuracy was 81.87% (95% CI 73.04–87.83), and the AUC was 0.90 (95% CI 0.83–0.95). The specificity was 80.53% (95% CI 70.29–86.92), and the sensitivity was 81.04% (95% CI 71.10–87.38), with an average accuracy improvement of about 15% (p < 0.05) compared to traditional methods.

In the Smooth vs.-Rest classification, “Rest” includes edema and polyp states, whereas in the Polyp vs.-Rest classification, “Rest” includes smooth and edema states. In the “Methods” column, the term before “-” represents the base model, while the term after “-” indicates the transfer learning model (with “Scratch” referring to training from the beginning without any pre-trained weights). Specificity, Proportion of correctly identified negative samples; Sensitivity, Proportion of correctly identified positive samples; F1 score, Harmonic mean of Precision and Recall; AUC, Area under the ROC curve.

Discussion

In the clinical diagnosis and treatment process, patients with CRS who do not respond well to standardized pharmacological therapy may opt for surgical treatment. Accurate assessment and appropriate intervention of ESS postoperative outcomes are critical for improving CRS prognosis. However, in actual clinical practice, endoscopy-based CRS postoperative evaluations are highly subjective, and different evaluation standards may lead to different intervention measures. Additionally, since disease assessment relies on outpatient endoscopic examinations, some patients have poor follow-up adherence, leading to loss to follow-up, which makes it difficult to provide high-quality, full-process disease management for individual patients. To address these two clinical challenges, this study utilizes AI algorithms to establish an analysis model based on CRS postoperative nasal endoscopy images, which can accurately and reproducibly distinguish between smooth, edema, or polyp conditions in the surgical cavity, thus providing a reliable reference for developing appropriate intervention measures.

Due to the high heterogeneity of CRS postoperative images and limited training data, traditional algorithms often face limitations in generalization and universality. To address this technical challenge, this study proposes using pre-trained foundation models from the professional field for transfer learning, making full use of the limited dataset to achieve optimal model performance. To validate the effectiveness of this approach, this study compared two technical routes: traditional model training and transfer learning based on pre-trained foundation models, and examined their differences in accuracy, sensitivity, and specificity in assessing surgical cavity condition. The results showed that the model obtained from transfer learning with a pre-trained foundation model significantly outperformed the traditional algorithm. This improvement is mainly due to the comprehensive and diverse features extracted from massive data by the pre-trained foundation model, enabling the model to achieve better generalization with limited labeled training data [20].

This study selected multiple pre-trained foundation models for transfer learning. The comparison of validation results reveals that the Endo-FM algorithm, based on the endoscopic dataset, outperforms both MedSAM and CLIP. The reason is that the vast gastrointestinal endoscopic image features provided by Endo-FM are stylistically closer to the imaging conditions and texture of CRS surgical cavity images, leading to better transfer performance [21]. Based on this result, future work can collect large-scale nasal endoscopy images to build a nasal endoscopy-specific dataset, which will provide more direct and comprehensive nasal endoscopy features for achieving optimal model performance. To assess whether the image features the model focuses on are consistent with the features required for clinical decision-making, we visualized the attention map of the optimal model (Fig. 3). The results show that the model developed in this study correctly focuses on key areas of the disease and is able to extract features from these key areas for CRS postoperative assessment and diagnostic tasks.

Fig. 3. Visualization of attention maps. a and c are endoscopic images of edema and polyp lesions, respectively; b and d are the attention maps obtained from the model for a and c, respectively


In addition, the establishment of this algorithm model provides a potential solution to the issues of poor follow-up adherence and postoperative loss to follow-up. Previous studies have demonstrated the use of mobile phone photos for detecting skin lesions [22], wearable devices for automatic heart rate monitoring [23], and fall monitoring, which have fully proven that the combination of home medical devices and AI algorithms can achieve diagnostic results similar to those of hospitals under specific conditions. Since CRS postoperative follow-up heavily relies on nasal endoscopy, and with the trend of miniaturization of endoscopes, home-use endoscopes could be developed for home follow-up, where patients take photos of the surgical cavity, upload the images to the cloud for online analysis and evaluation, and receive AI diagnostic suggestions. This approach would improve the convenience of follow-up and the quality of full-process disease management. Furthermore, due to the transfer learning based on pre-trained foundation models, the model in this study exhibits strong domain generalization ability, which can address the issue of weakened model performance due to the imaging quality and diversity of home-use endoscopes, offering substantial clinical and application potential in the future.

Conclusions

In summary, the transfer learning algorithm model based on pre-trained foundation models can perform accurate and reproducible analysis of CRS postoperative outcomes based on nasal endoscopy images, which effectively addresses the issue of high subjectivity in CRS postoperative evaluations. Thanks to the comprehensive and generalizable feature learning ability of pre-trained large models, better generalization performance can be achieved with a small amount of labeled data compared to traditional algorithms. In future work, we will continue to research and develop pre-trained foundation models in the field of nasal endoscopy to achieve better accuracy and generalization ability. As endoscope manufacturing technology continues to improve, this algorithm model can be integrated with home-use endoscopes, potentially enabling postoperative home examinations for CRS, providing patients with more convenient follow-up options and achieving more efficient and high-quality full-process management.

Materials and methods

Datasets

Based on the recovery status of the local mucosa in the surgical cavity after ESS, the surgical cavity is classified into three states: “polyp (including cysts)”, “edema”, and “smooth”. These states correspond to different intervention strategies: “polyp” may require surgical intervention, “edema” necessitates medication control, and “smooth” only requires regular follow-up. This study is a retrospective analysis. Data from CRS postoperative nasal endoscopy examinations conducted at the Eye & ENT Hospital of Fudan University and Zhongshan Hospital Fudan University from May 2020 to the present were collected. Based on the nasal endoscopy reports, diagnostic data for the three states (“polyp”, “edema”, and “smooth”) were preliminarily screened. Two endoscopy experts with over 15 years of clinical experience reviewed the images. Ultimately, a dataset of approximately 2,000 postoperative nasal endoscopy images was created and used for training and validating the CRS postoperative outcome model. The study was approved by the Ethics Committee of the Eye & ENT Hospital of Fudan University, and the requirement for written informed consent was waived for all patients (Ethics Approval Number: 2023188-1).

Model establishment

Due to the complexity of the nasal cavity condition after CRS surgery and the fact that the quality of endoscopic images is influenced by factors such as instrument model, shooting angle and position, and the surgeon’s technique, traditional model training methods are prone to overfitting when the training dataset is limited [24], making it difficult to achieve optimal diagnostic accuracy and stability. To obtain the best classification performance with a limited dataset, this study employed three pre-trained models as base models: CLIP, based on a large-scale general visual dataset; MedSAM, based on a comprehensive medical image dataset; and Endo-FM, based on a large-scale endoscopic image dataset. Transfer learning was then applied using the dataset constructed in the earlier phase. To assess the effectiveness of transfer learning from pre-trained foundation models, a model trained from random initialization (Scratch) was used as the baseline for comparison. In terms of the basic network structure, the study employed a ViT-based architecture for image encoding, with a Multi-Layer Perceptron (MLP) serving as the classification head. Additionally, a comparative experiment using a ResNet-based architecture with random initialization was conducted to systematically evaluate the classification performance of different network structures for the CRS postoperative nasal endoscopy diagnostic model.

Specifically, we adopted the ViT-B/32 model as the base network. The input image size was set to 224 × 224 × 3, and each image was divided into 49 patches (7 × 7) of 32 × 32 pixels, as shown in Fig. 4. To perform the image classification task, a classification token (CLS token) was prepended to the sequence of patch embeddings, giving a sequence of 50 tokens in total. Each patch was flattened into a one-dimensional vector of length 3072 (32 × 32 × 3) and linearly projected, as shown in formula (1). Here, \(\mathbf{x}\in\mathbb{R}^{H\times W\times C}\) represents the input image, P is the side length of each patch, E is the projection matrix for the image patches, \(\mathbf{E}_{pos}\) is the position embedding, D is the embedding dimension, and N is the total number of patches:

Fig. 4. Structure of the CRS postoperative outcome model


$$\mathbf{z}_{0}=\left[\mathbf{x}_{\text{class}};\,\mathbf{x}_{p}^{1}\mathbf{E};\,\mathbf{x}_{p}^{2}\mathbf{E};\,\cdots;\,\mathbf{x}_{p}^{N}\mathbf{E}\right]+\mathbf{E}_{pos},\quad \mathbf{E}\in\mathbb{R}^{\left(P^{2}\cdot C\right)\times D},\ \mathbf{E}_{pos}\in\mathbb{R}^{(N+1)\times D} \tag{1}$$
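The sizes quoted for formula (1) follow directly from the image and patch dimensions. The short sketch below reproduces that arithmetic; the function name is hypothetical, and the embedding dimension D is left out because it is not stated in the text.

```python
def vit_token_shapes(height=224, width=224, channels=3, patch=32):
    """Return (num_patches, sequence_length, flattened_patch_dim) for a ViT input."""
    if height % patch or width % patch:
        raise ValueError("image size must be divisible by the patch size")
    num_patches = (height // patch) * (width // patch)  # N
    seq_len = num_patches + 1                           # N patch tokens + 1 class token
    flat_dim = patch * patch * channels                 # P^2 * C
    return num_patches, seq_len, flat_dim


print(vit_token_shapes())  # (49, 50, 3072)
```

With these values, the projection matrix E has 3072 rows and the position embedding has 50 rows, one per token.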

Subsequently, the embedded sequence \(\mathbf{z}_{0}\) is passed through the ViT encoder for image information encoding. In each layer, the sequence is layer-normalized and processed by the Multi-Head Self-Attention (MSA) module and then by the MLP, each with a residual connection. For each attention head, distinct query (Q), key (K), and value (V) matrices are generated. The calculations are given in formulas (2)–(3), where LN denotes layer normalization and L denotes the number of layers in the network, which is set to 12 in this study:

$$\mathbf{z}^{\prime}_{\ell}=\text{MSA}\left(\text{LN}\left(\mathbf{z}_{\ell-1}\right)\right)+\mathbf{z}_{\ell-1},\quad \ell=1\ldots L, \tag{2}$$

$$\mathbf{z}_{\ell}=\text{MLP}\left(\text{LN}\left(\mathbf{z}^{\prime}_{\ell}\right)\right)+\mathbf{z}^{\prime}_{\ell},\quad \ell=1\ldots L. \tag{3}$$
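The wiring of formulas (2)–(3), normalization before each sublayer and a residual connection around it, can be sketched in plain Python. This is not the authors' implementation: the MSA and MLP sublayers are passed in as placeholder callables (an assumption made so the example stays short), and the layer normalization omits the learned scale and shift.

```python
import math


def layer_norm(vec, eps=1e-6):
    """Normalize one token vector to zero mean and unit variance (no learned scale/shift)."""
    mean = sum(vec) / len(vec)
    var = sum((v - mean) ** 2 for v in vec) / len(vec)
    return [(v - mean) / math.sqrt(var + eps) for v in vec]


def encoder_layer(tokens, msa, mlp):
    """One layer: z' = MSA(LN(z)) + z, then z_out = MLP(LN(z')) + z'."""
    normed = [layer_norm(t) for t in tokens]
    z_prime = [[a + b for a, b in zip(out, t)]
               for out, t in zip(msa(normed), tokens)]
    normed = [layer_norm(t) for t in z_prime]
    return [[a + b for a, b in zip(out, t)]
            for out, t in zip(mlp(normed), z_prime)]


def encode(tokens, layers):
    """Apply the encoder layers in sequence; `layers` is a list of (msa, mlp) pairs."""
    for msa, mlp in layers:
        tokens = encoder_layer(tokens, msa, mlp)
    return tokens


def zero_sublayer(seq):
    """Placeholder sublayer that outputs zeros, so only the residual path contributes."""
    return [[0.0] * len(t) for t in seq]


z0 = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]          # two toy tokens of width 3
zL = encode(z0, [(zero_sublayer, zero_sublayer)] * 12)  # L = 12 layers
print(zL == z0)  # True: with zero sublayers the input passes through unchanged
```

The zero-output placeholders make the residual path visible: whatever the sublayers compute is added to, rather than substituted for, the incoming token sequence.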

Finally, the image features obtained from the Transformer Encoder are input into an MLP network to perform the image classification task. This study focuses on a three-class classification task, aiming to determine the pathological condition of the input nasal endoscopy images.

For transfer training, this study used three sets of pre-trained model parameters (CLIP, MedSAM, and Endo-FM) to initialize the Transformer Encoder of the model, and a classification MLP head was added after the encoder. Initialization from the general visual image dataset used the parameters of the Vision Encoder module in CLIP; initialization from multiple medical imaging datasets used the parameters of the Encoder module of MedSAM; and initialization from the endoscopic image dataset used the parameters of the Student Video Transformer module in Endo-FM. The MLP head parameters were initialized randomly, and the transferred backbone network was fine-tuned during training. Training used the cross-entropy loss function shown in formula (4), where \(p_{ic}\) is the model’s predicted probability that sample i belongs to class c, \(y_{ic}\) is the corresponding label value, M is the number of classes, and N is the number of samples. Finally, inference was performed with the optimal model obtained from training, completing the diagnosis of the three surgical cavity states: “polyp”, “edema”, and “smooth”.

$$L_{cls}=-\frac{1}{N}\sum_{i}\sum_{c=1}^{M} y_{ic}\log\left(p_{ic}\right). \tag{4}$$
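As a worked illustration of formula (4), the sketch below computes the mean cross-entropy for a handful of made-up logits. The helper names and the toy values are assumptions for illustration; the class indices follow the ordering used in the ROC figure caption (0 = smooth, 1 = edema, 2 = polyp).

```python
import math


def softmax(logits):
    """Convert raw scores to probabilities, subtracting the max for numerical stability."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(probs, targets, eps=1e-12):
    """Mean cross-entropy over N samples; `targets` holds the true class indices."""
    # With one-hot labels y_ic, the inner sum over classes keeps only the
    # log-probability assigned to the true class of each sample.
    losses = [-math.log(max(p[t], eps)) for p, t in zip(probs, targets)]
    return sum(losses) / len(losses)


# Toy logits for M = 3 classes; the numbers are invented for the example.
logits = [[2.0, 0.5, 0.1], [0.2, 1.5, 0.3], [0.0, 0.0, 0.0]]
targets = [0, 1, 2]
loss = cross_entropy([softmax(row) for row in logits], targets)
print(round(loss, 4))
```

A useful sanity check is that a uniform prediction over three classes gives a loss of log 3, roughly 1.0986, which is the value an untrained three-class model should start near.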

Evaluation metrics

The model described above was trained on the constructed CRS postoperative outcome diagnostic training set. During the training process, the model with the highest classification accuracy (ACC) on the validation set was selected as the optimal model and subsequently evaluated for diagnostic performance on the test set. In the diagnostic performance evaluation, a one-vs-rest strategy was employed to achieve multi-class recognition. In this approach, each category is treated as a separate binary classification task, where one class is considered the positive class and the remaining classes are grouped as the negative class. For each category, the model was trained to distinguish the target class from all other classes. The accuracy (%), sensitivity (%), specificity (%), F1 score, and area under the receiver operating characteristic curve (AUC) were calculated based on the comparison between positive and negative classes, with each metric reported alongside its 95% confidence interval. In the ROC curve, the vertical axis represents sensitivity (true positive rate), and the horizontal axis represents 1 − specificity (false positive rate). When AUC < 0.5, the model has no diagnostic value; when 0.5 ≤ AUC < 0.7, the model has low diagnostic value; when 0.7 ≤ AUC < 0.9, the model has moderate diagnostic value; and when AUC ≥ 0.9, the model has high diagnostic value. As a supplement, we also evaluated the model’s AUC performance under both micro-average and macro-average multi-class evaluation strategies.
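The one-vs-rest metrics described above can be derived from a multi-class confusion matrix by collapsing it to a 2 × 2 table for the chosen positive class. The sketch below shows one way to do this; the function name is hypothetical and the confusion matrix is invented for illustration, so its numbers are not the study's results.

```python
def one_vs_rest_metrics(confusion, positive):
    """Binary metrics for class `positive` from a square confusion matrix.

    confusion[i][j] = number of samples with true class i predicted as class j.
    """
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    tp = confusion[positive][positive]
    fn = sum(confusion[positive]) - tp                        # positives missed
    fp = sum(confusion[i][positive] for i in range(n)) - tp   # rest called positive
    tn = total - tp - fn - fp
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    precision = tp / (tp + fp)
    return {
        "accuracy": (tp + tn) / total,
        "sensitivity": sensitivity,
        "specificity": specificity,
        "f1": 2 * precision * sensitivity / (precision + sensitivity),
    }


# Made-up confusion matrix (rows: true class, columns: predicted class)
# with 50 test images per class.
toy = [
    [45, 4, 1],   # smooth
    [5, 40, 5],   # edema
    [2, 8, 40],   # polyp
]
smooth_vs_rest = one_vs_rest_metrics(toy, positive=0)
print(smooth_vs_rest)
```

For the toy matrix, smooth vs. rest gives 45 true positives, 5 false negatives, 7 false positives, and 93 true negatives, so sensitivity is 0.90 and specificity is 0.93. The sketch does not guard against empty classes, where the divisions would fail.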

Data availability

No datasets were generated or analyzed during the current study.

Abbreviations

- CRS: Chronic rhinosinusitis

- ESS: Endoscopic sinus surgery

- AUC: Area under the receiver operating characteristic curve

- ROC: Receiver operating characteristic curve

- AI: Artificial intelligence

- CNNs: Convolutional neural networks

- CT: Computed tomography

- ViT: Vision transformer

- MLP: Multi-layer perceptron

- MSA: Multi-head self-attention

- ACC: Accuracy

References

1. Zhonghua Er Bi Yan Hou Tou Jing Wai Ke Za Zhi Subspecialty Group of Rhinology, Editorial Board of Chinese Journal of Otorhinolaryngology Head and Neck Surgery, Subspecialty Group of Rhinology, Society of Otorhinolaryngology Head and Neck Surgery, Chinese Medical Association. [Chinese guidelines for diagnosis and treatment of chronic rhinosinusitis (2018)]. 2019;54:81–100.

2. Zhonghua Er Bi Yan Hou Tou Jing Wai Ke Za Zhi Subspecialty Group of Rhinology, Editorial Board of Chinese Journal of Otorhinolaryngology Head and Neck Surgery, Subspecialty Group of Rhinology, Society of Otorhinolaryngology Head and Neck Surgery, Chinese Medical Association. [Guidelines for diagnosis and treatment of chronic rhinosinusitis (2012, Kunming)]. 2013;48:92–4.

3. Matheny KE, Tseng EY, Carter KB, Cobb WB, Fong KJ. Self-cross-linked hyaluronic acid hydrogel in ethmoidectomy: a randomized, controlled trial. Am J Rhinol Allergy. 2014;28:508–13.

4. Krizhevsky A, Sutskever I, Hinton GE. Imagenet classification with deep convolutional neural networks. Commun ACM. 2017;60:84–90.

5. Ardila D, Kiraly AP, Bharadwaj S, Choi B, Reicher JJ, Peng L, et al. End-to-end lung cancer screening with three-dimensional deep learning on low-dose chest computed tomography. Nat Med. 2019;25:954–61.

6. Cai S-L, Li B, Tan W-M, Niu X-J, Yu H-H, Yao L-Q, et al. Using a deep learning system in endoscopy for screening of early esophageal squamous cell carcinoma (with video). Gastrointest Endosc. 2019;90:745-753.e2.

7. Lin D, Xiong J, Liu C, Zhao L, Li Z, Yu S, et al. Application of comprehensive artificial intelligence retinal expert (CARE) system: a national real-world evidence study. Lancet Digit Health. 2021;3:e486–95.

8. Li C, Jing B, Ke L, Li B, Xia W, He C, et al. Development and validation of an endoscopic images-based deep learning model for detection with nasopharyngeal malignancies. Cancer Commun (Lond). 2018;38:59.

9. Wang S-X, Li Y, Zhu J-Q, Wang M-L, Zhang W, Tie C-W, et al. The detection of nasopharyngeal carcinomas using a neural network based on nasopharyngoscopic images. Laryngoscope. 2024;134:127–35.

10. Xu J, Wang J, Bian X, Zhu J-Q, Tie C-W, Liu X, et al. Deep learning for nasopharyngeal carcinoma identification using both white light and narrow-band imaging endoscopy. Laryngoscope. 2022;132:999–1007.

11. Shi Y, Wang H, Ji H, Liu H, Li Y, He N, et al. A deep weakly semi-supervised framework for endoscopic lesion segmentation. Med Image Anal. 2023;90:102973.

12. Girdler B, Moon H, Bae MR, Ryu SS, Bae J, Yu MS. Feasibility of a deep learning-based algorithm for automated detection and classification of nasal polyps and inverted papillomas on nasal endoscopic images. Int Forum Allergy Rhinol. 2021;11:1637–46.

13. Bi M, Zheng S, Li X, Liu H, Feng X, Fan Y, et al. MIB-ANet: A novel multi-scale deep network for nasal endoscopy-based adenoid hypertrophy grading. Front Med (Lausanne). 2023;10:1142261.

14. He Z, Zhang K, Zhao N, Wang Y, Hou W, Meng Q, et al. Deep learning for real-time detection of nasopharyngeal carcinoma during nasopharyngeal endoscopy. iScience. 2023;26:107463.

15. Radford A, Kim JW, Hallacy C, Ramesh A, Goh G, Agarwal S, et al. Learning Transferable Visual Models From Natural Language Supervision. arXiv; 2021. http://arxiv.org/abs/2103.00020. Accessed 12 Mar 2025.

16. Ma J, He Y, Li F, Han L, You C, Wang B. Segment anything in medical images. Nat Commun. 2024;15:654.

17. Wang Z, Liu C, Zhang S, Dou Q. Foundation Model for Endoscopy Video Analysis via Large-scale Self-supervised Pre-train. arXiv; 2024. http://arxiv.org/abs/2306.16741. Accessed 12 Mar 2025.

18. Dosovitskiy A, Beyer L, Kolesnikov A, Weissenborn D, Zhai X, Unterthiner T, et al. An Image is Worth 16×16 Words: Transformers for Image Recognition at Scale. arXiv; 2021. http://arxiv.org/abs/2010.11929. Accessed 9 May 2024.

19. He K, Zhang X, Ren S, Sun J. Deep Residual Learning for Image Recognition. arXiv; 2015. http://arxiv.org/abs/1512.03385. Accessed 24 Jan 2024.

20. Huang Z, Wang H, Deng Z, Ye J, Su Y, Sun H, et al. STU-Net: Scalable and Transferable Medical Image Segmentation Models Empowered by Large-Scale Supervised Pre-training. arXiv; 2023. http://arxiv.org/abs/2304.06716. Accessed 12 Mar 2025.

21. Cui B, Islam M, Bai L, Ren H. Surgical-DINO: adapter learning of foundation models for depth estimation in endoscopic surgery. Int J Comput Assist Radiol Surg. 2024;19:1013–20.

22. Esteva A, Kuprel B, Novoa RA, Ko J, Swetter SM, Blau HM, et al. Dermatologist-level classification of skin cancer with deep neural networks. Nature. 2017;542:115–8.

23. Torres-Soto J, Ashley EA. Multi-task deep learning for cardiac rhythm detection in wearable devices. NPJ Digit Med. 2020;3:116.

24. Alzubaidi L, Al-Amidie M, Al-Asadi A, Humaidi AJ, Al-Shamma O, Fadhel MA, et al. Novel transfer learning approach for medical imaging with limited labeled data. Cancers (Basel). 2021;13:1590.

Funding

This work was sponsored by the National Natural Science Foundation of China (82371123, 82301276); the Shanghai Science and Technology Committee Foundation (23Y31900500); the New Technologies of Endoscopic Surgery in Skull Base Tumor: CAMS Innovation Fund for Medical Sciences (CIFMS) (2019-I2M-5-003); the National Key Clinical Specialty Construction Project (Z155080000004); and the Shanghai Sailing Program (23YF140480, 24YF2745500).

Ethics declarations

Ethics approval and consent to participate

The current study was conducted in accordance with the 1964 Declaration of Helsinki and was approved by the Institutional Research Ethics Committee of Eye & ENT Hospital of Fudan University. The requirement for written informed consent was waived for all patients (Ethics Approval Number: 2023188-1).

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.


About this article

Cite this article

Gong, W., Chen, K., Chen, X. et al. Postoperative outcome analysis of chronic rhinosinusitis using transfer learning with pre-trained foundation models based on endoscopic images: a multicenter, observational study. BioMed Eng OnLine 24, 95 (2025). https://doi.org/10.1186/s12938-025-01428-y


DOI: https://doi.org/10.1186/s12938-025-01428-y