
Statistical Mechanics Explains Heavy-Tailed Self-Regularization In Neural Networks

The enduring mystery of why deep neural networks learn so effectively has moved a step closer to resolution with a new theoretical framework from Charles H. Martin of Calculation Consulting, Christopher Hinrichs of Onyx Point Systems, and colleagues. Their work introduces a Semi-Empirical Theory of Learning (SETOL), which provides a formal explanation for the origins of key metrics already known to predict the performance of state-of-the-art neural networks. Crucially, these metrics require no access to training or testing data. Drawing on techniques from statistical mechanics and random matrix theory, the researchers identify mathematical conditions that appear essential for successful learning, including a novel metric, termed ERG, that reflects the principles of renormalization group theory. With strong agreement between theory and experiment on both simple and complex neural networks, SETOL offers a new lens for understanding the inner workings of deep learning and could guide the development of more effective artificial intelligence.

Researchers have demonstrated that two key metrics, alpha and alpha-hat, accurately predict the performance of modern neural networks without requiring access to training or testing data. This work introduces the Semi-Empirical Theory of Learning (SETOL), a framework that explains these predictive metrics by combining insights from statistical mechanics and random matrix theory. SETOL identifies mathematical conditions that define ideal learning and introduces a novel metric, ERG, which conceptually mirrors the Wilson Exact Renormalization Group, a procedure used in statistical physics to simplify complex systems.

Random Matrix Models and Free Probability Tools

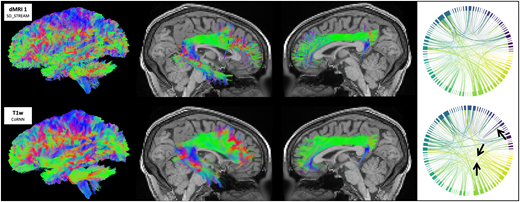

This research examines the mathematical properties of random matrices and how to model them using tools from free probability. The work focuses on the spectral distribution of these matrices, that is, the distribution of their eigenvalues, which is also central to applications in signal processing and dimensionality reduction. Free probability provides a framework for analyzing how the spectra of large random matrices behave when the matrices are combined, for example when they are added together. Key concepts include the Inverse Marchenko-Pastur (IMP) model, which builds on the Marchenko-Pastur law for the limiting spectral distribution of large sample covariance matrices, and the R-transform, a function that encodes spectral properties and simplifies calculations involving combined matrices.
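As a point of reference for the random matrix models mentioned above, the following is a minimal sketch of the standard Marchenko-Pastur law, not the IMP model used in the paper. It assumes a purely random Gaussian matrix with unit-variance entries; the names `mp_edges`, `mp_density`, and `sample_covariance_eigs` are hypothetical helpers introduced only for illustration.

```python
import numpy as np

def mp_edges(q, sigma2=1.0):
    """Bulk edges of the Marchenko-Pastur law for aspect ratio q = M/N <= 1."""
    root_q = np.sqrt(q)
    return sigma2 * (1 - root_q) ** 2, sigma2 * (1 + root_q) ** 2

def mp_density(lam, q, sigma2=1.0):
    """Marchenko-Pastur density at lam; zero outside the bulk edges."""
    lo, hi = mp_edges(q, sigma2)
    lam = np.asarray(lam, dtype=float)
    inside = (lam > lo) & (lam < hi)
    rho = np.zeros_like(lam)
    rho[inside] = np.sqrt((hi - lam[inside]) * (lam[inside] - lo)) / (
        2 * np.pi * sigma2 * q * lam[inside]
    )
    return rho

def sample_covariance_eigs(n_rows, n_cols, seed=0):
    """Eigenvalues of W^T W / N for an N x M matrix of i.i.d. N(0, 1) entries."""
    rng = np.random.default_rng(seed)
    w = rng.standard_normal((n_rows, n_cols))
    return np.linalg.eigvalsh(w.T @ w / n_rows)

# Assumed illustrative sizes: N = 1000 rows, M = 250 columns.
N, M = 1000, 250
eigs = sample_covariance_eigs(N, M)
lo, hi = mp_edges(M / N)
print(f"MP bulk edges: [{lo:.3f}, {hi:.3f}]")
print(f"empirical eigenvalue range: [{eigs.min():.3f}, {eigs.max():.3f}]")
```

For a purely random matrix, the empirical eigenvalues should fall close to the predicted bulk. Eigenvalues of a trained layer that extend well beyond the upper edge are the kind of departure from randomness that a heavy-tailed analysis looks for.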

The research details the calculation of the G-function, the Green's function (or Stieltjes transform) that encodes the spectral distribution of a random matrix, and highlights the importance of handling branch cuts, the curves in the complex plane across which the R-transform is discontinuous. The tools of free probability require the R-transform to be analytic in the region where it is used, a condition often met by truncating the power-law tail of the spectral distribution. The attention to these details reflects the rigor of the work, which has potential applications in signal processing, dimensionality reduction, and machine learning.
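To make the relationship between the G-function and the R-transform concrete, here is a small numerical sketch. It uses the standard Marchenko-Pastur law rather than the paper's IMP model, and relies on two textbook facts: the R-transform of the Marchenko-Pastur law is R(g) = σ² / (1 − q σ² g), and the two functions are linked by z = R(G(z)) + 1/G(z). The function names and matrix sizes are assumptions chosen for illustration.

```python
import numpy as np

def empirical_g(z, eigs):
    """Empirical Green's function G(z) = mean over i of 1 / (z - lambda_i)."""
    return np.mean(1.0 / (z - eigs))

def mp_r_transform(g, q, sigma2=1.0):
    """R-transform of the Marchenko-Pastur law with aspect ratio q = M/N."""
    return sigma2 / (1.0 - q * sigma2 * g)

# Random N x M Gaussian matrix and the eigenvalues of W^T W / N.
rng = np.random.default_rng(0)
N, M = 1000, 250
w = rng.standard_normal((N, M))
eigs = np.linalg.eigvalsh(w.T @ w / N)

# Evaluate G at a real point to the right of the spectrum, away from the
# branch cut that runs along the bulk of the eigenvalue density.
z = 5.0
g = empirical_g(z, eigs)

# The defining relation z = R(G(z)) + 1/G(z) should recover z approximately.
z_recovered = mp_r_transform(g, M / N) + 1.0 / g
print(f"G({z}) = {g:.4f}, recovered z = {z_recovered:.4f}")
```

The evaluation point is deliberately placed outside the spectrum. Inside the bulk, G(z) must be evaluated slightly off the real axis, which is where the care about branch cuts and analyticity comes in.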

ERG Metric Explains Neural Network Learning

Researchers have developed a new theoretical framework, the Semi-Empirical Theory of Learning (SETOL), to explain the remarkable performance of modern neural networks. The theory provides a formal explanation for two key metrics, alpha and alpha-hat, which earlier work found to predict the performance of state-of-the-art neural networks accurately without access to training or testing data. SETOL combines insights from statistical mechanics and random matrix theory to describe how these networks learn and generalize. At its core, SETOL identifies mathematical conditions that define ideal learning and introduces a new metric, ERG, which conceptually mirrors a key procedure in statistical physics called the Wilson Exact Renormalization Group.

Tests on a simple neural network model showed strong agreement with the theory's predictions, supporting its underlying assumptions. Importantly, the research finds a strong alignment between the alpha metric from Heavy-Tailed Self-Regularization (HTSR) and the SETOL ERG metric, not only in the test model but also in complex, state-of-the-art neural networks. The work moves beyond purely descriptive approaches by deriving its results from first principles, explaining why specific patterns emerge in the weight matrices of well-trained networks. The theory suggests that the optimal learning state is marked by a critical exponent of α = 2, which represents a boundary between effective generalization and overfitting, analogous to phase transitions observed in physics.
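To illustrate what estimating a power-law exponent such as α involves, the sketch below applies the standard maximum-likelihood (Hill-type) estimator to synthetic data drawn from a density proportional to x^(−α) with α = 2. This is an illustrative estimator under stated assumptions, not necessarily the fitting procedure the authors use; the function name `hill_alpha` and the choice of a fixed, known `x_min` are assumptions made for simplicity.

```python
import numpy as np

def hill_alpha(values, x_min):
    """Maximum-likelihood estimate of alpha for p(x) ~ x^(-alpha), x >= x_min."""
    tail = np.asarray(values, dtype=float)
    tail = tail[tail >= x_min]
    return 1.0 + tail.size / np.sum(np.log(tail / x_min))

# Synthetic "eigenvalues" with a known tail exponent (assumed for illustration).
rng = np.random.default_rng(0)
true_alpha, x_min = 2.0, 1.0

# rng.pareto(a) draws from a Lomax distribution; adding 1 and scaling by x_min
# gives a classical Pareto sample with density proportional to x^(-(a + 1)).
samples = x_min * (1.0 + rng.pareto(true_alpha - 1.0, size=20000))

alpha_hat = hill_alpha(samples, x_min)
print(f"estimated alpha = {alpha_hat:.3f} (true value {true_alpha})")
```

In practice the same estimator would be applied to the largest eigenvalues of a layer's correlation matrix, and the choice of `x_min`, the point where the power-law tail begins, has a strong influence on the estimate.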

Theory Explains Neural Network Performance and Metrics

This research presents a Semi-Empirical Theory of Learning (SETOL) that explains the performance of modern neural networks. The team provides a formal explanation for two key metrics, alpha and alpha-hat, which were previously shown to predict the accuracy of these networks without needing training or testing data. By applying techniques from statistical mechanics and random matrix theory, they derive mathematical preconditions for effective learning and introduce a new metric, ERG, linked to a procedure called the Wilson Exact Renormalization Group. After testing SETOL on a simple multilayer perceptron and applying it to state-of-the-art networks, the researchers found strong agreement between their theoretical predictions and empirical results. They showed that layer quality can be estimated by analyzing the spectral density of weight matrices, and that these estimates align well with both the existing HTSR alpha metric and the newly developed ERG metric. While the study confirms the theory's ability to explain current network performance, the authors acknowledge limitations in the model's complexity and scope. They suggest that future research explore the theory's applicability to a wider range of network architectures and investigate what the findings imply for improving learning algorithms.